Memory defects such as buffer overflows, double frees, and use-after-free remain a leading cause of security vulnerabilities in low-level programming languages like C and C++. Contemporary heap exploits differ significantly from stack-based attacks. Exploiting heap defects requires more than just triggering a bug, it demands a deep understanding of the

Memory defects such as buffer overflows, double frees, and use-after-free remain a leading cause of security vulnerabilities in low-level programming languages like C and C++. Contemporary heap exploits differ significantly from stack-based attacks. Exploiting heap defects requires more than just triggering a bug, it demands a deep understanding of the underlying memory allocator.

Over the years, several memory allocators have been proposed, each making different trade-offs between performance and resistance to attacks. This post concentrates on the analysis of glibc 2.42’s default memory allocator, ptmalloc, covering key structs used in the glibc's heap implementation, binning logic, allocation and deallocation paths, integrity checks, bitmap scanning, pointer mangling, and the low-level tricks employed by the allocator.

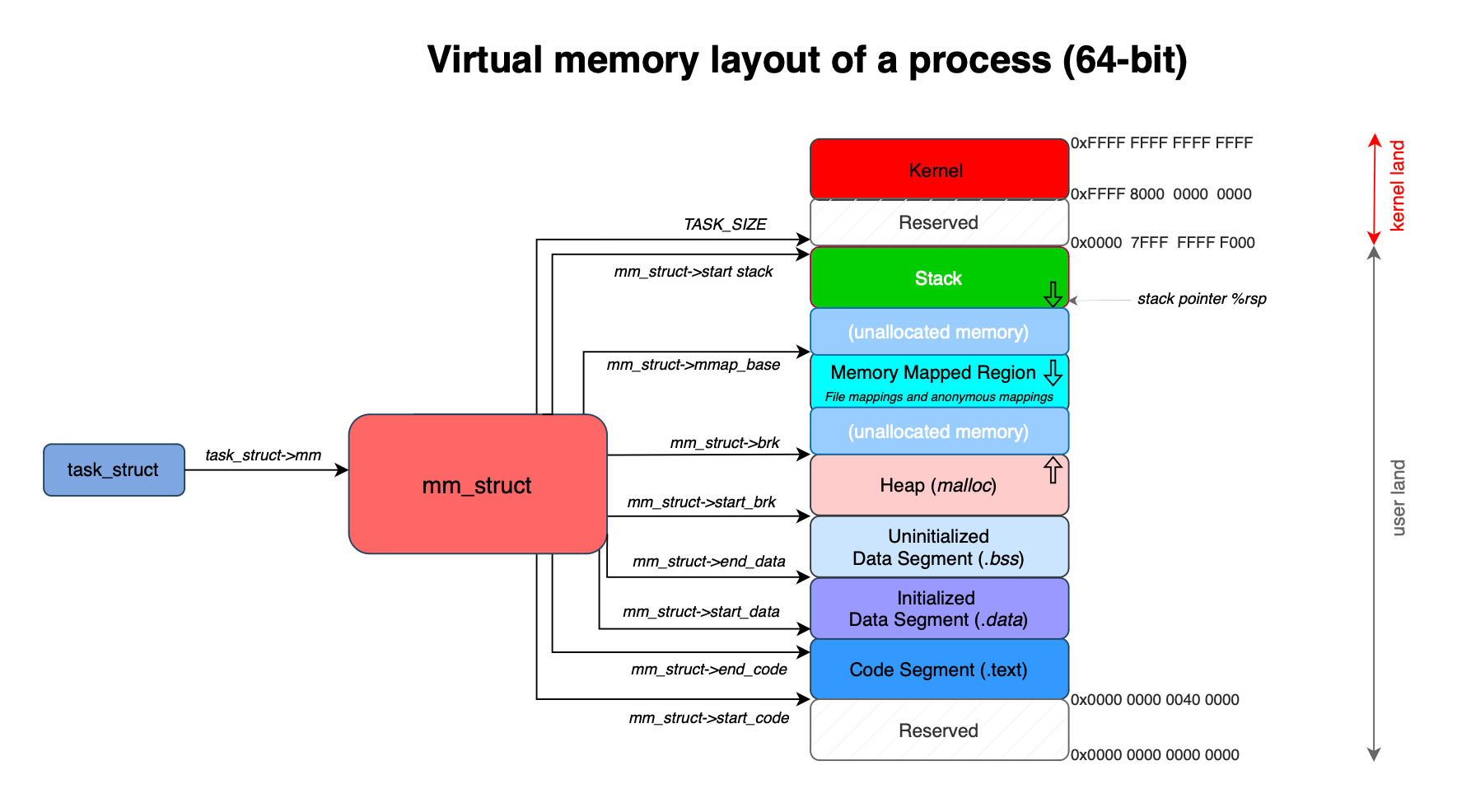

Memory is divided into several segments, including the stack, heap, global, and code segments. The heap represents a portion of virtual memory accessible by programs to request memory dynamically at runtime. Programs typically rely on existing allocators like ptmalloc to request memory (e.g. malloc() or new), delegating the complexity of handling alignment, fragmentation, and performance to the allocator.

Heap corruption occurs when dynamic memory allocation is not managed correctly. These subtle bugs can lead to costly, serious and exploitable vulnerabilities such as privilege escalation, arbitrary code execution, and complete system takeover. Most memory corruptions occur on the heap, which for decades has represented a major source of software defects in programs written in low-level languages like C and C++.

Protecting the heap against attacks is a well-researched problem. The implementation and deployment of defense mechanisms such as ASLR (which randomizes process memory layout, especially effective on 64-bit systems), Non-Executable (NX), and other techniques have significantly raised the bar for attackers. Yet sophisticated, dedicated, and patient adversaries still find ways to bypass most existing defenses.

Heap-based exploits leverage memory safety violations, either spatial (out-of-bounds access) or temporal (accessing freed or invalid memory) such as buffer overflows, double frees, or use-after-free bugs, leading to information disclosure attack, privilege escalation, or arbitrary code execution.

While the primary goal of a memory allocator is to serve chunks dynamically at runtime, not all allocators are designed equally. Some are hardened by design, for example, by segregating heap metadata and preventing predictable heap layouts, whereas others trade security for performance e.g by placing metadata directly adjacent to heap chunks; introducing security risks that are often mitigated through integrity checks.

Heap vulnerabilities differs significantly from stack based memory defects. Stack based vulnerabilities are easier to understand and exploit (though modern mitigations make exploiting them far more difficult today), heap exploitation requires a much deeper understanding of the underlying memory allocator.

Recently, I decided to go low-level and study how heap exploitation techniques are actually developed. I discovered that many of these techniques abuse and corrupt allocator metadata, meaning they are allocator-specific. This makes the heap a prime target for attackers, provided an application vulnerability exists in the first place.

After spending several days studying the internals of glibc’s malloc implementation, I decided to write a post about how the allocator really works under the hood, knowledge that can help anyone interested in learning the foundations of low-level exploitation and memory forensics.

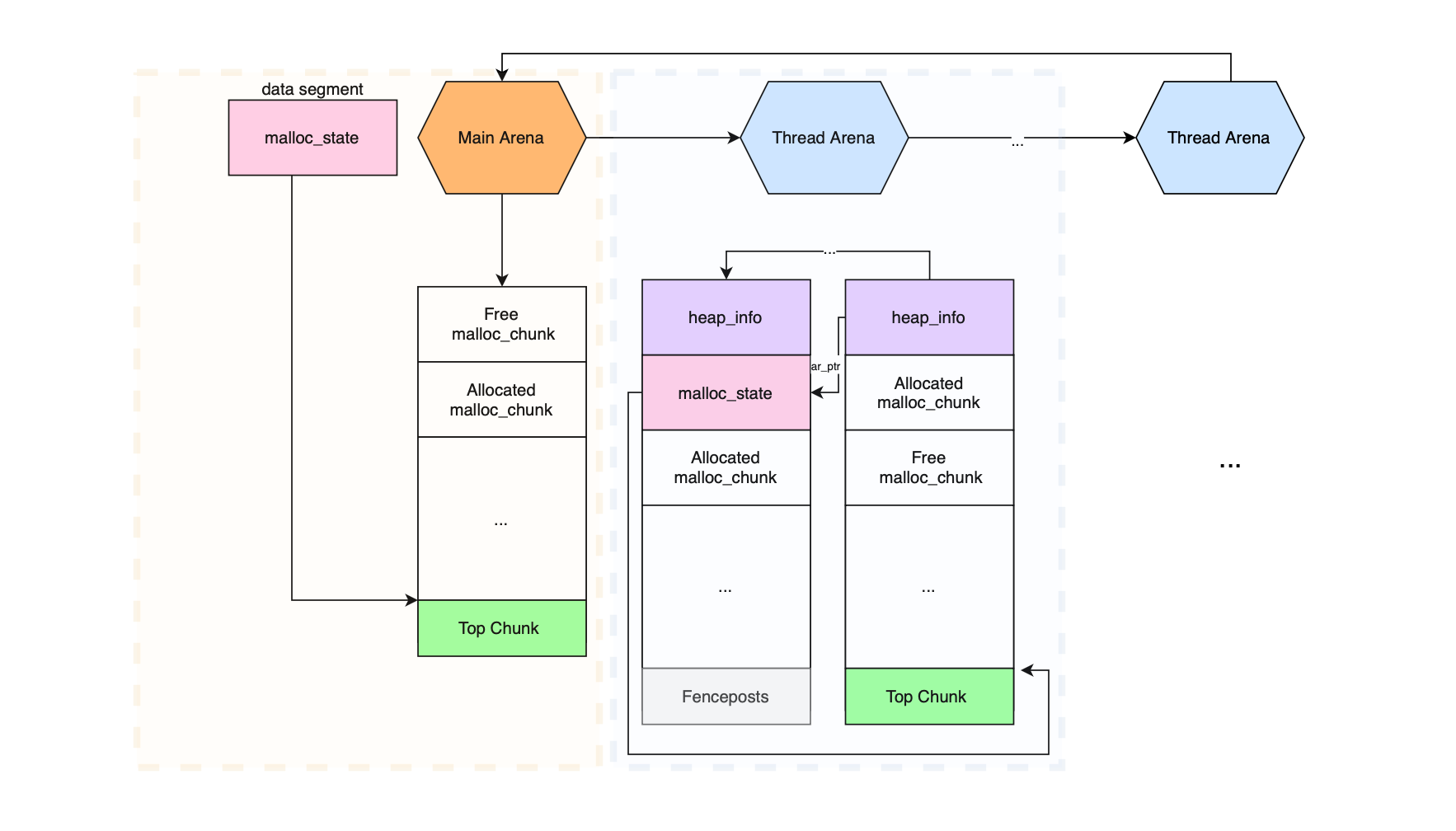

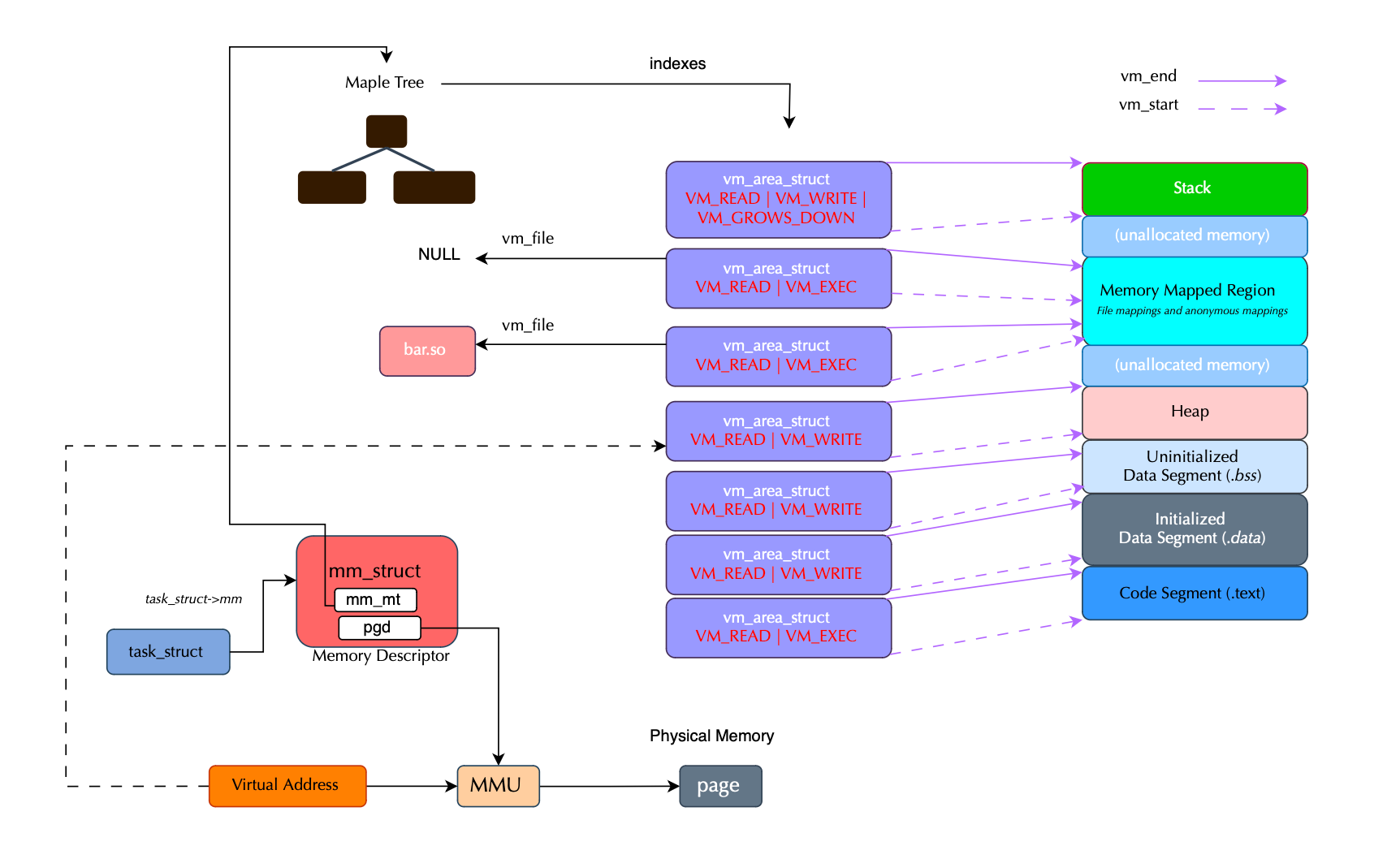

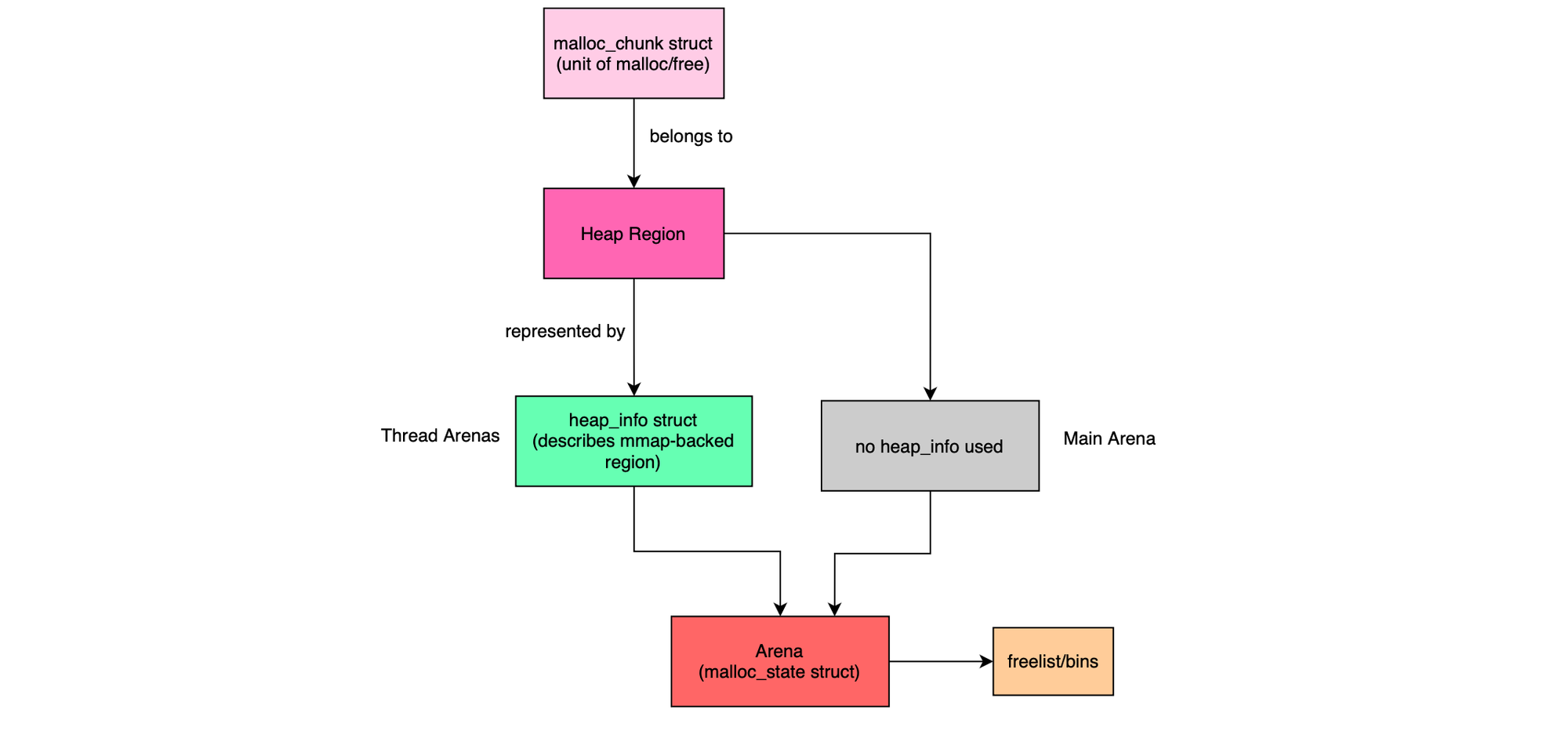

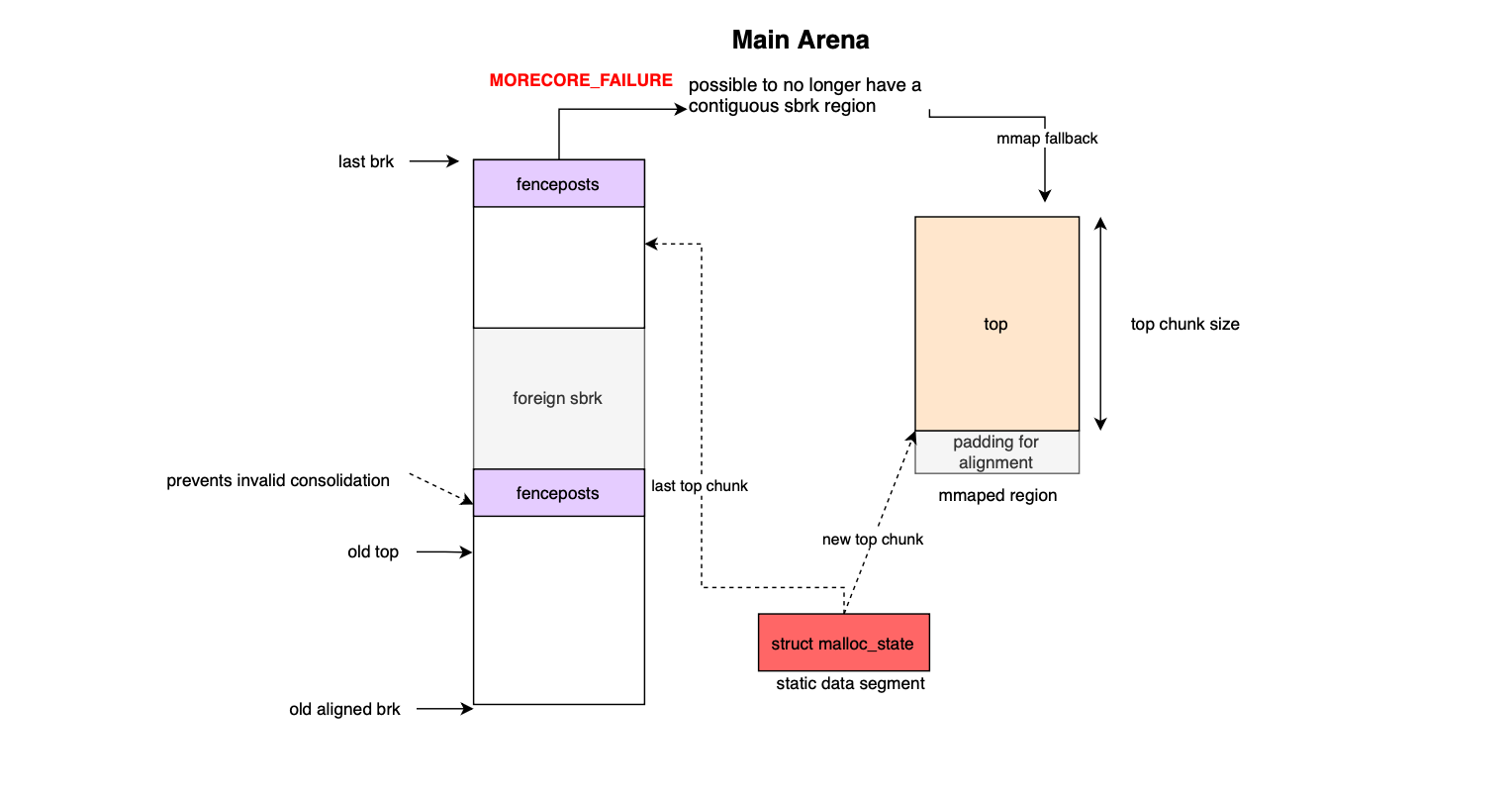

As we will cover in this post, virtual memory is organized into chunks, where each chunk belongs to a heap space (or arena). This could be the traditional process heap, in which case the chunk belongs to the main arena, or a memory-mapped region represented by a vm_area_struct, in which case the chunk belongs to a thread arena.

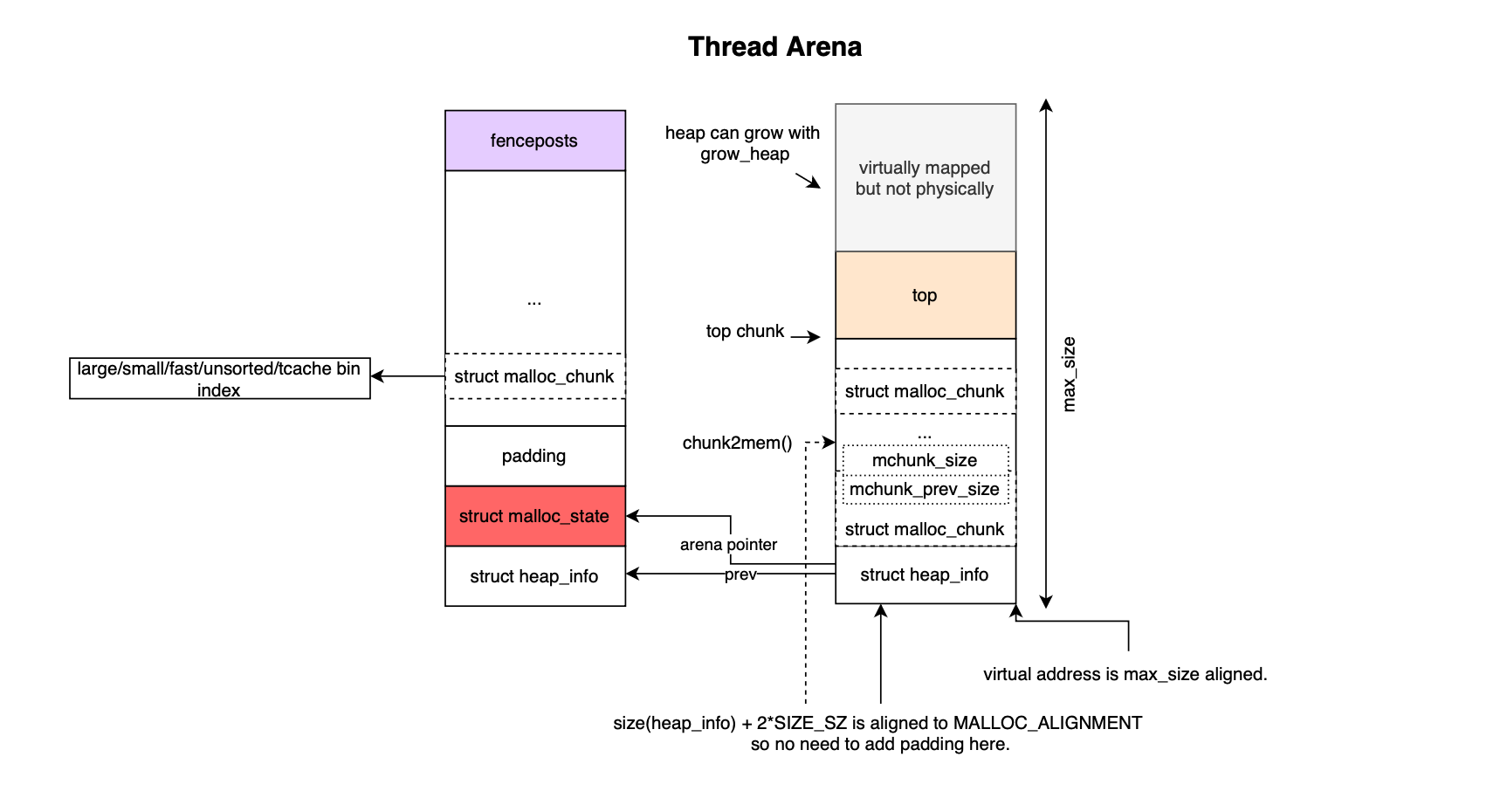

When a thread is assigned to an arena other than the main one, its mapped memory region is represented by a heap_infostructure. This is needed because mmap does not guarantee contiguous memory; when discontinuities occur, heap_infoallows traversal of the heap space managed by an arena.

An arena represents a heap space, storing memory blocks or chunks and belonging to one or more threads. Since malloc limits the number of arenas that can be created, some arenas may eventually be shared across threads. One or more heap_info structures (chained together) belong to a single arena. Thus, a chunk belongs to a heap region represented by a heap_info (except in the main arena), which in turn belongs to an arena represented by a malloc_state struct. During execution, heap_info instances may go away when memory is deallocated and new ones may join during allocation.

There is always a main arena, which uses the standard contiguous process heap region. Because it is contiguous, no heap_info structures are required for the main arena (although over time it can become non-contiguous).

In contrast, to better support multi-threaded applications and reduce synchronization tax, thread-specific arenas were introduced. These arenas consist of multiple heaps (memory-mapped regions) and are attached to different threads. Each thread arena is composed of chained heap_info structures, allocated via mmap().

Finally, when chunks are very large, they are allocated directly via mmap(). Unlike vanilla heap chunks, mmaped chunks are not subject to binning; a bin is a container where freed chunks are linked together.

In this section, internal data structures and concepts of ptmalloc are introduced.

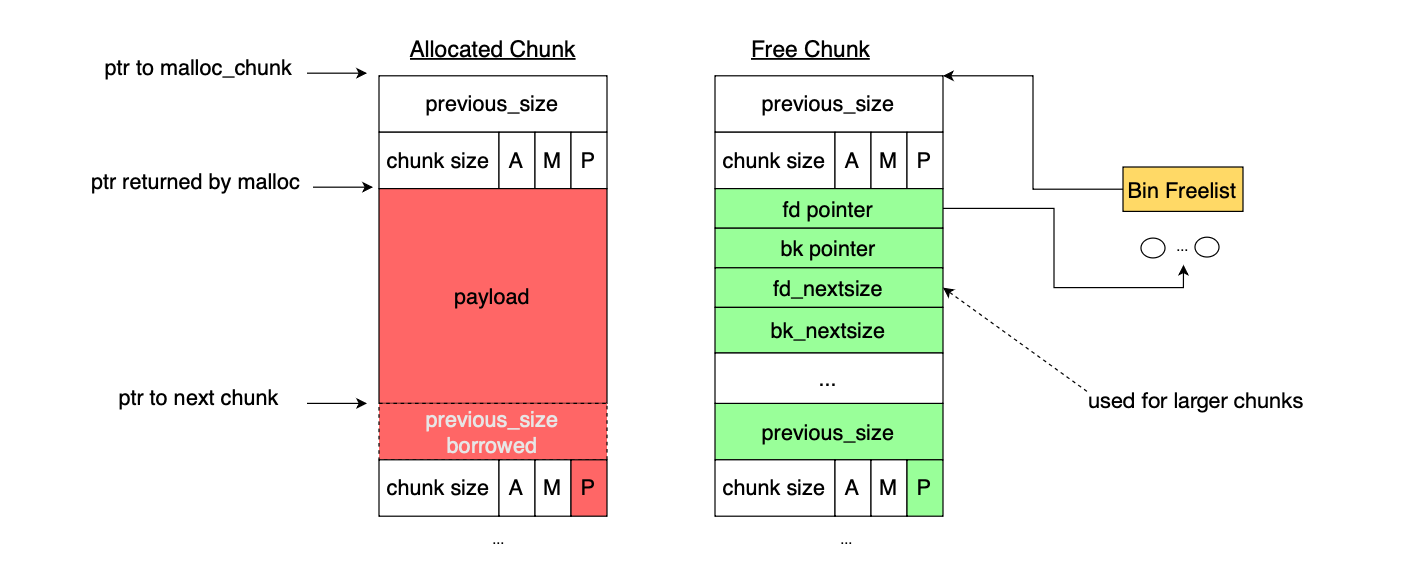

Memory managed by ptmalloc is divided into blocks or chunks, generally housed inside an arena consisting of one or several chained heaps (except the main arena). Each piece of memory or chunk allocated and returned to user code is prefixed with a header, a structure called malloc_chunk. Its definition is shown below:

struct malloc_chunk {

INTERNAL_SIZE_T mchunk_prev_size; /* Size of previous chunk (if free). */

INTERNAL_SIZE_T mchunk_size; /* Size in bytes, including overhead. */

struct malloc_chunk* fd; /* double links -- used only if free. */

struct malloc_chunk* bk;

/* Only used for large blocks: pointer to next larger size. */

struct malloc_chunk* fd_nextsize; /* double links -- used only if free. */

struct malloc_chunk* bk_nextsize;

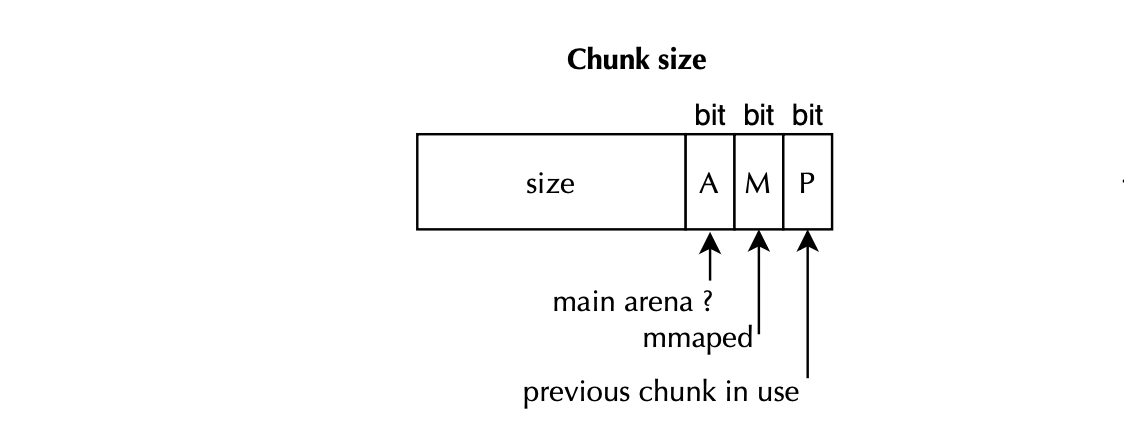

};Chunks sits next to each other in the heap, mchunk_prev_size is the size of the previous chunk, valid only if that chunk is free (otherwise it may house user data). The mchunk_size is the user size of this chunk, including the memory overhead (i.e 16 bytes or 2*INTERNAL_SIZE_T ). Because a chunk is always aligned to MALLOC_ALIGNEMENT =16 bytes by default, mchunk_size stores flags in its least significant bits, which helps during malloc deallocation and consolidation.

P(PREV_INUSE) is stored at lowest order bit and indicates if the previous chunk is free facilitating the malloc consolidation of free chunks as would be covered in more details later. The second lowest order bit, M(IS_MMAPPED), indicates if the chunk was allocated using mmap which would require a different deallocation path e.g munmap as mmaped chunks are not subject for bin indexing. Finally A(NON_MAIN_ARENA) bit if set indicates that the chunk belongs to a thread arena (more on that later).

Let's look at other fields. fd and bk are forward and backward pointers used to link/unlink free chunks in double linked lists (used for binning). The two pointers are stored in the chunk's user accessible memory only if the chunk is free (i.e if the user chunk is allocated then user data would sit at the corresponding memory location).

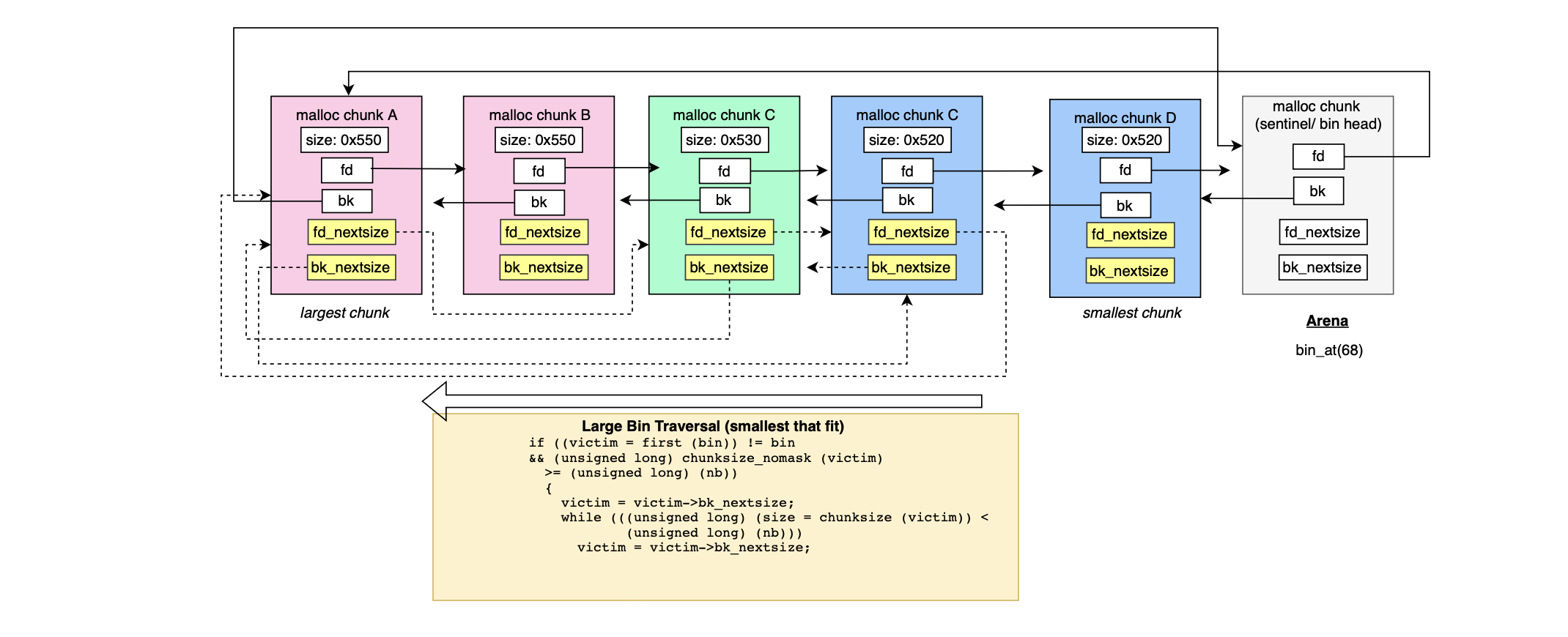

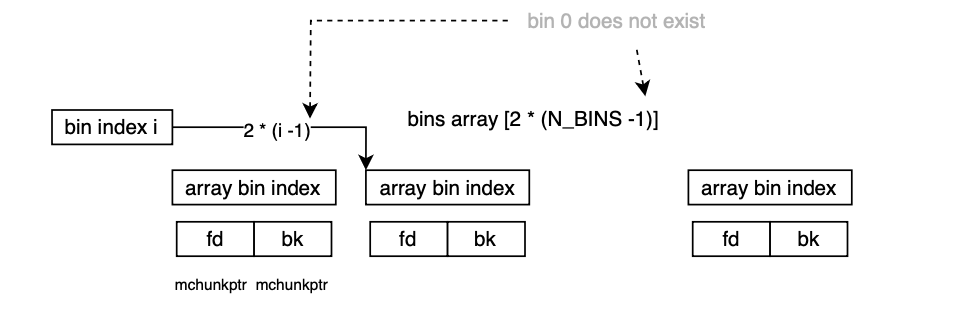

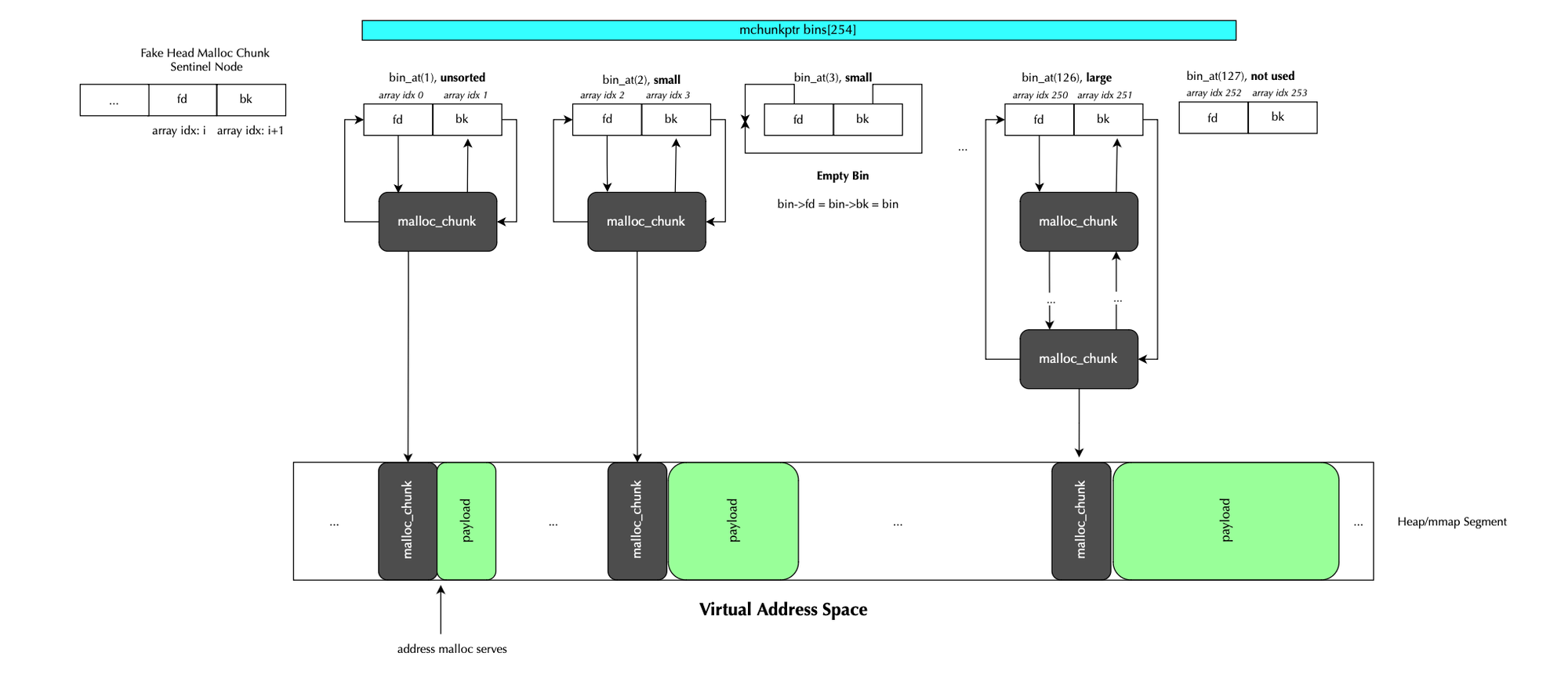

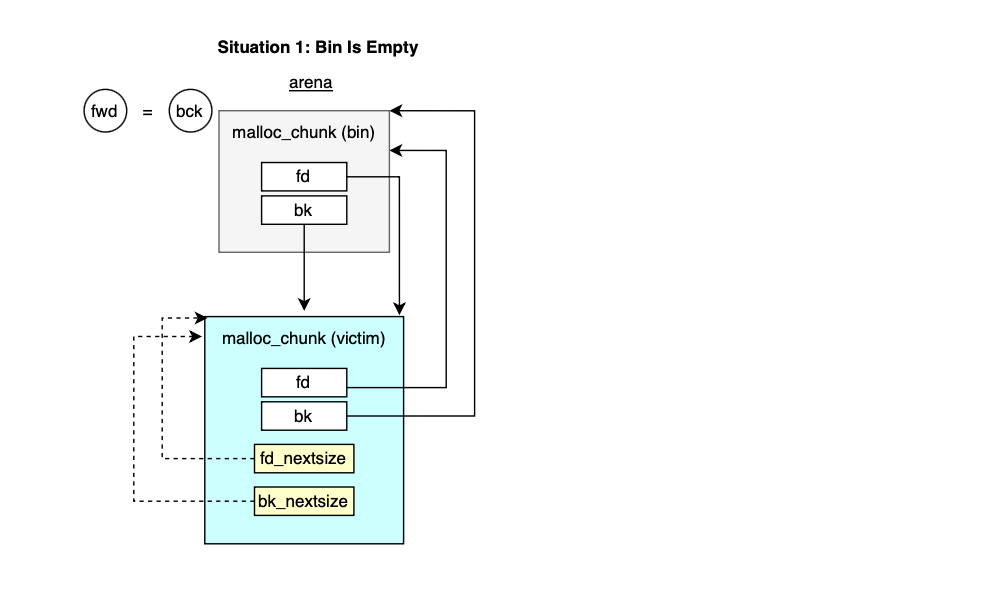

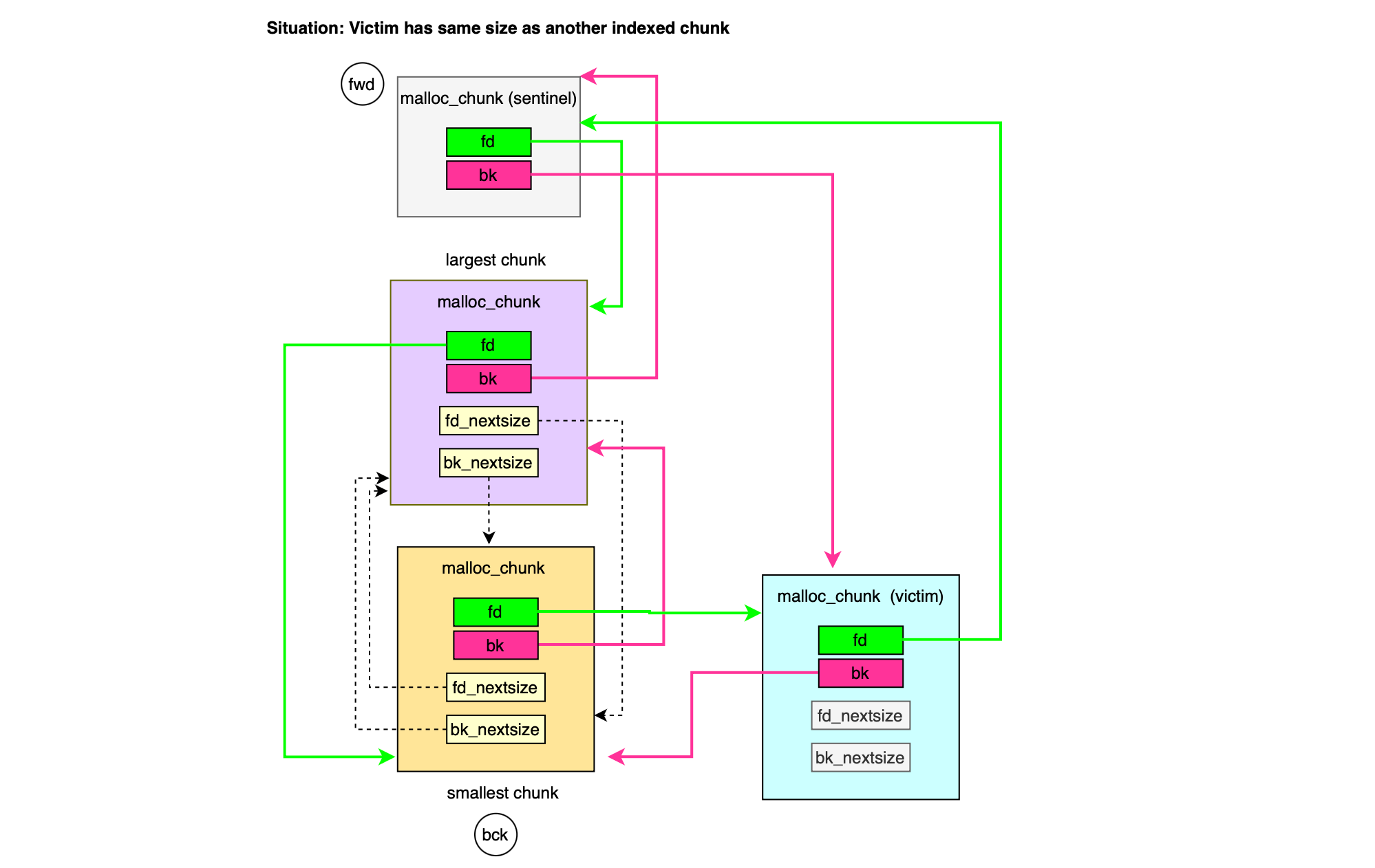

In order to simplify the implementation of the doubly linked list, the allocator uses a sentinel node, a dummy node placed at the front of each doubly linked list (associated with a bin index or size range); the bin node points to the head and tail nodes of the list. The sentinel node does not contain any data; it consists only of a forward pointer fd and a backward pointer bk, requiring two pointers for each bin (minus one, since bin 0 does not exist), i.e.,2 × (NBINS – 1).

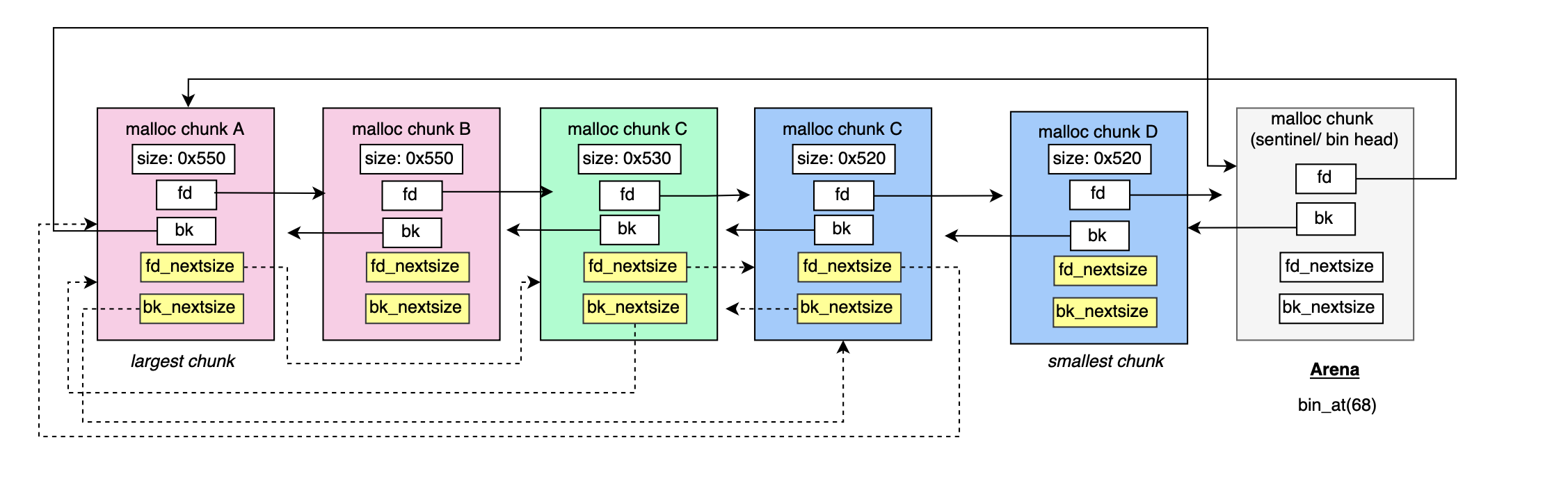

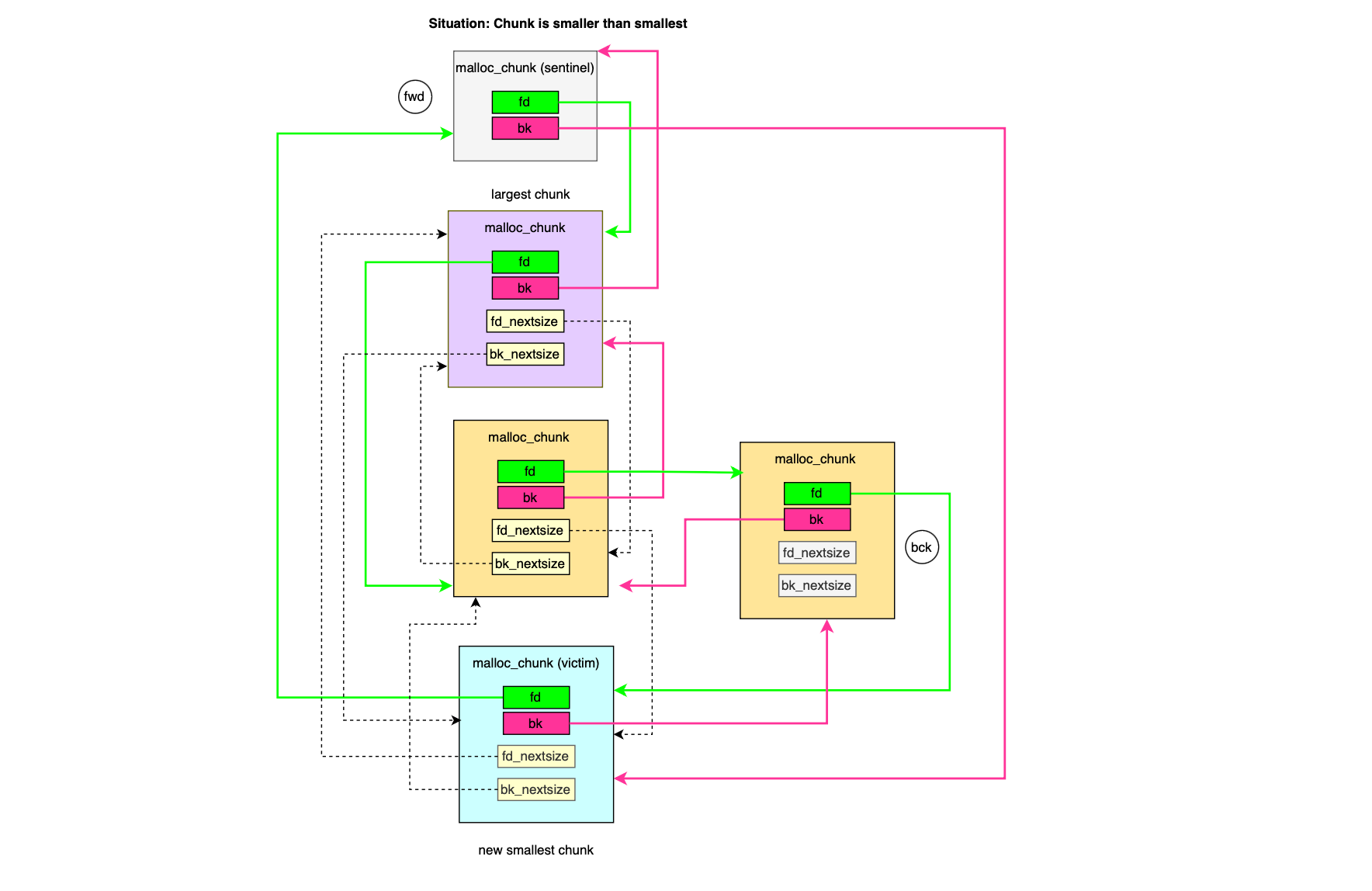

The forward and backward pointers reference the largest and smallest malloc chunks indexed in the bin (index traversal by size is only relevant for large bin indexes). If the header or sentinel node points to itself, then the doubly linked list is empty.

To speed up traversal of bin indexes when several chunks of the same size are adjacent (and would otherwise require few steps), the allocator introduces another type of doubly linked list, represented by fd_nextsize and bk_nextsize. fd_nextsize and bk_nextsize allow skipping over chunks of identical size, as they point directly to the next smaller/larger chunk available. This is illustrated below.

When memory is allocated, the allocator move past the header of size CHUNK_HDR_SZ and return the obtained memory address to the user:

#define chunk2mem(p) ((void*)((char*)(p) + CHUNK_HDR_SZ))When memory is freed, the allocator retrieves the chunk's header as follows :

#define mem2chunk(mem) ((mchunkptr)((char*)(mem) - CHUNK_HDR_SZ))

As we will cover later, the following macros are extensively used during memory allocation and deallocation.

While reading the source code of malloc or posts discussing its internals, you may come across something confusing (e.g https://stackoverflow.com/questions/49343113/understand-a-macro-to-convert-user-requested-size-to-a-malloc-usable-size). The memory overhead used to store chunk metadata on 64-bit system is by default 16 bytes, or 2 words (mchunk_prev_size and mchunk_size). However, when you look at the macro used to map the request size to include the overhead:

#define request2size(req) \

(((req) + SIZE_SZ + MALLOC_ALIGN_MASK < MINSIZE) ? \

MINSIZE : \

((req) + SIZE_SZ + MALLOC_ALIGN_MASK) & ~MALLOC_ALIGN_MASK)

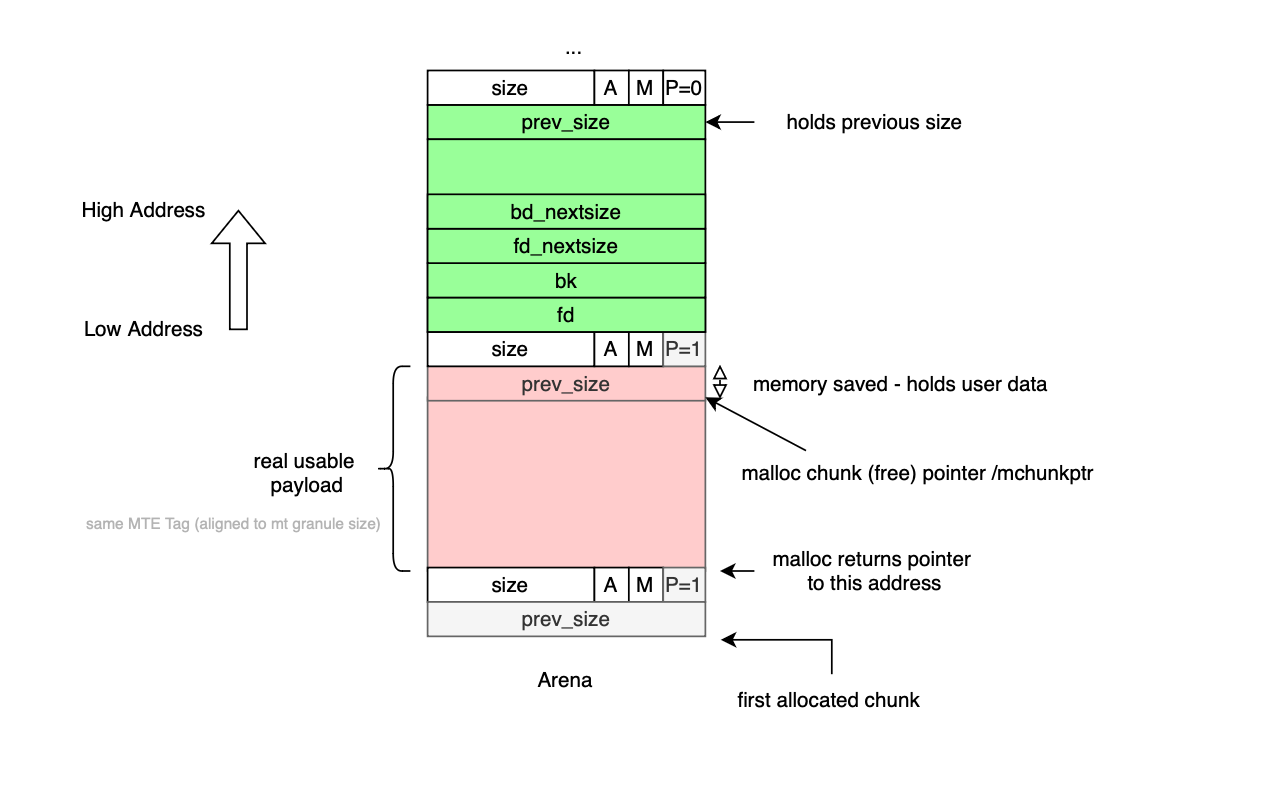

You get confused. Why does it only add SIZE_SZ (8 bytes in 64-bit systems) and not 2 * SIZE_SZ? This is because in worst case scenario, the SIZE_SZ previous size is borrowed from next chunk as anyway it would not be used until the previous chunk is free; thus the allocator only needs to guarantee size for SIZE_SZ.

The footer of the current chunk, that is, the prev_size field, is stored as part of the next chunk's header and is never used when previous chunk is in use so why not use it when possible and use the 8 bytes of prev_size to complete the user memory if needed.

Let's look at the macro memsize(p), which returns the real usable data (very important when Memory Tagging or MT is enabled).

/* This is the size of the real usable data in the chunk. Not valid for

dumped heap chunks. */

#define memsize(p) \

(__MTAG_GRANULE_SIZE > SIZE_SZ && __glibc_unlikely(mtag_enabled) ? \

chunksize(p) - CHUNK_HDR_SZ : \

chunksize(p) - CHUNK_HDR_SZ + (chunk_is_mmapped(p) ? 0 : SIZE_SZ))

Let's first consider the case where memory tagging is not enabled. If the chunk is served by mmap then the usable size is chunksize(p) - CHUNK_HDR_SZ as in this case binning is not used. On the other hand, if the chunk is subject to binning, then the real usable size is chunksize(p) - CHUNK_HDR_SZ + SIZE_SZ as SIZE_SZ can be borrowed in this case.

Now, if memory tagging is enabled and the granule size is larger than SIZE_SZ, it is not safe to return chunksize(p) - CHUNK_HDR_SZ + SIZE_SZ. Instead, the real usable memory is just chunksize(p) - CHUNK_HDR_SZ. This is because when __MTAG_GRANULE_SIZE > SIZE_SZ, the size and prev_size fields would not be in different granules (note that the initial size is always aligned to the granule size before calling the request2size macro and __MTAG_GRANULE_SIZE is multiple of SIZE_SZ , so the user will never be deprived of usable memory here).

On the other hand, if __MTAG_GRANULE_SIZE < SIZE_SZ, it is safe to return chunksize(p) - CHUNK_HDR_SZ + SIZE_SZ. The reason is that if __MTAG_GRANULE_SIZE < SIZE_SZ, then SIZE_SZ is necessarily a multiple of __MTAG_GRANULE_SIZE (since both are power of 2 and recall that \( 2^{x+n} =2^{x} \times 2^{n} \). Sooo we are good.

Memory allocation in glibc is organized using arenas; regions of memory that manage chunks. There are two types of arenas: main and thread specific arenas.

Main arena uses the traditional heap via sbrk() to grow memory (it can also use mmap in certain scenarios and thus can become non contiguous; more on that later). Thread specific arenas in contrast use mmap() to allocate space for malloc chunks. Memory or heap regions in thread arenas are chained together as mmap() may return discontinuous heap regions. As we will cover in a future section, when a heap is not used anymore, the backed physical memory or pages would be handed back to the kernel. Thread arenas are limited to 8 x cores in 64-bit systems.

Each arena is described by a malloc_state struct:

struct malloc_state

{

/* Serialize access. */

__libc_lock_define (, mutex);

/* Flags (formerly in max_fast). */

int flags;

/* Set if the fastbin chunks contain recently inserted free blocks. */

/* Note this is a bool but not all targets support atomics on booleans. */

int have_fastchunks;

/* Fastbins */

mfastbinptr fastbinsY[NFASTBINS];

/* Base of the topmost chunk -- not otherwise kept in a bin */

mchunkptr top;

/* The remainder from the most recent split of a small request */

mchunkptr last_remainder;

/* Normal bins packed as described above */

mchunkptr bins[NBINS * 2 - 2];

/* Bitmap of bins */

unsigned int binmap[BINMAPSIZE];

/* Linked list */

struct malloc_state *next;

/* Linked list for free arenas. Access to this field is serialized

by free_list_lock in arena.c. */

struct malloc_state *next_free;

/* Number of threads attached to this arena. 0 if the arena is on

the free list. Access to this field is serialized by

free_list_lock in arena.c. */

INTERNAL_SIZE_T attached_threads;

/* Memory allocated from the system in this arena. */

INTERNAL_SIZE_T system_mem;

INTERNAL_SIZE_T max_system_mem;

};__libc_lock_define (, mutex) is a synchronization primitive, a mutex, that serializes access to the arena. If one thread holds it, other threads may be suspended until the lock is released by the owner (different from a spinlock). int have_fastchunks indicates whether there are fastbin chunks available; when a chunk is returned to malloc and turns out that the size fits for fast bin index, malloc would set atomically have_fastchunks to make the chunk available to use for next allocations. have_fastchunks is represented using int rather than a bool as atomic booleans are not supported on all targets.

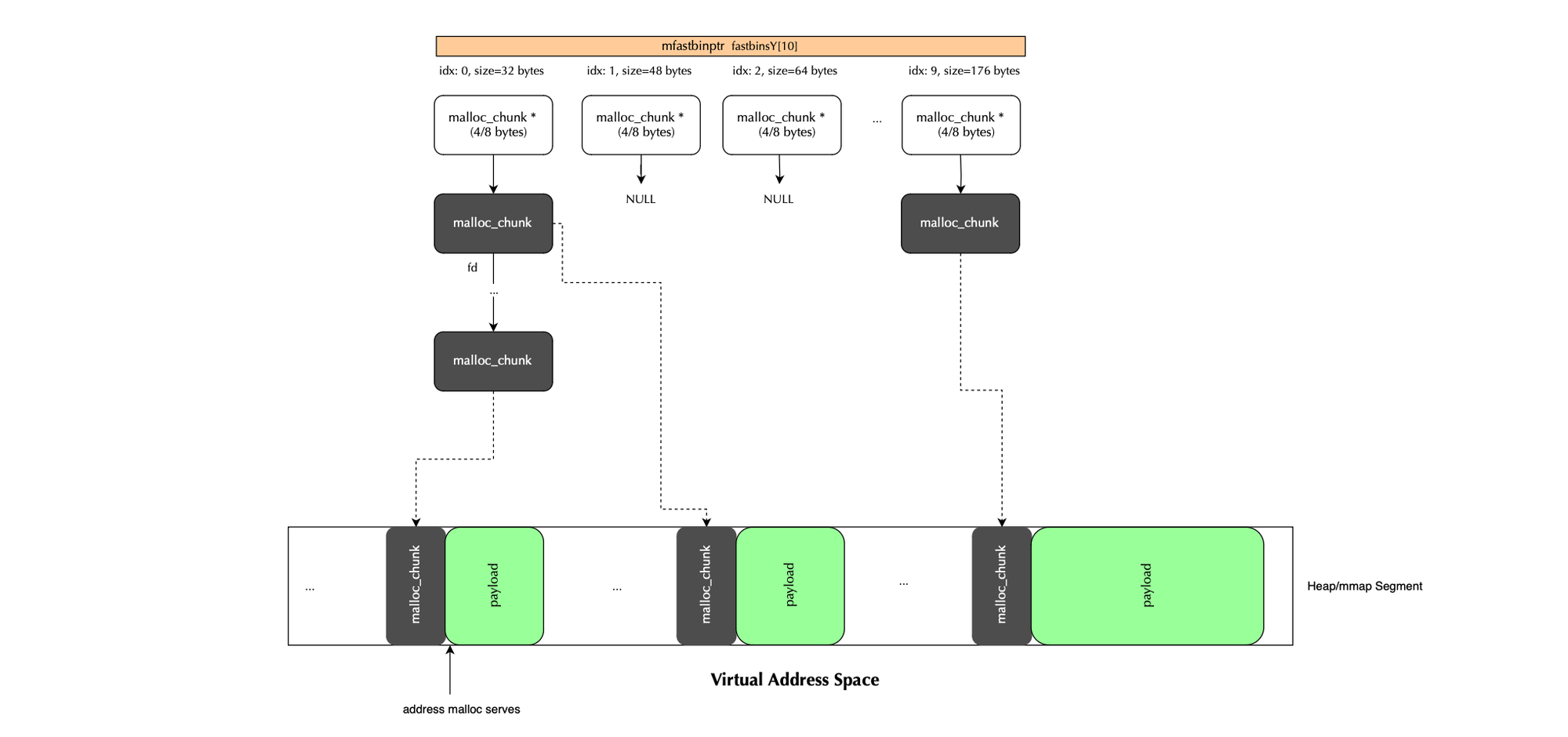

mfastbinptr fastbinsY[NFASTBINS]is the array of fastbin lists (used for small, quick allocations). It uses LIFO semantic and is not coalesced immediately; only during malloc consolidation which is triggered using malloc_consolidate().

mchunkptr top is a pointer to the top chunk; the chunk at the end of the heap that's not in a bin. mchunkptr last_remainder stores the leftover part of a chunk split. mchunkptr bins[ 2*(NBINS -1) ] is a double-linked lists for unsorted, small and large bins where even indices are fd and odd indices are bk.

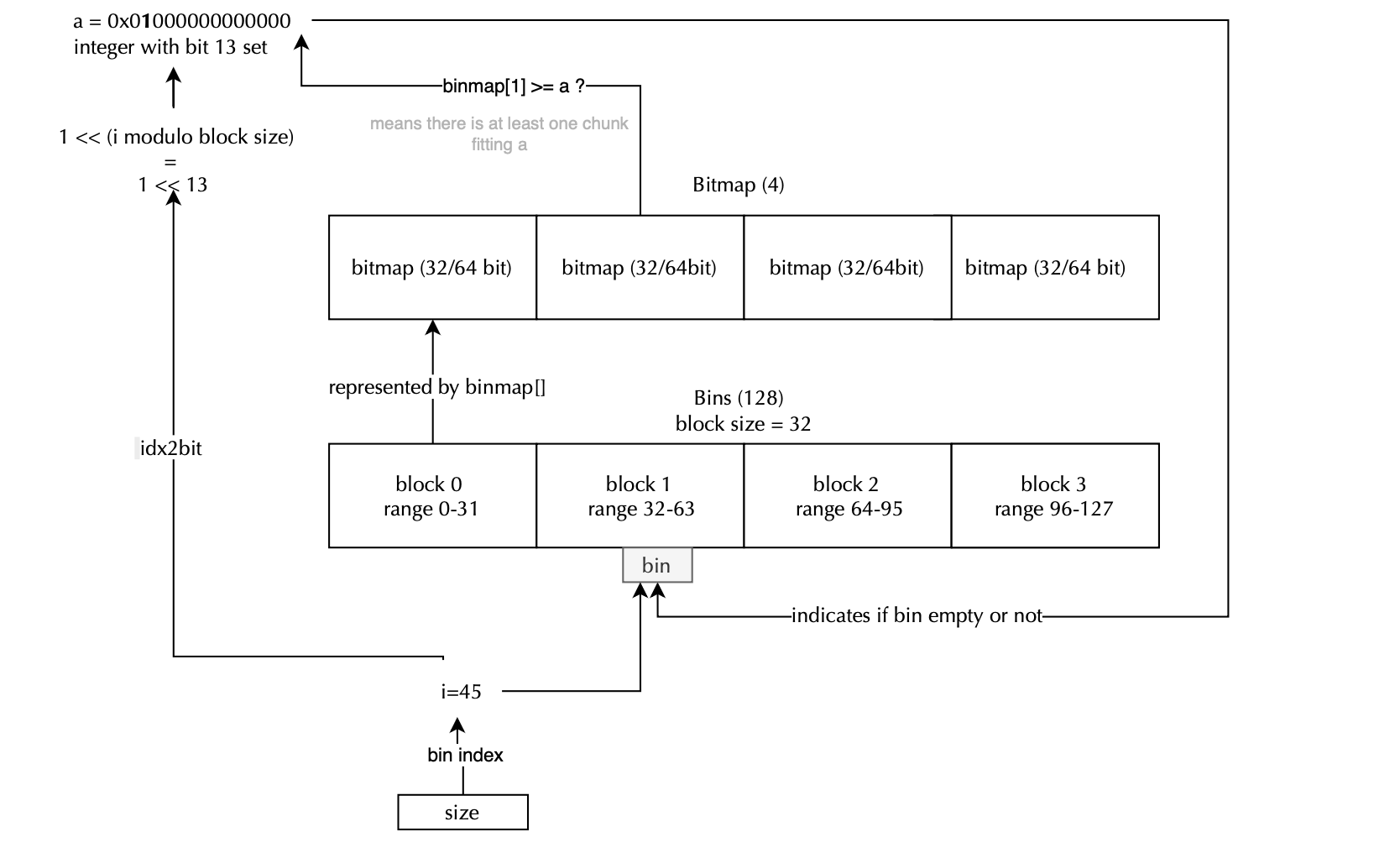

unsigned int binmap[BINMAPSIZE] is a bitmap that flags which bins are non-empty, speeding up lookup (as we will cover later; when a chunk is returned to malloc and size fits in small or large bin index, binmap is updated).

struct malloc_state *next is a pointer to the next arena used to chain multiple arenas. struct malloc_state *next_free points to the next free arena.

INTERNAL_SIZE_T attached_threads houses the number of threads currently attached to this arena (the allocator sets a limit on the number of arenas that can be created so at some points threads may share arenas). If attached_threads= 0, the arena may be reclaimed.

INTERNAL_SIZE_T system_mem is the total memory allocated from the system in this arena via sbrk or mmap (as we will cover later when a heap unused is released or malloc shrinks, top chunk system_mem is updated) and finally INTERNAL_SIZE_T max_system_mem peak memory usage by the arena during its lifetime i.e largest amount of memory observed for that arena.

Free chunk are organized into bins based on size. Each chunk is housed inside exactly one of the bins (unless the chunk is served by mmap()). Additionally when two neighboring chunks are freed, they may be merged into a larger chunk; this is refered to as consolidation; consolidation can also happens between a freed chunk and top chunk. Currently there are 4 types of indexes: fastbin, unsorted, small and large bin). As we will cover later, particularly large chunks are managed with the mmap() system call and are never indexed in bins

Fastbins are introduced for performance and cache locality. Chunks stored in fastbins are always marked as in_use until they are indexed in the unsorted bin.

Chunks of size ( including overhead) smaller than MAX_FAST_SIZE:=(80 * SIZE_SZ / 4) bytes (e.g., 32, 40, ..., 160) are all eligible for fast bins which are organized using a linked list stored in the malloc_state data structure representing the arena:

mfastbinptr fastbinsY[NFASTBINS];

All memory allocations (including overhead ) are aligned to MALLOC_ALIGNEMENT (default to 2 * sizeof(size_t) or 2*SIZE_SZ) and each chunk size is at least MIN_SIZE (default 32 bytes).

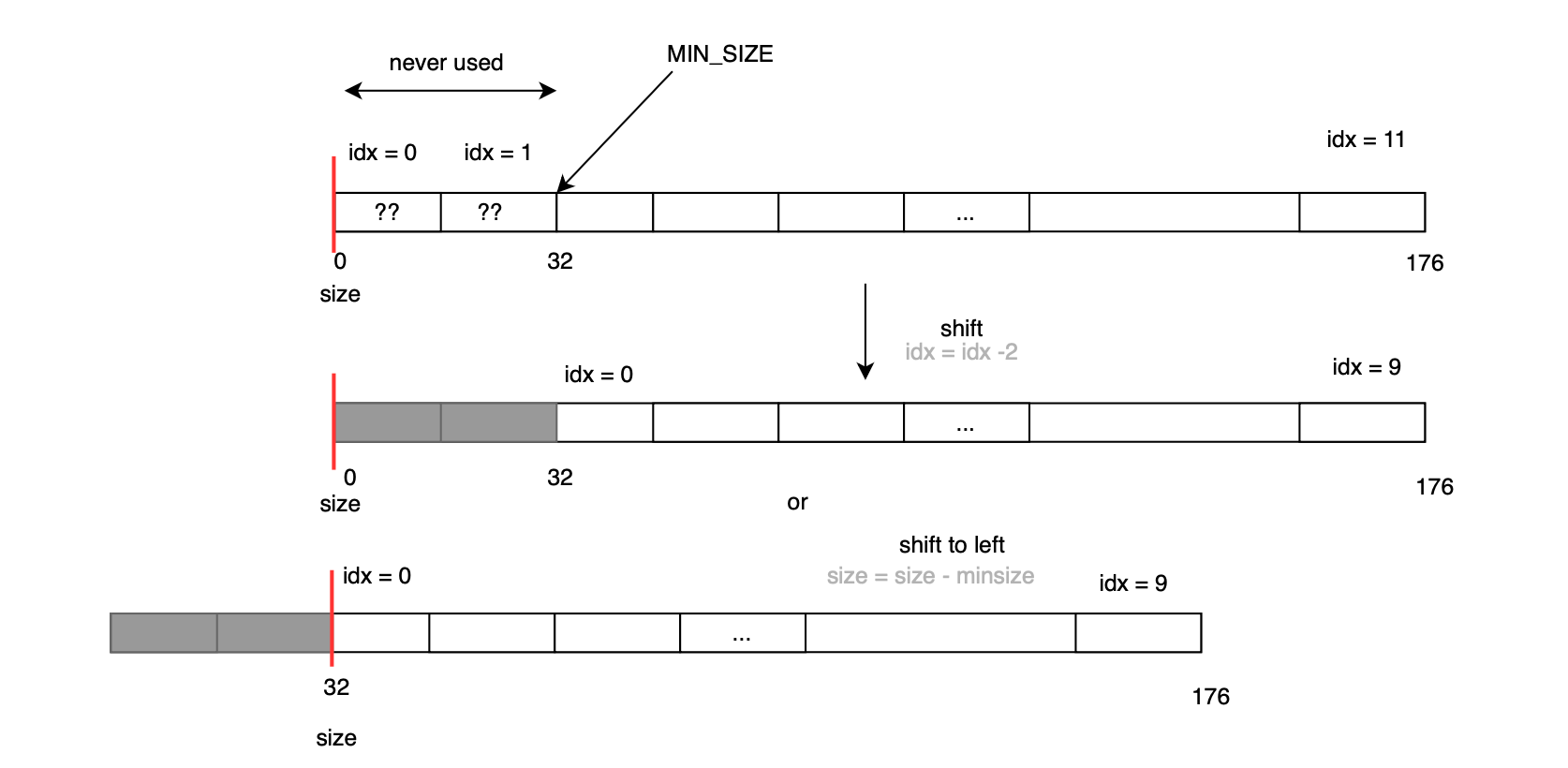

Each fast bin index maps to a specific chunk size, with sizes increasing in fixed alignment multiples (32, 48,.. 128 bytes,). In other words, the index follows the following formula size = index.MALLOC_ALIGNMENT and thus the index can be obtained by diving the size by the alignment. The index mapping is shown below:

#define fastbin_index(sz) \

((((unsigned int)(sz)) >> (SIZE_SZ == 8 ? 4 : 3)) - 2)

The macro subtract 2 because otherwise the resulting indexes 0 and 1 would never be used, as no valid chunk size maps to them (minimum size is 32); the array size can be be computed using the following macro (+ 1 as indexes starts at 0):

#define NFASTBINS (fastbin_index (request2size (MAX_FAST_SIZE)) + 1)

...

struct malloc_state

{

...

/* Fastbins */

mfastbinptr fastbinsY[NFASTBINS];

We could have also used the following indexing for fast bins which achieves the same:

index = (size - MIN_SIZE) / MALLOC_ALIGNMENT;The minimum chunk size is defined as follows:

#define MIN_CHUNK_SIZE (offsetof(struct malloc_chunk, fd_nextsize))On a 64-bit system, the minimum chunk size maps to 32 bytes. This means chunk sizes from 32 to 160 bytes fall within the fast bin range.

Finally the size for fast bin index is by default set to DEFAULT_MXFAST (64 * SIZE_SZ / 4) i.e 128 bytes on 64-bit systems (one can set it but can not go above MAX_FAST_SIZE otherwise out of bounds #define NFASTBINS (fastbin_index (request2size (MAX_FAST_SIZE)) + 1)).

#ifndef DEFAULT_MXFAST

#define DEFAULT_MXFAST (64 * SIZE_SZ / 4)

#endif

...

set_max_fast (DEFAULT_MXFAST);

...

#define set_max_fast(s) \

global_max_fast = (((size_t) (s) <= MALLOC_ALIGN_MASK - SIZE_SZ) \

? MIN_CHUNK_SIZE / 2 : ((s + SIZE_SZ) & ~MALLOC_ALIGN_MASK))

...

static __always_inline INTERNAL_SIZE_T

get_max_fast (void)

{

if (global_max_fast > MAX_FAST_SIZE)

__builtin_unreachable ();

return global_max_fast;

}

...

# int_malloc()

...

if ((unsigned long) (nb) <= (unsigned long) (get_max_fast ())) # fast path decision boundary The next category of bins is small bins which uses FIFO semantic and houses chunks of size from 32 until 1008 bytes or [32, 1024[ (there are 62 small bins in 64 bits system).

As discussed earlier, all bins (unsorted, large, and small) are housed in the bins array of the arena structure i.e malloc_state. Each bin occupies two entries in the array: the first for the forward pointer fd and the second for the backward pointer bk; basically they form a sentinel node for the double linked list. When a bin is empty, both fd and bk point to the sentinel node itself. This is illustrated below:

Small bins handle chunk sizes (request size + overhead) below MIN_LARGE_SIZE= NSMALLBINS.MALLOC_ALIGNMENT :

#define in_smallbin_range(sz) \

((unsigned long)(sz) < (unsigned long)MIN_LARGE_SIZE)

Bins are spaced by MALLOC_ALIGNMENT i.e size = index . MALLOC_ALIGNMENT. Specifically, malloc corrects the index if MALLOC_ALIGNMENT > CHUNK_HDR_SZ, i.e redefines the size indexing as size = (index-correction) . MALLOC_ALIGNMENT (honestly like this I dont' see why we need it...).

#define NBINS 128

#define NSMALLBINS 64

#define SMALLBIN_WIDTH MALLOC_ALIGNMENT

#define SMALLBIN_CORRECTION (MALLOC_ALIGNMENT > CHUNK_HDR_SZ)

#define MIN_LARGE_SIZE ((NSMALLBINS - SMALLBIN_CORRECTION) * SMALLBIN_WIDTH)

The above gives born to the following macro:

#define smallbin_index(sz) \

((SMALLBIN_WIDTH == 16 ? (((unsigned)(sz)) >> 4) : (((unsigned)(sz)) >> 3)) \

+ SMALLBIN_CORRECTION)

Small bins manage chunks of size greater than DEFAULT_MXFAST (default is 128) and smaller than MIN_LARGE_SIZE (1008 bytes =(64 - 1) x 16). For example, if we consider a chunk of size 208 bytes (on a 64-bit system) the index is (208 / 16) + 1=14, so the bin index is 14 . To find the position in the bins array, the macro used is defined (bin_at()):

#define bin_at(m, i) \

(mbinptr)(((char *)&((m)->bins[((i) - 1) * 2])) \

- offsetof(struct malloc_chunk, fd))

Here, offsetof(struct malloc_chunk, fd) is used to represent the sentinel node. As we will cover in a future section, if bin_at(..).fd = bin_at(..).bk = bin_at(..) it means the bin is empty.

In contrast to other bins, unsorted bin stores chunks with arbitrary sizes. As we will cover later, large chunks returned to malloc are not indexed in their associated bins immediatly. They are first placed in the unsorted bin index and only during traversal later (when malloc is called) the freed chunks will be indexed in their associated bins i.e large bin index.

static INTERNAL_SIZE_T

_int_free_create_chunk (mstate av, mchunkptr p, INTERNAL_SIZE_T size,

mchunkptr nextchunk, INTERNAL_SIZE_T nextsize)

{

if (nextchunk != av->top)

{

/* get and clear inuse bit */

bool nextinuse = inuse_bit_at_offset (nextchunk, nextsize);

/* consolidate forward */

if (!nextinuse) {

unlink_chunk (av, nextchunk);

size += nextsize;

} else

clear_inuse_bit_at_offset(nextchunk, 0);

mchunkptr bck, fwd;

if (!in_smallbin_range (size))

{

/* Place large chunks in unsorted chunk list. Large chunks are

not placed into regular bins until after they have

been given one chance to be used in malloc.

This branch is first in the if-statement to help branch

prediction on consecutive adjacent frees. */

bck = unsorted_chunks (av);

fwd = bck->fd;

if (__glibc_unlikely (fwd->bk != bck))

malloc_printerr ("free(): corrupted unsorted chunks");

p->fd_nextsize = NULL;

p->bk_nextsize = NULL;

}

else

{

/* Place small chunks directly in their smallbin, so they

don't pollute the unsorted bin. */

int chunk_index = smallbin_index (size);

bck = bin_at (av, chunk_index);

fwd = bck->fd;

if (__glibc_unlikely (fwd->bk != bck))

malloc_printerr ("free(): chunks in smallbin corrupted");

mark_bin (av, chunk_index);

}

p->bk = bck;

p->fd = fwd;

bck->fd = p;

fwd->bk = p;

set_head(p, size | PREV_INUSE);

set_foot(p, size);

check_free_chunk(av, p);

}

else

{

/* If the chunk borders the current high end of memory,

consolidate into top. */

size += nextsize;

set_head(p, size | PREV_INUSE);

av->top = p;

check_chunk(av, p);

}

return size;

}The small bin index starts at 2 while the bins array index 0 and 1 are occupied by the unsorted bin; the following macro is used during memory alloc/dealloc to get a pointer to the head of the double linked list (recall that the bin index =1 is different from that array bin index i.e o,1):

#define unsorted_chunks(M) (bin_at (M, 1))In contrast to small and fast bins, large bins store chunks within a specific range and not of same size (as it is completely impractical to maintain one bin for each size). In order to speed up the indexing and searching, two pointers fd_nextsize and bk_nextsize are introduced to traverse group of same sized chunks.

During allocation, bins are traversed in ascendant order (i.e find smallest that fits) through bk_nextsize until a chunk fit the request size and similarly while indexing (i.e bins coming from unsorted bin) fd_nextsize is used to traverse the double linked list and find the best position to place a given bin.

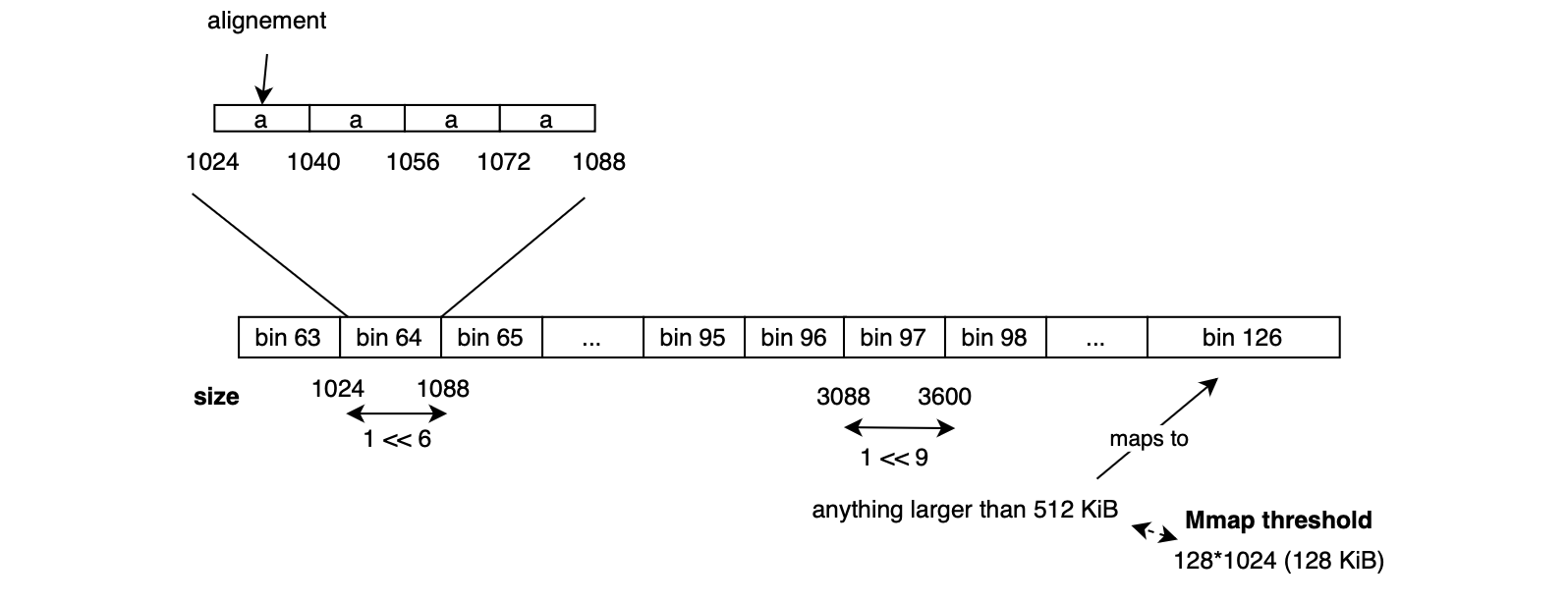

Malloc chunks with size larger than or equal to MIN_LARGE_SIZE bytes ( default 1024) are indexed in large bin index. The indexation logic is slightly more complex and is shown below:

#define largebin_index_64(sz) \

(((((unsigned long) (sz)) >> 6) <= 48) ? 48 + (((unsigned long) (sz)) >> 6) :\

((((unsigned long) (sz)) >> 9) <= 20) ? 91 + (((unsigned long) (sz)) >> 9) :\

((((unsigned long) (sz)) >> 12) <= 10) ? 110 + (((unsigned long) (sz)) >> 12) :\

((((unsigned long) (sz)) >> 15) <= 4) ? 119 + (((unsigned long) (sz)) >> 15) :\

((((unsigned long) (sz)) >> 18) <= 2) ? 124 + (((unsigned long) (sz)) >> 18) :\

126)Basically, in contrast to the small bin indexing approach, it does not increase the index every MALLOC_ALIGNMENT, it groups sizes by powers of two, for example, it updates the index once every 64 bytes if the chunk size falls in the range [1024, 3072] ( i.e every sz >> 6, which is by the way a multiple of MALLOC_ALIGNMENT) , if we consider a chunk of size 0x520 or 1312 then the bin index is 1312/64 + 48 = 68 (48 is an offset to make sure that we land at at at least one index after latest small bin index 64 = 1024/64 + 48). Chunks with size larger than >= 512 KiB falls into bin 126 i.e array positions (126‑1)*2 = 250 and 251 insidemchunkptr bins[NBINS*2 ‑ 2].

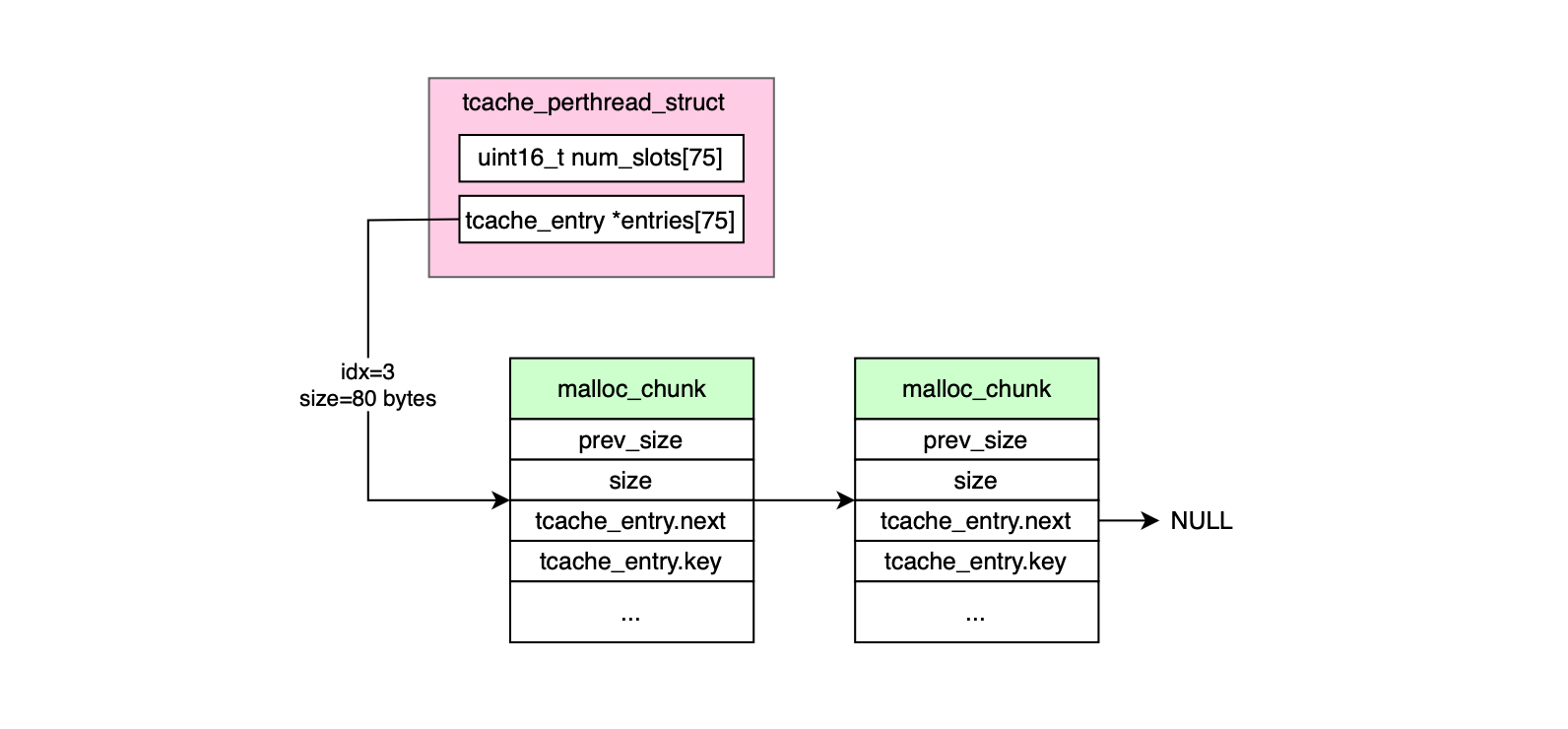

tcache_perthread_struct is a per-thread data structure that maintains thread-local bins. This is particularly efficient when several threads share the same arena and one wants to avoid the synchronization tax needed to operate on shared malloc state structure thereby improving performance in multi-threaded scenarios by reducing contention and latency for small, frequent allocations.

typedef struct tcache_perthread_struct

{

uint16_t num_slots[TCACHE_MAX_BINS];

tcache_entry *entries[TCACHE_MAX_BINS];

} tcache_perthread_struct;

The bins are stored in a specific array, similarly to previous glibc versions (in new glibc version, logarithmic binning was introduced for large tcache chunks):

tcache_entry *entries[TCACHE_MAX_BINS];

...

# define TCACHE_SMALL_BINS 64

# define TCACHE_LARGE_BINS 12 /* Up to 4M chunks */

# define TCACHE_MAX_BINS (TCACHE_SMALL_BINS + TCACHE_LARGE_BINS)

# define MAX_TCACHE_SMALL_SIZE tidx2usize (TCACHE_SMALL_BINS-1) The number of free blocks that can be added inside each bin is indicated in num_slots (default is 7):

typedef struct tcache_perthread_struct

{

uint16_t num_slots[TCACHE_MAX_BINS];

tcache_entry *entries[TCACHE_MAX_BINS];

} tcache_perthread_struct;

...

/* This is another arbitrary limit, which tunables can change. Each

tcache bin will hold at most this number of chunks. */

# define TCACHE_FILL_COUNT 7

There are basically 64 tcache small bins (housing chunks of the same size) and 12 tcache large bins (where each bin houses chunks of various size). The max bins index is then given by TCACHE_MAX_BINS = 75.

Each tcache bin (whether small or large) is a singly linked list. The nodes in that list are struct tcache_entry objects:

typedef struct tcache_entry {

struct tcache_entry *next; // Pointer to the next free chunk in the bin

uintptr_t key; // Used to detect double frees (security/integrity check)

} tcache_entry;

The key field helps detecting double frees by storing a thread specific value:

static void

tcache_key_initialize (void)

{

/* We need to use the _nostatus version here, see BZ 29624. */

if (__getrandom_nocancel_nostatus_direct (&tcache_key, sizeof(tcache_key),

GRND_NONBLOCK)

!= sizeof (tcache_key))

{

tcache_key = random_bits ();

#if __WORDSIZE == 64

tcache_key = (tcache_key << 32) | random_bits ();

#endif

}

} When a chunk is placed into the tcache, its user area (starting just after the size field) is reused to store the tcache_entry.

static __always_inline void

tcache_put_n (mchunkptr chunk, size_t tc_idx, tcache_entry **ep, bool mangled)

{

tcache_entry *e = (tcache_entry *) chunk2mem (chunk);

/* Mark this chunk as "in the tcache" so the test in __libc_free will

detect a double free. */

e->key = tcache_key;

if (!mangled)

{

e->next = PROTECT_PTR (&e->next, *ep);

*ep = e;

}

else

{

e->next = PROTECT_PTR (&e->next, REVEAL_PTR (*ep));

*ep = PROTECT_PTR (ep, e);

}

--(tcache->num_slots[tc_idx]);

}The chunk2mem macro used to get the user pointer from a chunk is:

#define chunk2mem(p) ((void*)((char*)(p) + CHUNK_HDR_SZ))For small tcache bins (indices 0...63), the indexing is straightforward and linear, very much like the old fastbins (offset by MINSIZE to align the minimum chunk to index 0):

# define csize2tidx(x) (((x) - MINSIZE) / MALLOC_ALIGNMENT)Here the indexing follows the formula size = MINSIZE + index.MALLOC_ALIGNEMT, where a is MALLOC_ALIGNMENT . For instance if i = 0, then size = MINSIZE = 32, if i = 1, then size = 32 + 16 = 48, i = 2, then size = 48 + 16 = 64 ect, thus similar to earlier versions, there are 64 small bins (up to 1024 bytes) and 12 extra bins for large chunks (up to 4194303 bytes) as listed below.

# define TCACHE_SMALL_BINS 64

# define TCACHE_LARGE_BINS 12 /* Up to 4M chunks */

# define TCACHE_MAX_BINS (TCACHE_SMALL_BINS + TCACHE_LARGE_BINS)

# define MAX_TCACHE_SMALL_SIZE tidx2usize (TCACHE_SMALL_BINS-1)

The insertion inside the tcache is shown below:

size_t tc_idx = csize2tidx (size);

if (__glibc_likely (tc_idx < TCACHE_SMALL_BINS))

{

if (__glibc_likely (tcache->num_slots[tc_idx] != 0))

return tcache_put (p, tc_idx);

}

else

{

tc_idx = large_csize2tidx (size);

if (size >= MINSIZE

&& !chunk_is_mmapped (p)

&& __glibc_likely (tcache->num_slots[tc_idx] != 0))

return tcache_put_large (p, tc_idx);

}

}

Small chunks are indexed similar to previous versions, that is, one bin index houses chunks of the same size.

static __always_inline void

tcache_put (mchunkptr chunk, size_t tc_idx)

{

tcache_put_n (chunk, tc_idx, &tcache->entries[tc_idx], false);

}

...

/* Caller must ensure that we know tc_idx is valid and there's room

for more chunks. */

static __always_inline void

tcache_put_n (mchunkptr chunk, size_t tc_idx, tcache_entry **ep, bool mangled)

{

tcache_entry *e = (tcache_entry *) chunk2mem (chunk);

/* Mark this chunk as "in the tcache" so the test in __libc_free will

detect a double free. */

e->key = tcache_key;

if (!mangled)

{

e->next = PROTECT_PTR (&e->next, *ep);

*ep = e;

}

else

{

e->next = PROTECT_PTR (&e->next, REVEAL_PTR (*ep));

*ep = PROTECT_PTR (ep, e);

}

--(tcache->num_slots[tc_idx]);

}On the other hand, larger chunks don’t grow linearly and instead they use the logarithmic mapping (large_csize2tidx). Specifically, it uses __builtin_clz, returning the number of leading 0-bits. The routine is listed below:

static __always_inline size_t

large_csize2tidx(size_t nb)

{

size_t idx = TCACHE_SMALL_BINS

+ __builtin_clz (MAX_TCACHE_SMALL_SIZE)

- __builtin_clz (nb);

return idx;

}The tcache in glibc >= 2.42 can now hold chunks up to 4 MiB (4194303 bytes), with the maximum valid tcache index being 75. At runtime, when setting the max bytes for tcache, glibc ensures tc_idx < TCACHE_MAX_BINS thereby avoiding out-of-bounds access.

static __always_inline int

do_set_tcache_max (size_t value)

{

size_t nb = request2size (value);

size_t tc_idx = csize2tidx (nb);

/* To check that value is not too big and request2size does not return an

overflown value. */

if (value > nb)

return 0;

if (nb > MAX_TCACHE_SMALL_SIZE)

tc_idx = large_csize2tidx (nb);

LIBC_PROBE (memory_tunable_tcache_max_bytes, 2, value, mp_.tcache_max_bytes);

if (tc_idx < TCACHE_MAX_BINS)

{

if (tc_idx < TCACHE_SMALL_BINS)

mp_.tcache_small_bins = tc_idx + 1;

mp_.tcache_max_bytes = nb;

return 1;

}

return 0;

}The allocator's state initialization is performed using malloc_init_state(). As shown below, unlike fast bins, which do not require explicit initialization, the bins array must be explicitly initialized. This involves setting the header and tail pointers to point to the sentinel bin itself, i.e., bin->fd = bin->bk = bin, as previously explained.

static void

malloc_init_state (mstate av)

{

int i;

mbinptr bin;

/* Establish circular links for normal bins */

for (i = 1; i < NBINS; ++i)

{

bin = bin_at (av, i);

bin->fd = bin->bk = bin;

}

#if MORECORE_CONTIGUOUS

if (av != &main_arena)

#endif

set_noncontiguous (av);

if (av == &main_arena)

set_max_fast (DEFAULT_MXFAST);

atomic_store_relaxed (&av->have_fastchunks, false);

av->top = initial_top (av);

}The maximum chunk size handled via fast bins is also set during initialization using set_max_fast (with a default of 128 bytes, i.e fast bins index chunk of size ranging from 32 bytes to 128 bytes). The have_fastchunks flag is a boolean represented as an int (since atomic operations on booleans are not always possible) and indicates whether there are any fastbin chunks available. It is set atomically using relaxed memory ordering, which is sufficient here as one only needs to defeat compiler optimizations. Finally, the top chunk of the arena is initialized to point at unsorted bin.

The entry point for memory allocation in glibc is __libc_malloc(). If tcache is not enabled it delegates directly to __libc_malloc2().

void *

__libc_malloc (size_t bytes)

{

#if USE_TCACHE

size_t nb = checked_request2size (bytes);

if (nb == 0)

{

__set_errno (ENOMEM);

return NULL;

}

if (nb < mp_.tcache_max_bytes)

{

size_t tc_idx = csize2tidx (nb);

if(__glibc_unlikely (tcache == NULL))

return tcache_malloc_init (bytes);

if (__glibc_likely (tc_idx < TCACHE_SMALL_BINS))

{

if (tcache->entries[tc_idx] != NULL)

return tag_new_usable (tcache_get (tc_idx));

}

else

{

tc_idx = large_csize2tidx (nb);

void *victim = tcache_get_large (tc_idx, nb);

if (victim != NULL)

return tag_new_usable (victim);

}

}

#endif

return __libc_malloc2 (bytes);

}If the tcache is enabled, the size is mapped to include the overhead and ensure alignment size_t nb = checked_request2size (bytes);. If memory tagging is enabled, the size is first aligned to __MTAG_GRANULE_SIZE before delegating to request2size (req);.

static __always_inline size_t

checked_request2size (size_t req) __nonnull (1)

{

_Static_assert (PTRDIFF_MAX <= SIZE_MAX / 2,

"PTRDIFF_MAX is not more than half of SIZE_MAX");

if (__glibc_unlikely (req > PTRDIFF_MAX))

return 0;

/* When using tagged memory, we cannot share the end of the user

block with the header for the next chunk, so ensure that we

allocate blocks that are rounded up to the granule size. Take

care not to overflow from close to MAX_SIZE_T to a small

number. Ideally, this would be part of request2size(), but that

must be a macro that produces a compile time constant if passed

a constant literal. */

if (__glibc_unlikely (mtag_enabled))

{

/* Ensure this is not evaluated if !mtag_enabled, see gcc PR 99551. */

asm ("");

req = (req + (__MTAG_GRANULE_SIZE - 1)) &

~(size_t)(__MTAG_GRANULE_SIZE - 1);

}

return request2size (req);

}The macro request2size() is defined below. It adds SIZE_SZ overhead and round up to next aligned value (ensuring that initial size + overhead + alignment -1 is at least MINSIZE).

#define request2size(req) \

(((req) + SIZE_SZ + MALLOC_ALIGN_MASK < MINSIZE) ? \

MINSIZE : \

((req) + SIZE_SZ + MALLOC_ALIGN_MASK) & ~MALLOC_ALIGN_MASK)Next, still on the tcache allocation path, if the the mapped size is smaller than configured size if (nb < mp_.tcache_max_bytes) , the thread index is inferred using the macro csize2tidx:

# define csize2tidx(x) (((x) - MINSIZE) / MALLOC_ALIGNMENT)If tcache is NULL , it means there is naturally no chunk to get served from the tcache. In this case, the tcache is initialized; which consists of allocating memory for housing a data structure of type tcache_perthread_struct and setting the thread local variable tcache. Then the user get a memory chunk from __libc_malloc2 (bytes) (will be covered in a moment).

static void

tcache_init (void)

{

if (tcache_shutting_down)

return;

/* Check minimum mmap chunk is larger than max tcache size. This means

mmap chunks with their different layout are never added to tcache. */

if (MAX_TCACHE_SMALL_SIZE >= GLRO (dl_pagesize) / 2)

malloc_printerr ("max tcache size too large");

size_t bytes = sizeof (tcache_perthread_struct);

tcache = (tcache_perthread_struct *) __libc_malloc2 (bytes);

if (tcache != NULL)

{

memset (tcache, 0, bytes);

for (int i = 0; i < TCACHE_MAX_BINS; i++)

tcache->num_slots[i] = mp_.tcache_count;

}

}

...

static __thread tcache_perthread_struct *tcache = NULL;Now if the tcache is not null, then there is possibly a free chunk that can be served. This is achieved by checking if the associated entry tcache->entries[tc_idx] is NULL.

if (__glibc_likely (tc_idx < TCACHE_SMALL_BINS))

{

if (tcache->entries[tc_idx] != NULL)

return tag_new_usable (tcache_get (tc_idx));

}A pointer to user chunk is obtained using the following macro tcache_get (tc_idx):

static __always_inline void *

tcache_get (size_t tc_idx)

{

return tcache_get_n (tc_idx, & tcache->entries[tc_idx], false);

}As listed below, tcache_get_n() removes the chunk from the linked list while coping with pointer mangling.

static __always_inline void *

tcache_get_n (size_t tc_idx, tcache_entry **ep, bool mangled)

{

tcache_entry *e;

if (!mangled)

e = *ep;

else

e = REVEAL_PTR (*ep);

if (__glibc_unlikely (misaligned_mem (e)))

malloc_printerr ("malloc(): unaligned tcache chunk detected");

void *ne = e == NULL ? NULL : REVEAL_PTR (e->next);

if (!mangled)

*ep = ne;

else

*ep = PROTECT_PTR (ep, ne);

++(tcache->num_slots[tc_idx]);

e->key = 0;

return (void *) e;

}If memory tagging is enabled, tag_new_usable() is invoked to create a new tag __libc_mtag_new_tag and tag the usable memory chunk (memsize()).

static __always_inline void *

tag_new_usable (void *ptr)

{

if (__glibc_unlikely (mtag_enabled) && ptr)

{

mchunkptr cp = mem2chunk(ptr);

ptr = __libc_mtag_tag_region (__libc_mtag_new_tag (ptr), memsize (cp));

}

return ptr;

}If the tcache chunk is large i.e the following condition does not hold:

if (__glibc_likely (tc_idx < TCACHE_SMALL_BINS))The tcache linked list is traversed to serve the first chunk larger or equal to the chunk size.

static __always_inline void *

tcache_get_large (size_t tc_idx, size_t nb)

{

tcache_entry **entry;

bool mangled = false;

entry = tcache_location_large (nb, tc_idx, &mangled);

if ((mangled && REVEAL_PTR (*entry) == NULL)

|| (!mangled && *entry == NULL))

return NULL;

return tcache_get_n (tc_idx, entry, mangled);

}

static __always_inline tcache_entry **

tcache_location_large (size_t nb, size_t tc_idx, bool *mangled)

{

tcache_entry **tep = &(tcache->entries[tc_idx]);

tcache_entry *te = *tep;

while (te != NULL

&& __glibc_unlikely (chunksize (mem2chunk (te)) < nb))

{

tep = & (te->next);

te = REVEAL_PTR (te->next);

*mangled = true;

}

return tep;

}The allocator ensures that the tcache bins list is always maintained in order; relevant for large tcache chunks only.

static __always_inline tcache_entry **

tcache_location_large (size_t nb, size_t tc_idx, bool *mangled)

{

tcache_entry **tep = &(tcache->entries[tc_idx]);

tcache_entry *te = *tep;

while (te != NULL

&& __glibc_unlikely (chunksize (mem2chunk (te)) < nb))

{

tep = & (te->next);

te = REVEAL_PTR (te->next);

*mangled = true;

}

return tep;

}

static __always_inline void

tcache_put_large (mchunkptr chunk, size_t tc_idx)

{

tcache_entry **entry;

bool mangled = false;

entry = tcache_location_large (chunksize (chunk), tc_idx, &mangled);

return tcache_put_n (chunk, tc_idx, entry, mangled);

}Now if the tcache is not enabled or simply can't serve the user request, the call is delegated to __libc_malloc2 (bytes);

Before discussing __libc_malloc2, let's quickly go through the pointer masking used in tcache and fastbins. In order to obfuscate pointers of single linked-list data structures from corruption, the allocator uses pointer mangling proposed by CheckPoint:

/* Safe-Linking:

Use randomness from ASLR (mmap_base) to protect single-linked lists

of Fast-Bins and TCache. That is, mask the "next" pointers of the

lists' chunks, and also perform allocation alignment checks on them.

This mechanism reduces the risk of pointer hijacking, as was done with

Safe-Unlinking in the double-linked lists of Small-Bins.

It assumes a minimum page size of 4096 bytes (12 bits). Systems with

larger pages provide less entropy, although the pointer mangling

still works. */

#define PROTECT_PTR(pos, ptr) \

((__typeof (ptr)) ((((size_t) pos) >> 12) ^ ((size_t) ptr)))

#define REVEAL_PTR(ptr) PROTECT_PTR (&ptr, ptr)Relying on the randomness provided by the ASLR, the heap address of the next pointer field (i.e the address of the tcache_entry->next field) is shifted by 12 bits and xor ed with the next pointer (that we want to mask, that is, the next chunk in the free list). The mmap_base or brk base is randomized by the kernel using the following offset random_base = ((1 << rndbits) - 1) << PAGE_SHIFT; providing 2^rndbits uniformly possible heap start addresses, each aligned to a page.

\(\text{PROTECT_PTR}(p, x) = \left( \left\lfloor \frac{p}{2^{12}} \right\rfloor \oplus x \right) \)

\(\text{REVEAL_PTR}(x') = \text{PROTECT_PTR}(p, x') = \left( \left\lfloor \frac{p}{2^{12}} \right\rfloor \oplus x' \right) \)

Thus the key is basically the heap address shifted and xored meaning a heap leak defeats the above protection. In order to reveal a pointer housed inside a linked list entry, the address of the next pointer is used, as bitwise XOR operations are involutive i.e (x XOR k) XOR k = x.

If tcache cannot serve the request, control is passed to __libc_malloc2, which continues allocation using the arena:

static void * __attribute_noinline__

__libc_malloc2 (size_t bytes)

{

mstate ar_ptr;

void *victim;

if (SINGLE_THREAD_P)

{

victim = tag_new_usable (_int_malloc (&main_arena, bytes));

assert (!victim || chunk_is_mmapped (mem2chunk (victim)) ||

&main_arena == arena_for_chunk (mem2chunk (victim)));

return victim;

}

arena_get (ar_ptr, bytes);

victim = _int_malloc (ar_ptr, bytes);

/* Retry with another arena only if we were able to find a usable arena

before. */

if (!victim && ar_ptr != NULL)

{

LIBC_PROBE (memory_malloc_retry, 1, bytes);

ar_ptr = arena_get_retry (ar_ptr, bytes);

victim = _int_malloc (ar_ptr, bytes);

}

if (ar_ptr != NULL)

__libc_lock_unlock (ar_ptr->mutex);

victim = tag_new_usable (victim);

assert (!victim || chunk_is_mmapped (mem2chunk (victim)) ||

ar_ptr == arena_for_chunk (mem2chunk (victim)));

return victim;

}In case we are in a single thread scenario, there is no potential race condition. The user chunk is allocated and tagged if applicable victim = tag_new_usable (_int_malloc (&main_arena, bytes));, next either the allocation happened i.e the pointer is not null, otherwise the chunk is either mmapped or belongs to the main arena (which can be inferred by inspecting the size field).

#define chunk_main_arena(p) (((p)->mchunk_size & NON_MAIN_ARENA) == 0)

...

static __always_inline struct malloc_state *

arena_for_chunk (mchunkptr ptr)

{

return chunk_main_arena (ptr) ? &main_arena : heap_for_ptr (ptr)->ar_ptr;

}In case the program supports multiple threads, arena_get() is invoked to acquire the arena's mutex associated with the underlying thread.

#define arena_get(ptr, size) do { \

ptr = thread_arena; \

arena_lock (ptr, size); \

} while (0)

#define arena_lock(ptr, size) do { \

if (ptr) \

__libc_lock_lock (ptr->mutex); \

else \

ptr = arena_get2 ((size), NULL); \

} while (0)

If a thread is not yet attached to any arena, the allocator will assign it to an existing arena if possible, or create a new one and associate the thread with it.

static mstate

arena_get2 (size_t size, mstate avoid_arena)

{

mstate a;

static size_t narenas_limit;

a = get_free_list ();

if (a == NULL)

{

/* Nothing immediately available, so generate a new arena. */

if (narenas_limit == 0)

{

if (mp_.arena_max != 0)

narenas_limit = mp_.arena_max;

else if (narenas > mp_.arena_test)

{

int n = __get_nprocs ();

if (n >= 1)

narenas_limit = NARENAS_FROM_NCORES (n);

else

/* We have no information about the system. Assume two

cores. */

narenas_limit = NARENAS_FROM_NCORES (2);

}

}

repeat:;

size_t n = narenas;

/* NB: the following depends on the fact that (size_t)0 - 1 is a

very large number and that the underflow is OK. If arena_max

is set the value of arena_test is irrelevant. If arena_test

is set but narenas is not yet larger or equal to arena_test

narenas_limit is 0. There is no possibility for narenas to

be too big for the test to always fail since there is not

enough address space to create that many arenas. */

if (__glibc_unlikely (n <= narenas_limit - 1))

{

if (catomic_compare_and_exchange_bool_acq (&narenas, n + 1, n))

goto repeat;

a = _int_new_arena (size);

if (__glibc_unlikely (a == NULL))

catomic_decrement (&narenas);

}

else

a = reused_arena (avoid_arena);

}

return a;

}Specifically, the allocator will try first to serve an arena from the free list:

static mstate

get_free_list (void)

{

mstate replaced_arena = thread_arena;

mstate result = free_list;

if (result != NULL)

{

__libc_lock_lock (free_list_lock);

result = free_list;

if (result != NULL)

{

free_list = result->next_free;

/* The arena will be attached to this thread. */

assert (result->attached_threads == 0);

result->attached_threads = 1;

detach_arena (replaced_arena);

}

__libc_lock_unlock (free_list_lock);

if (result != NULL)

{

LIBC_PROBE (memory_arena_reuse_free_list, 1, result);

__libc_lock_lock (result->mutex);

thread_arena = result;

}

}

return result;

}Otherwise if the number of created arenas does not exceed the limit, a new one is created:

if (__glibc_unlikely (n <= narenas_limit - 1))

{

if (catomic_compare_and_exchange_bool_acq (&narenas, n + 1, n))

goto repeat;

a = _int_new_arena (size);

if (__glibc_unlikely (a == NULL))

catomic_decrement (&narenas);

}If the number of arenas reaches the limit, the allocator iterate over available arenas, starting from the main arena. Specifically, if attempts to return the first less solicited arena i.e for which the lock can be acquired without contention if (!__libc_lock_trylock (result->mutex)) (unlike spinlocks, mutexes cause threads that fail to acquire the lock to be descheduled, rather than spinning, which reduces cpu usage under contention; for more details about locks, please refer to my previous post: https://www.deep-kondah.com/on-the-complexity-of-synchronization-memory-barriers-locks-and-scalability/).If none can be returned, then the current thread needs to wait for the lock __libc_lock_lock (result->mutex); and finally once the task is resumed, the allocator attaches the arena to the current thread.

static mstate

reused_arena (mstate avoid_arena)

{

mstate result;

/* FIXME: Access to next_to_use suffers from data races. */

static mstate next_to_use;

if (next_to_use == NULL)

next_to_use = &main_arena;

/* Iterate over all arenas (including those linked from

free_list). */

result = next_to_use;

do

{

if (!__libc_lock_trylock (result->mutex))

goto out;

/* FIXME: This is a data race, see _int_new_arena. */

result = result->next;

}

while (result != next_to_use);

/* Avoid AVOID_ARENA as we have already failed to allocate memory

in that arena and it is currently locked. */

if (result == avoid_arena)

result = result->next;

/* No arena available without contention. Wait for the next in line. */

LIBC_PROBE (memory_arena_reuse_wait, 3, &result->mutex, result, avoid_arena);

__libc_lock_lock (result->mutex);

out:

/* Attach the arena to the current thread. */

{

/* Update the arena thread attachment counters. */

mstate replaced_arena = thread_arena;

__libc_lock_lock (free_list_lock);

detach_arena (replaced_arena);

/* We may have picked up an arena on the free list. We need to

preserve the invariant that no arena on the free list has a

positive attached_threads counter (otherwise,

arena_thread_freeres cannot use the counter to determine if the

arena needs to be put on the free list). We unconditionally

remove the selected arena from the free list. The caller of

reused_arena checked the free list and observed it to be empty,

so the list is very short. */

remove_from_free_list (result);

++result->attached_threads;

__libc_lock_unlock (free_list_lock);

}

LIBC_PROBE (memory_arena_reuse, 2, result, avoid_arena);

thread_arena = result;

next_to_use = result->next;

return result;

}Now once the thread has an arena attached (housing memory chunks), the allocator can use it to allocate a chunk of memory by delegating the control to _int_malloc(), victim = _int_malloc (ar_ptr, bytes). Finally the arena lock is released __libc_lock_unlock (ar_ptr->mutex); and the memory chunk is served (with memory tagging if applicable victim = tag_new_usable (victim)).

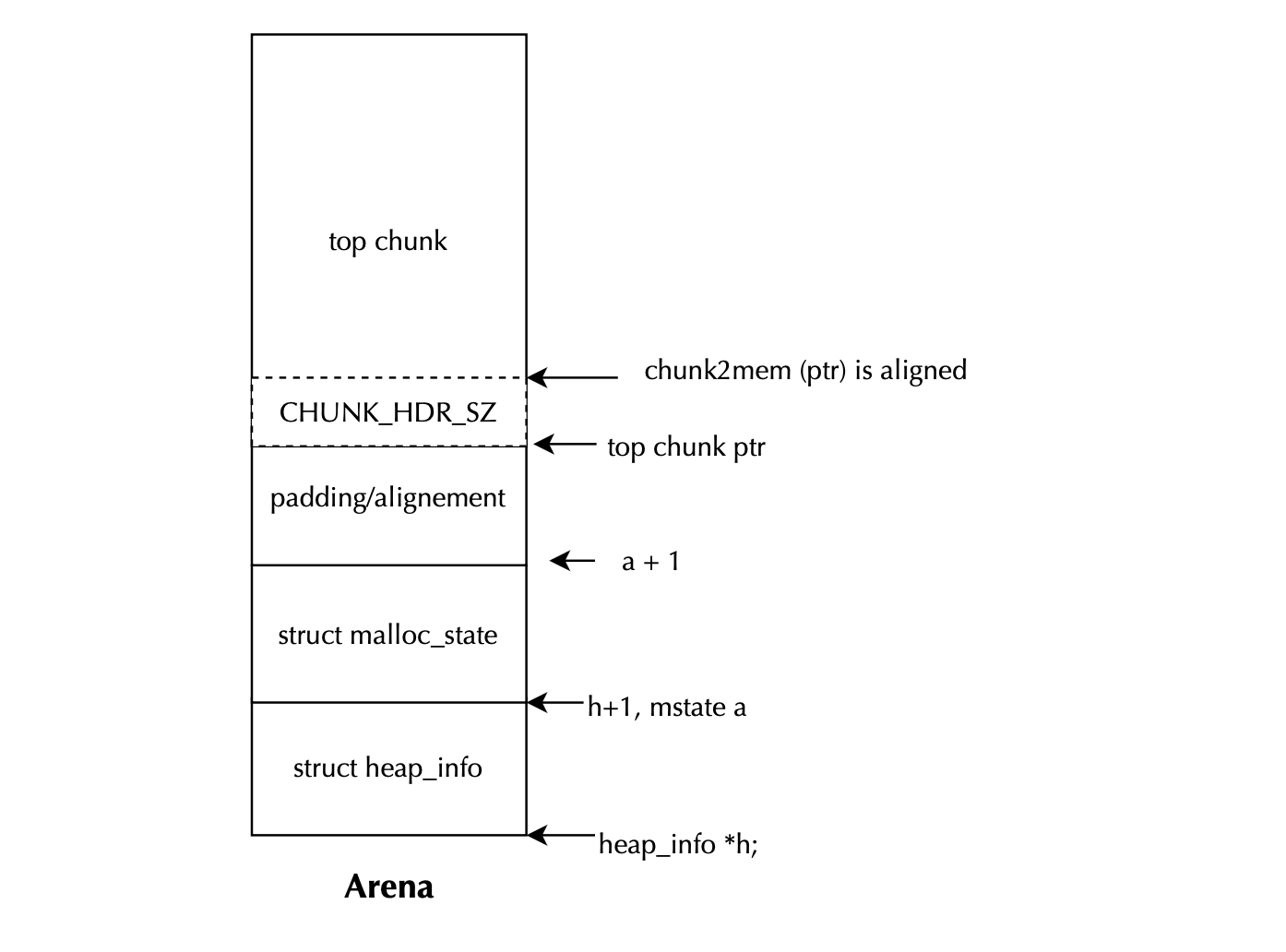

The code for creating a new arena is located in _int_new_arena() and works as follows. Given a request size plus overhead, it allocates a new heap (via alloc_new_heap). The heap is described by a heap_info struct, followed in memory by an mstate (the malloc state data structure representing the arena), and finally padding is added for alignment purposes.

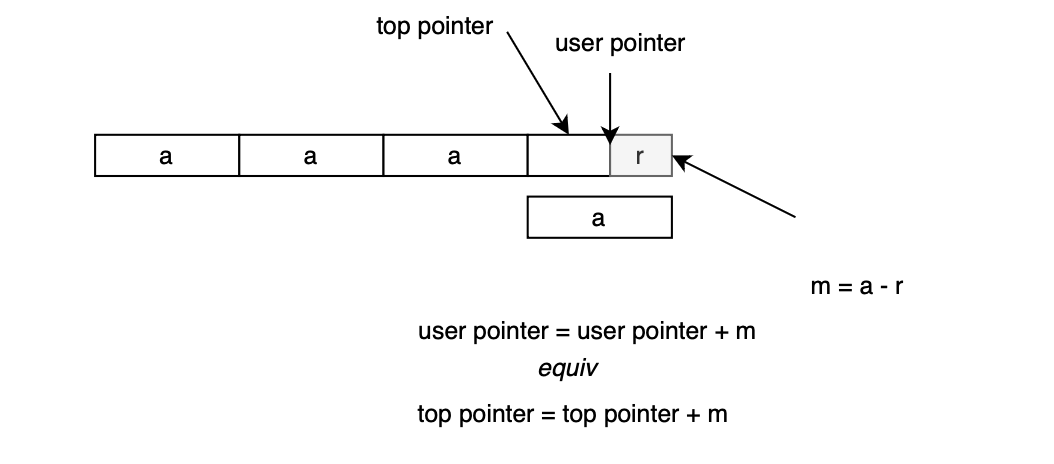

The top_pad value (stored in malloc_par or _mp) is added on top of the requested size to accommodate future allocations without immediately requiring additional mmap calls. The heap is allocated via new_heap (discussed in the next subsection):

h = new_heap(size + (sizeof(*h) + sizeof(*a) + MALLOC_ALIGNMENT), mp_.top_pad);

Here, MALLOC_ALIGNMENT is added to ensure that after placing heap_info and malloc_state, there is enough padding to allow the start of the user allocations (i.e chunk2mem (ptr)) to be rounded up to the next aligned address. This is sufficient because, in the worst case, the starting address could be misaligned by up toMALLOC_ALIGNMENT - 1. The malloc state pointer is then initialized:

a = h->ar_ptr = (mstate)(h + 1);

Adding 1 to a pointer of type heap_info * advances it by sizeof(heap_info) bytes. Next, the arena is initialized via malloc_init_state, which was covered earlier. The top chunk of the arena begins at:

ptr = (char *)(a + 1);

This pointer is checked for alignment to MALLOC_ALIGNMENT to ensure the memory returned to the user is properly aligned:

misalign = (uintptr_t)chunk2mem(ptr) & MALLOC_ALIGN_MASK;

If not aligned, the top chunk pointer is adjusted:

ptr += MALLOC_ALIGNMENT - misalign;

This ensures ptr is properly aligned by advancing it past the misalignment. The arena’s top chunk is then set top(a) = (mchunkptr)ptr; and metadata of the top chunk is updated as well:

set_head(top(a), (((char *)h + h->size) - ptr) | PREV_INUSE);

This sets the top chunk’s size to cover the remaining heap space (from the aligned ptr to the end of the heap i.e (char *)h + h->size) - ptr) and marks it asPREV_INUSE. The PREV_INUSE bit is always set for the top chunk. Finally, the new arena is chained to main_arena, and the previous arena is detached from the current thread via detach_arena(replaced_arena);.

The code for creating a new heap is shown below.

static heap_info *

new_heap (size_t size, size_t top_pad)

{

if (mp_.hp_pagesize != 0 && mp_.hp_pagesize <= heap_max_size ())

{

heap_info *h = alloc_new_heap (size, top_pad, mp_.hp_pagesize,

mp_.hp_flags);

if (h != NULL)

return h;

}

return alloc_new_heap (size, top_pad, GLRO (dl_pagesize), 0);

}

...

static heap_info *

alloc_new_heap (size_t size, size_t top_pad, size_t pagesize,

int mmap_flags)

{

char *p1, *p2;

unsigned long ul;

heap_info *h;

size_t min_size = heap_min_size ();

size_t max_size = heap_max_size ();

if (size + top_pad < min_size)

size = min_size;

else if (size + top_pad <= max_size)

size += top_pad;

else if (size > max_size)

return NULL;

else

size = max_size;

size = ALIGN_UP (size, pagesize);

/* A memory region aligned to a multiple of max_size is needed.

No swap space needs to be reserved for the following large

mapping (on Linux, this is the case for all non-writable mappings

anyway). */

p2 = MAP_FAILED;

if (aligned_heap_area)

{

p2 = (char *) MMAP (aligned_heap_area, max_size, PROT_NONE, mmap_flags);

aligned_heap_area = NULL;

if (p2 != MAP_FAILED && ((unsigned long) p2 & (max_size - 1)))

{

__munmap (p2, max_size);

p2 = MAP_FAILED;

}

}

if (p2 == MAP_FAILED)

{

p1 = (char *) MMAP (NULL, max_size << 1, PROT_NONE, mmap_flags);

if (p1 != MAP_FAILED)

{

p2 = (char *) (((uintptr_t) p1 + (max_size - 1))

& ~(max_size - 1));

ul = p2 - p1;

if (ul)

__munmap (p1, ul);

else

aligned_heap_area = p2 + max_size;

__munmap (p2 + max_size, max_size - ul);

}

else

{

/* Try to take the chance that an allocation of only max_size

is already aligned. */

p2 = (char *) MMAP (NULL, max_size, PROT_NONE, mmap_flags);

if (p2 == MAP_FAILED)

return NULL;

if ((unsigned long) p2 & (max_size - 1))

{

__munmap (p2, max_size);

return NULL;

}

}

}

if (__mprotect (p2, size, mtag_mmap_flags | PROT_READ | PROT_WRITE) != 0)

{

__munmap (p2, max_size);

return NULL;

}

/* Only considere the actual usable range. */

__set_vma_name (p2, size, " glibc: malloc arena");

madvise_thp (p2, size);

h = (heap_info *) p2;

h->size = size;

h->mprotect_size = size;

h->pagesize = pagesize;

LIBC_PROBE (memory_heap_new, 2, h, h->size);

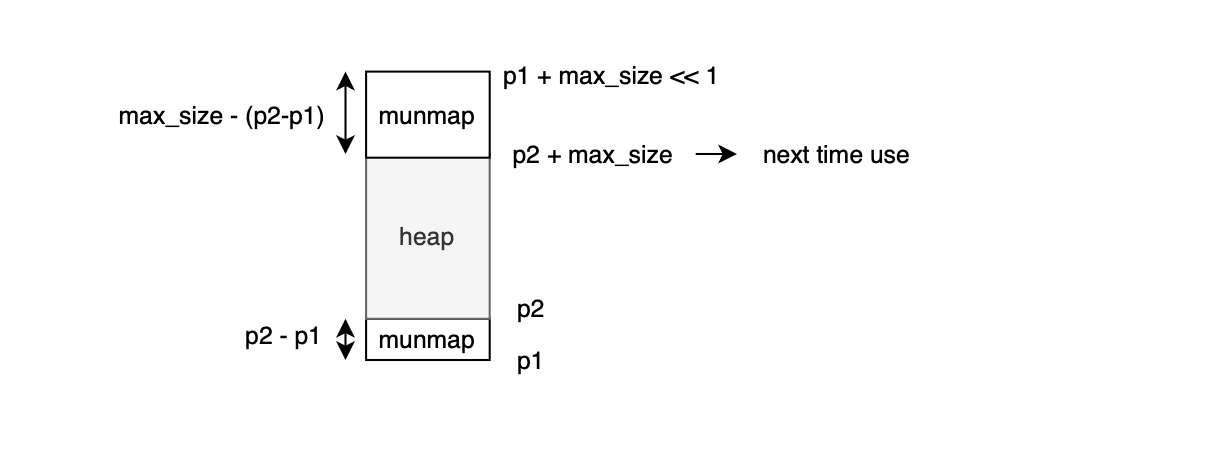

return h;

}In its simplest form, the allocator first reserves virtual address space using MMAP(NULL, max_size, PROT_NONE, mmap_flags);, if the address returned by mmap is aligned to max_size, it then change the protection flags of the already reserved virtual address range using __mprotect(p2, size, mtag_mmap_flags | PROT_READ | PROT_WRITE);. Otherwise, it unmaps the region. Specifically, it starts by requesting 2*max_sizeor max_size << 1 of virtual memory space, p1 = (char *) MMAP(NULL, max_size << 1, PROT_NONE, mmap_flags);, it then rounds up the base address to the next max_size-aligned boundary p2 = (char *) (((uintptr_t)p1 + (max_size - 1)) & ~(max_size - 1));, to reduce heap fragmentation and simplify management (e.g one can know heap from pointer by simply rounding down to nearest multiple of HEAP_MAX_SIZE i.e heap_info *h = (heap_info *) ((uintptr_t)p & ~(HEAP_MAX_SIZE - 1));), it updates a global variable for next heap allocation aligned_heap_area = p2 + max_size;, which marks the next aligned region that may be reused later. The allocator then unmaps any unused memory before the aligned address __munmap(p1, ul);, ul = p2 - p1 and also unmaps the unused tail __munmap(p2 + max_size, max_size - ul);. After storing aligned_heap_area = p2 + max_size, future heap allocations can attempt to reuse this address by passing it as a hint to mmap p2 = (char *) MMAP(aligned_heap_area, max_size, PROT_NONE, mmap_flags);. The newly mapped region is then labeled __set_vma_name(p2, size, "glibc: malloc arena");. Finally, it calls madvise_thp(p2, size);, to hint that the region should be backed by Transparent Huge Pages (THP), and returns the heap pointer h = (heap_info *) p2;.

As discussed earlier, when the number of arenas created exceeds a predefined threshold, the requesting thread must reuse an existing arena for memory allocation. The code implementing this logic is found in reused_arena(mstate avoid_arena). It attempts to acquire the lock without contention __libc_lock_trylock(result->mutex). If this fails immediately, it checks the next arena with result = result->next. If no arena is available without contention, it will then block and wait for an arena to become free using __libc_lock_lock(result->mutex). Once an arena is successfully acquired, it attaches the arena to the current thread, updates the attached thread counter with ++result->attached_threads, and updates next_to_use = result->next so that the next thread entering the function can start from there. The next_to_use variable is defined as a static function variable: static mstate next_to_use.

static mstate

reused_arena (mstate avoid_arena)

{

mstate result;

/* FIXME: Access to next_to_use suffers from data races. */

static mstate next_to_use;int_malloc takes as input the arena pointer and the amount of bytes requested by the user (without header or memory overhead).

static void *

_int_malloc (mstate av, size_t bytes)

{

INTERNAL_SIZE_T nb; /* normalized request size */

unsigned int idx; /* associated bin index */

.....

nb = checked_request2size (bytes);

if (nb == 0)

{

__set_errno (ENOMEM);

return NULL;

}

/* There are no usable arenas. Fall back to sysmalloc to get a chunk from

mmap. */

if (__glibc_unlikely (av == NULL))

{

void *p = sysmalloc (nb, av);

if (p != NULL)

alloc_perturb (p, bytes);

return p;

}The size, which can not exceed PTRDIFF_MAX is first validated and aligned using checked_request2size which delegates tot the macro request2size for adding the memory overhead + alignment to MALLOC_ALIGNEMENT . If the arena pointer is null, meaning no suitable arena was found, then the allocator falls back to sysmalloc (will be covered later) and returns the memory perturbed with alloc_perturb if configured.

#define MALLOC_ALIGN_MASK (MALLOC_ALIGNMENT - 1)

#define MALLOC_ALIGNMENT (2 * SIZE_SZ < __alignof__ (long double) \

? __alignof__ (long double) : 2 * SIZE_SZ)The fast bin index traversal is listed below.

if ((unsigned long) (nb) <= (unsigned long) (get_max_fast ()))

{

idx = fastbin_index (nb);

mfastbinptr *fb = &fastbin (av, idx);

mchunkptr pp;

victim = *fb;

if (victim != NULL)

{

if (__glibc_unlikely (misaligned_chunk (victim)))

malloc_printerr ("malloc(): unaligned fastbin chunk detected 2");

if (SINGLE_THREAD_P)

*fb = REVEAL_PTR (victim->fd);

else

REMOVE_FB (fb, pp, victim);

if (__glibc_likely (victim != NULL))

{

size_t victim_idx = fastbin_index (chunksize (victim));

if (__glibc_unlikely (victim_idx != idx))

malloc_printerr ("malloc(): memory corruption (fast)");

check_remalloced_chunk (av, victim, nb); If the requested size qualifies as a fastbin i.e. if ((unsigned long)(nb) <= (unsigned long)(get_max_fast())), the corresponding fastbin index is computed. Next, the memory chunk is validated.

/*

Properties of chunks recycled from fastbins

*/

static void

do_check_remalloced_chunk (mstate av, mchunkptr p, INTERNAL_SIZE_T s)

{

INTERNAL_SIZE_T sz = chunksize_nomask (p) & ~(PREV_INUSE | NON_MAIN_ARENA);

if (!chunk_is_mmapped (p))

{

assert (av == arena_for_chunk (p));

if (chunk_main_arena (p))

assert (av == &main_arena);

else

assert (av != &main_arena);

}

do_check_inuse_chunk (av, p);

/* Legal size ... */

assert ((sz & MALLOC_ALIGN_MASK) == 0);

assert ((unsigned long) (sz) >= MINSIZE);

/* ... and alignment */

assert (!misaligned_chunk (p));

/* chunk is less than MINSIZE more than request */

assert ((long) (sz) - (long) (s) >= 0);

assert ((long) (sz) - (long) (s + MINSIZE) < 0);

}Now, even if a chunk is present, it cannot be taken blindly due to the possibility of a race condition. Specifically, if the program is in single threaded mode (checked via the SINGLE_THREAD_P macro), it is safe to update the bin directly by advancing the linked list after revealing the actual pointer (deobfuscating with REVEAL_PTR, we discussed pointer obfuscation earlier):

mfastbinptr *fb = &fastbin (av, idx);

*fb = REVEAL_PTR(victim->fd);

However, if there are several threads in the process, the operation must be done atomically using catomic_compare_and_exchange_val_acq. This ensures the update only occurs if the value we read hasn't changed in the meantime preventing concurrent modification (basically read again pp = REVEAL_PTR (victim->fd);).

do \

{ \

victim = pp; \

if (victim == NULL) \

break; \

pp = REVEAL_PTR (victim->fd); \

if (__glibc_unlikely (pp != NULL && misaligned_chunk (pp))) \

malloc_printerr ("malloc(): unaligned fastbin chunk detected"); \

} \

while ((pp = catomic_compare_and_exchange_val_acq (fb, pp, victim)) \

!= victim);Next, it checks that the size indicated in the chunk is correct with if (__builtin_expect(victim_idx != idx, 0)), meaning it maps to the same index; this is to catch memory corruptions. It then calls check_remalloced_chunk to perform sanity checks. For example, if the chunk is not mmaped, its associated arena (main or heap) is retrieved and compared with the one expected from the function parameters to ensure consistency. The function do_check_inuse_chunk is called to verify properties of in-use chunks (do_check_inuse_chunk(mstate av, mchunkptr p)). The properties of in-use chunks are as follows: first, do_check_chunk validates general chunk properties, i.e., if the chunk is not served by mmap and is not the top chunk, it must have a legal address: assert(((char *)p) >= min_address); and assert(((char *)p + sz) <= ((char *)(av->top)));. If the arena is contiguous (determined by inspecting the arena flag), and the chunk is the top chunk, it must always be at least MINSIZE in size and must have its previous-in-use bit marked: assert(prev_inuse(p));. If the chunk is mmaped, its size must be page-aligned: assert(((prev_size(p) + sz) & (GLRO(dl_pagesize) - 1)) == 0);, and its memory pointer must also be aligned: assert(aligned_OK(chunk2mem(p)));. Additionally, if the arena is contiguous, the legal address check is added: assert(((char *)p) < min_address || ((char *)p) >= max_address); (with top previously initialized, i.e., av->top != initial_top(av)). do_check_inuse_chunk terminates immediately if the chunk is mmap-ed, as mmap-ed chunks have no next/previous linkage. Otherwise, it asserts that the chunk is in use: assert(inuse(p)); (i.e., the next chunk has the PREV_INUSE bit set; fast bins chunks are not consolidated). Once the chunk is validated, surrounding chunks are also validated: the next chunk is fetched with next = next_chunk(p);, and if free, do_check_free_chunk(av, next); is called. Similarly, if next == av->top, assertions check assert(prev_inuse(next)); and assert(chunksize(next) >= MINSIZE);. The previous chunk mchunkptr prv = prev_chunk(p); is also checked: if not in use (if (!prev_inuse(p))), it verifies assert(next_chunk(prv) == p); and calls do_check_free_chunk(av, prv);, which performs similar sanity checks as do_check_chunk to validate general properties: the chunk claims to be free, so confirm assert(!inuse(p));, it is not mmaped assert(!chunk_is_mmapped(p));, size is aligned assert((sz & MALLOC_ALIGN_MASK) == 0);, the user pointer is aligned assert(aligned_OK(chunk2mem(p)));, the footer matches assert(prev_size(next_chunk(p)) == sz);, and the chunk is consolidated, i.e., assert(prev_inuse(p)); (otherwise it would have been merged) and assert(next == av->top || inuse(next)); . Lastly, linked list properties are verified: assert(p->fd->bk == p); and assert(p->bk->fd == p);.

static void

do_check_free_chunk (mstate av, mchunkptr p)

{

INTERNAL_SIZE_T sz = chunksize_nomask (p) & ~(PREV_INUSE | NON_MAIN_ARENA);

mchunkptr next = chunk_at_offset (p, sz);

do_check_chunk (av, p);

/* Chunk must claim to be free ... */

assert (!inuse (p));

assert (!chunk_is_mmapped (p));

/* Unless a special marker, must have OK fields */

if ((unsigned long) (sz) >= MINSIZE)

{

assert ((sz & MALLOC_ALIGN_MASK) == 0);

assert (!misaligned_chunk (p));

/* ... matching footer field */

assert (prev_size (next_chunk (p)) == sz);

/* ... and is fully consolidated */

assert (prev_inuse (p));

assert (next == av->top || inuse (next));

/* ... and has minimally sane links */

assert (p->fd->bk == p);

assert (p->bk->fd == p);

}

else /* markers are always of size SIZE_SZ */

assert (sz == SIZE_SZ);

}Now after do_check_inuse_chunk completes, the following checks are performed: the chunk size, cleared of metadata flags (chunksize_nomask(p) & ~(PREV_INUSE | NON_MAIN_ARENA)), must be properly aligned:

assert((sz & MALLOC_ALIGN_MASK) == 0);

The size must be at least MINSIZE:

assert((unsigned long)(sz) >= MINSIZE);

The chunk size must be greater than or equal to the request size (including overhead):

assert((long)(sz) - (long)(s) >= 0);

and less than the request size plus MINSIZE:

assert((long)(sz) - (long)(s + MINSIZE) < 0);

Once the chunk is validated, and if tcache is enabled (USE_TCACHE), the allocator computes the tcache index (tc_idx) corresponding to the chunk size and stash all bins with same size in the thread-local cache by traversing the bin index i.e linked list improving performance through temporal and spatial locality.

#if USE_TCACHE

/* While we're here, if we see other chunks of the same size,

stash them in the tcache. */

size_t tc_idx = csize2tidx (nb);

if (tcache != NULL && tc_idx < mp_.tcache_small_bins)

{

mchunkptr tc_victim;

/* While bin not empty and tcache not full, copy chunks. */

while (tcache->num_slots[tc_idx] != 0 && (tc_victim = *fb) != NULL)

{

if (__glibc_unlikely (misaligned_chunk (tc_victim)))

malloc_printerr ("malloc(): unaligned fastbin chunk detected 3");

size_t victim_tc_idx = csize2tidx (chunksize (tc_victim));

if (__glibc_unlikely (tc_idx != victim_tc_idx))

malloc_printerr ("malloc(): chunk size mismatch in fastbin");

if (SINGLE_THREAD_P)

*fb = REVEAL_PTR (tc_victim->fd);

else

{

REMOVE_FB (fb, pp, tc_victim);

if (__glibc_unlikely (tc_victim == NULL))

break;

}

tcache_put (tc_victim, tc_idx);

}

}

#endifFinally, allocation completes by returning a pointer to the usable memory i.e. the memory address just after the chunk header void *p = chunk2mem (victim);, with optional perturbation if enabled alloc_perturb (p, bytes);.

Now that we are done with the fastbin lookup, next, let's see what happens during small bin index searching i.e. when in_smallbin_range(size) is true.

if (in_smallbin_range (nb))

{

idx = smallbin_index (nb);

bin = bin_at (av, idx);

if ((victim = last (bin)) != bin)

{

bck = victim->bk;

if (__glibc_unlikely (bck->fd != victim))

malloc_printerr ("malloc(): smallbin double linked list corrupted");

set_inuse_bit_at_offset (victim, nb);

bin->bk = bck;

bck->fd = bin;

if (av != &main_arena)

set_non_main_arena (victim);

check_malloced_chunk (av, victim, nb);

#if USE_TCACHE

/* While we're here, if we see other chunks of the same size,

stash them in the tcache. */

size_t tc_idx = csize2tidx (nb);

if (tcache != NULL && tc_idx < mp_.tcache_small_bins)

{

mchunkptr tc_victim;

/* While bin not empty and tcache not full, copy chunks over. */

while (tcache->num_slots[tc_idx] != 0

&& (tc_victim = last (bin)) != bin)

{

if (tc_victim != NULL)

{

bck = tc_victim->bk;

set_inuse_bit_at_offset (tc_victim, nb);

if (av != &main_arena)

set_non_main_arena (tc_victim);

bin->bk = bck;

bck->fd = bin;

tcache_put (tc_victim, tc_idx);

}

}

}

#endif

void *p = chunk2mem (victim);

alloc_perturb (p, bytes);

return p;

}

}We start by mapping the index using smallbin_index(nb). Then we retrieve the corresponding bin bin_at(av, idx); remember, each smallbin has a sentinel node, index i holds the forward pointer (fd), and index i+1 holds the backward pointer (bk). If the bin is not empty (i.e. bin->bk != bin), we get the last chunk: victim = last(bin). Before using the chunk, a sanity check is performed to guard against corruption:

if (__glibc_unlikely(bck->fd != victim)) {

malloc_printerr ("malloc(): smallbin double linked list corrupted");

}

If valid, the chunk is marked as in-use by setting the in-use bit in the metadata set_inuse_bit_at_offset(victim, nb); and set the non-main arena bit if needed set_non_main_arena(victim); Then the double linked list is updated to remove the victim chunk:

bin->bk = bck;

bck->fd = bin;

As with fastbins, do_check_remalloced_chunk is called to validate additional chunk properties (via do_check_malloced_chunk)

If there are other chunks of the same size, they may be stashed in the tcache to improve temporal and spatial locality. Finally, the user receives a pointer to the usable memory, chunk2mem (victim).

As said before, the chunks in fast bin are marked as in_use. malloc_consolidate() basically performs the consolidation of theses chunks i.e for each bin index, it traverse the list and performs backward:

if (!prev_inuse(p)) {

prevsize = prev_size (p);

size += prevsize;

p = chunk_at_offset(p, -((long) prevsize));

if (__glibc_unlikely (chunksize(p) != prevsize))

malloc_printerr ("corrupted size vs. prev_size in fastbins");

unlink_chunk (av, p);

}

and forward consolidation:

if (nextchunk != av->top) {

nextinuse = inuse_bit_at_offset(nextchunk, nextsize);

if (!nextinuse) {

size += nextsize;

unlink_chunk (av, nextchunk);

} else

clear_inuse_bit_at_offset(nextchunk, 0);

first_unsorted = unsorted_bin->fd;

unsorted_bin->fd = p;

first_unsorted->bk = p;

if (!in_smallbin_range (size)) {

p->fd_nextsize = NULL;

p->bk_nextsize = NULL;

}

set_head(p, size | PREV_INUSE);

p->bk = unsorted_bin;

p->fd = first_unsorted;

set_foot(p, size);

}

else {

size += nextsize;

set_head(p, size | PREV_INUSE);

av->top = p;

}static void malloc_consolidate(mstate av)

{

mfastbinptr* fb; /* current fastbin being consolidated */

mfastbinptr* maxfb; /* last fastbin (for loop control) */

mchunkptr p; /* current chunk being consolidated */

mchunkptr nextp; /* next chunk to consolidate */

mchunkptr unsorted_bin; /* bin header */

mchunkptr first_unsorted; /* chunk to link to */

/* These have same use as in free() */

mchunkptr nextchunk;

INTERNAL_SIZE_T size;

INTERNAL_SIZE_T nextsize;

INTERNAL_SIZE_T prevsize;

int nextinuse;

atomic_store_relaxed (&av->have_fastchunks, false);

unsorted_bin = unsorted_chunks(av);

/*

Remove each chunk from fast bin and consolidate it, placing it

then in unsorted bin. Among other reasons for doing this,

placing in unsorted bin avoids needing to calculate actual bins

until malloc is sure that chunks aren't immediately going to be

reused anyway.

*/

maxfb = &fastbin (av, NFASTBINS - 1);

fb = &fastbin (av, 0);

do {

p = atomic_exchange_acquire (fb, NULL);

if (p != NULL) {

do {

{

if (__glibc_unlikely (misaligned_chunk (p)))

malloc_printerr ("malloc_consolidate(): "

"unaligned fastbin chunk detected");

unsigned int idx = fastbin_index (chunksize (p));

if ((&fastbin (av, idx)) != fb)

malloc_printerr ("malloc_consolidate(): invalid chunk size");

}

check_inuse_chunk(av, p);

nextp = REVEAL_PTR (p->fd);

/* Slightly streamlined version of consolidation code in free() */

size = chunksize (p);

nextchunk = chunk_at_offset(p, size);

nextsize = chunksize(nextchunk);

if (!prev_inuse(p)) {

prevsize = prev_size (p);

size += prevsize;

p = chunk_at_offset(p, -((long) prevsize));

if (__glibc_unlikely (chunksize(p) != prevsize))

malloc_printerr ("corrupted size vs. prev_size in fastbins");

unlink_chunk (av, p);

}

if (nextchunk != av->top) {

nextinuse = inuse_bit_at_offset(nextchunk, nextsize);

if (!nextinuse) {

size += nextsize;

unlink_chunk (av, nextchunk);

} else

clear_inuse_bit_at_offset(nextchunk, 0);

first_unsorted = unsorted_bin->fd;

unsorted_bin->fd = p;

first_unsorted->bk = p;

if (!in_smallbin_range (size)) {

p->fd_nextsize = NULL;

p->bk_nextsize = NULL;

}

set_head(p, size | PREV_INUSE);

p->bk = unsorted_bin;

p->fd = first_unsorted;

set_foot(p, size);

}

else {

size += nextsize;

set_head(p, size | PREV_INUSE);

av->top = p;

}

} while ( (p = nextp) != NULL);

}

} while (fb++ != maxfb);

}Note that, as shown above, while fast chunk consolidation happens, the update of the have_fast_chunks must be done atomically (only relaxed as one only need to defeat compiler optimization or reordering as arena is already owned via the mutex and using more aggressive barrier will only incurs unnecessary overhead) i.e atomic_store_relaxed (&av->have_fastchunks, false);.

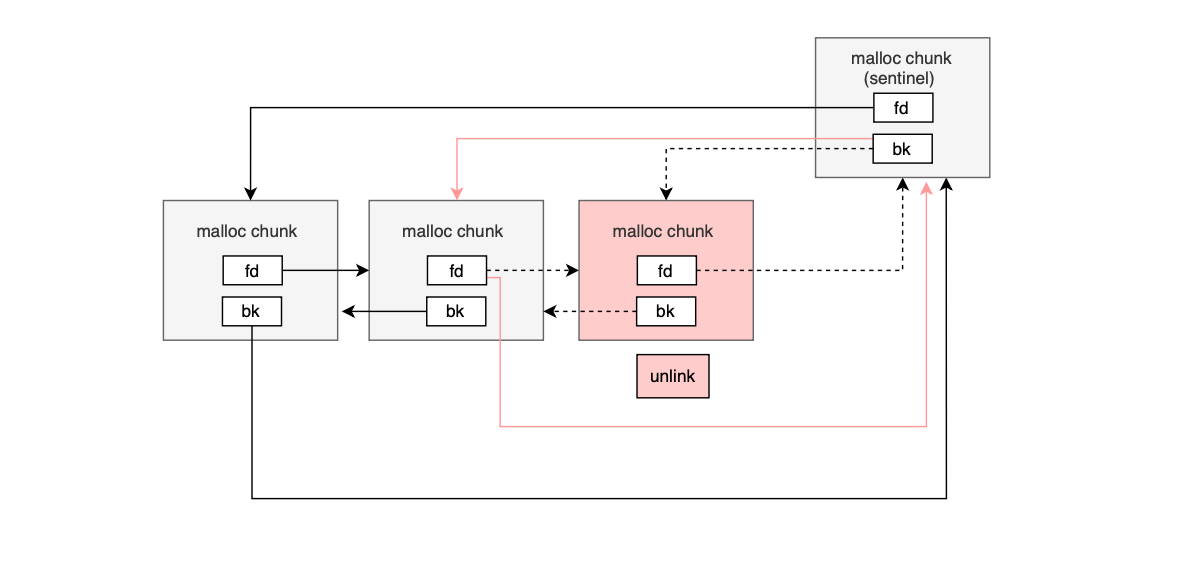

The logic for unlinking a malloc chunk from a free or bin list is listed below.

static void

unlink_chunk (mstate av, mchunkptr p)

{

if (chunksize (p) != prev_size (next_chunk (p)))

malloc_printerr ("corrupted size vs. prev_size");

mchunkptr fd = p->fd;

mchunkptr bk = p->bk;

if (__glibc_unlikely (fd->bk != p || bk->fd != p))

malloc_printerr ("corrupted double-linked list");

fd->bk = bk;

bk->fd = fd;

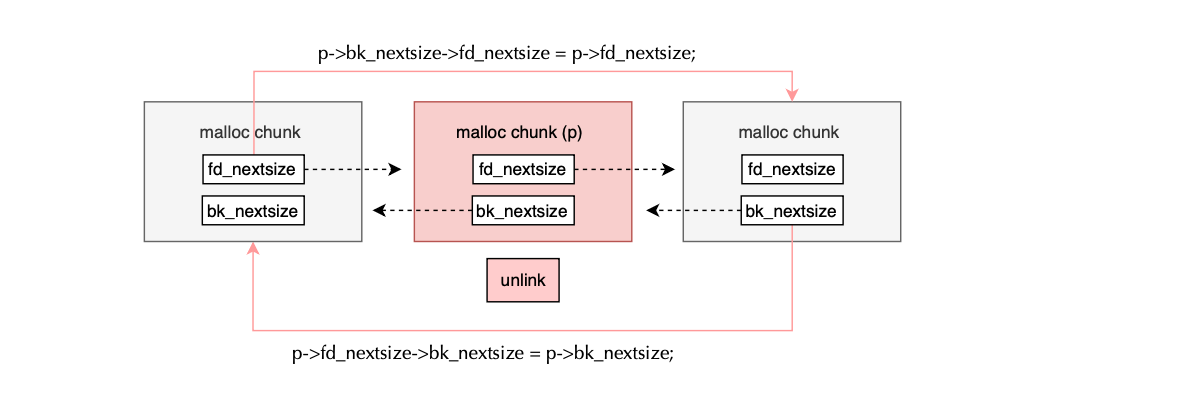

if (!in_smallbin_range (chunksize_nomask (p)) && p->fd_nextsize != NULL)

{

if (p->fd_nextsize->bk_nextsize != p

|| p->bk_nextsize->fd_nextsize != p)

malloc_printerr ("corrupted double-linked list (not small)");

if (fd->fd_nextsize == NULL)

{

if (p->fd_nextsize == p)

fd->fd_nextsize = fd->bk_nextsize = fd;

else

{

fd->fd_nextsize = p->fd_nextsize;

fd->bk_nextsize = p->bk_nextsize;

p->fd_nextsize->bk_nextsize = fd;

p->bk_nextsize->fd_nextsize = fd;

}

}

else

{

p->fd_nextsize->bk_nextsize = p->bk_nextsize;

p->bk_nextsize->fd_nextsize = p->fd_nextsize;

}

}