Whether you are an AI engineer or working on workflow automation, you probably know how much attention the MCP or Model Context Protocol has attracted recently; thanks to the AI hype cycle. Since its introduction by Anthropic, it has gained real momentum, supported by a vibrant community ecosystem with thousands

Whether you are an AI engineer or working on workflow automation, you probably know how much attention the MCP or Model Context Protocol has attracted recently; thanks to the AI hype cycle. Since its introduction by Anthropic, it has gained real momentum, supported by a vibrant community ecosystem with thousands of MCP servers proposed and major companies already announcing MCP support (partial) in their LLM products.

Over the past few days and weeks, I decided to dive into MCP and LLM-MCP integration, to better understand what all the hype was about.

Standardizing how applications inject context for LLMs, MCP aims to streamline the development of Agentic AI systems. However, there are still adoption challenges stemming from MCP’s inherent technical complexity (compared to Function Calling; initial and still widely used approach to connecting LLMs with external tools). In fact, how LLMs actually utilize MCP capabilities is still poorly understood; highlighting a gap between its theoretical benefits and practical usefulness.

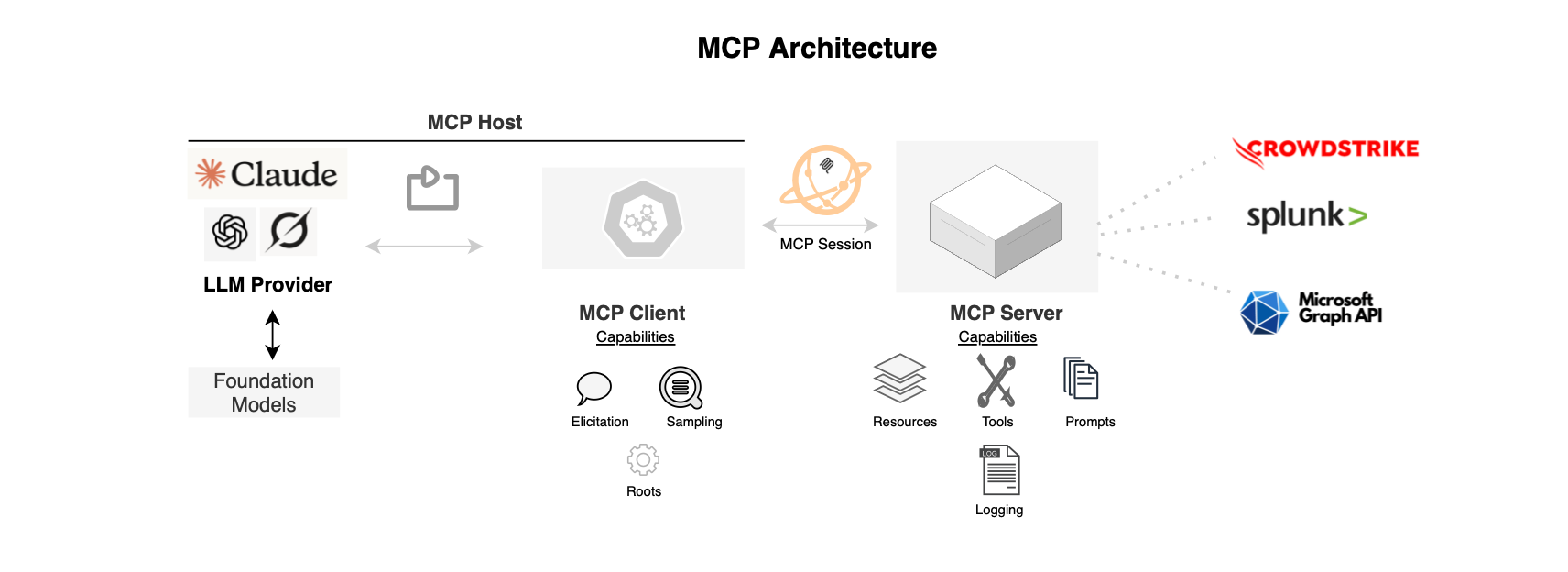

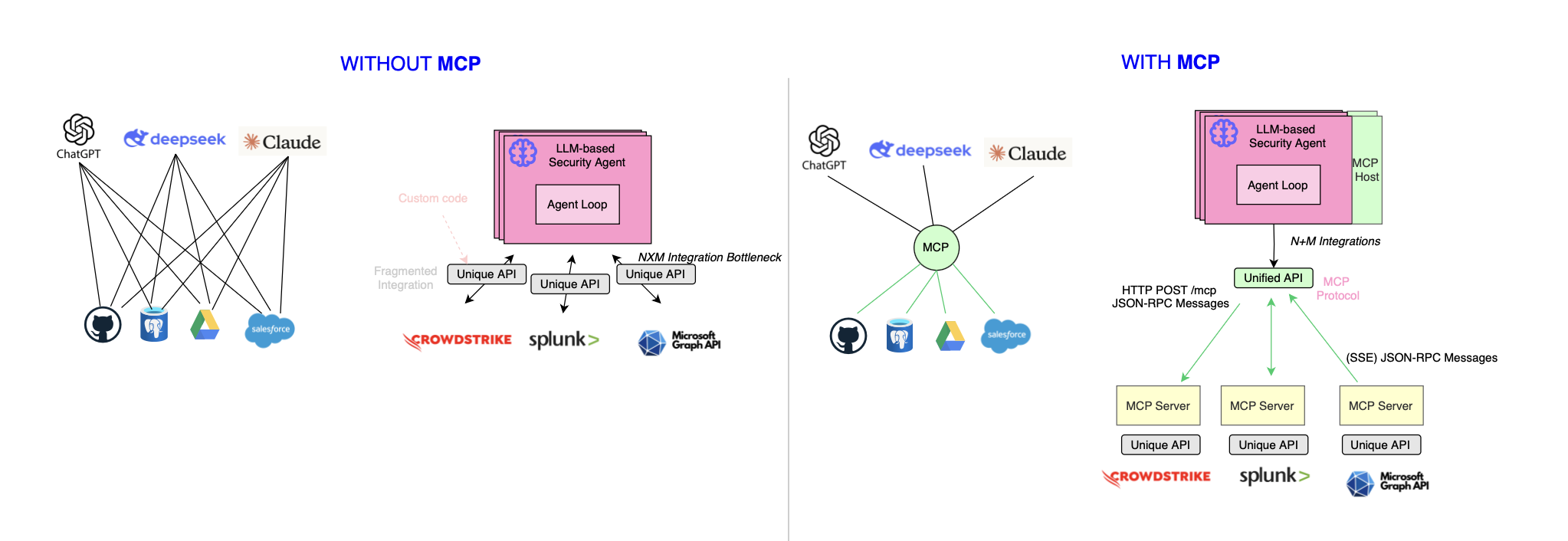

MCP empowers foundation models with context and tool calls, without custom and fragmented integrations (i.e through standardized context sharing mechanisms), and is expected to improve the development productivity. It solves the long-standing N×M integration problem , where each AI application requires engineering unique bespoke integrations for each tool or data source the underlying agent needs to interact with. Without a standard, connecting N AI apps to M tools requires N×M custom integrations duplicating engineering efforts and leading to a fragmented and complex landscape. With MCP, only N + M amenable standard integrations are required, significantly reducing integration complexity in comparison with traditional APIs struggling to cope with real-time and multi-protocol interactions. Workflows could benefit a lot from standardization and we have seen this with OTel, OCSF, etc.

In this post, I will introduce the Model Context Protocol, its standardized primitives (prompts, resources, tools...), its architecture, session management, message formats and routing, security (authentication, authorization and attacks), and finally how MCP agents are developed.

Large Language Models (LLMs) are developed to understand the probability distribution that governs the world language space. Autoregressive models approximate this distribution by predicting subsequent words based on previous context, forming a Markov chain. World knowledge (often referred as parametric knowledge) is stored implicitly within the model's parameters.

LLMs are trained using next token prediction on a large corpora across the web and naturally acquire general and commonsense knowledge of both the world and language and how humans use it to comprehend and interact with the external environment.

LLMs are not only trained to predict the next token, they also undergo a further fine-tuning step (Supervised Fine-Tuning, or SFT) to follow instructions (using (instruction, completion) pairs. Additionally, LLMs must generate unbiased responses and align with human values (alignment problem), something achieved using reinforcement learning from human feedback or RLHF.

With the resulting LLM, one can use different forms of prompting to get answers to questions using acquired world knowledge, apply zero/few-shot learning, and use RAG or Retrieval-Augmented Generation to enhance the LLM’s capabilities.

Once an LLM can follow commands, one can use stacking to create an agent or LLM-based agent (LLMs without function interface are mere text generators and can't do anything meaningful).

The LLM, once equipped with tools and other interfaces, has access to context, and through an agent loop (one way to develop an agent is by using an agent loop; one can use other mechanisms), the foundation model is instructed to choose the next tool to use in order to achieve certain goals. For example, a security agent querying the CrowdStrike API and appending the result of each fetching, forming a larger context for future decisions (e.g autonomous security event investigation).

However, providing LLMs with such aforementioned capabilities requires a high integration cost (increasing the intelligence of an application increases complexity...), and this is where MCP or Model Context Protocol comes into play.

MCP offers a standard way to incorporate context, and empower LLM-based or AI agents to make autonomous decisions.

Language modeling is not something new, it has been a central task in NLP and existed long before the foundation models revolutionized AI applications. Before transformers and the attention mechanism, machine learning models like Word2Vec, RNNs, and LSTMs already showed promising results. However, two main problems persisted: their sequential nature made parallel learning impossible and slowed training, and the influence of distant words (long-term or range dependencies) diminished due to the vanishing gradient problem, essentially the squashing of information during backpropagation (as the gradients propagate through each layer, their magnitude tends to decrease, think of it as\(r^n\) , where \(n\) is large and \(r<1\)). This is why RNNs were known to excel only at short-term dependencies.

Word embeddings produced by Word2Vec and GloVe already produces meaningful latent vectors. But with the introduction of transformer-based architectures and LLMs, you get even more powerful embeddings. Specifically, Word2Vec produces static embeddings, while LLMs generate dynamic contextual embeddings, where each token’s embedding is influenced by the surrounding words or tokens (thanks to the attention mechanism). This is also why LLMs are now widely used for semantic and contextual similarity search providing a compact bottleneck (like a sketch) that summarizes the underlying text.

Today, LLMs are not merely text generators, they are being transformed from raw language models to more capable generative models with sophisticated reasoning and agentic tool use capabilities.

Discussing LLMs in detail and the research around them is beyond the scope of this post, one can explore topics such as LLM scaling laws, multimodal LLMs, and LLM reasoning, there are plenty of scientific articles and free courses available.

To overcome interoperability challenges and compatibility issues (e.g when wiring APIs) across diverse AI frameworks, Anthropic introduced MCP in late November 2024. Within a short period, it has become the de facto standard for AI application development. Major companies have all already embraced (partially) the MCP ecosystem to empower AI and LLM products.

Large language models best exemplified by GPT4 are pre-trained on massive corpora of data and already exhibited outstanding instruction-following performance, and once equipped with a structured tool calling, their capability extends beyond mere text generation. They become capable of executing actions in real-world environments; the augmented foundation model autonomously discover, select when and which tool to use enabling a wide range of use cases.

Every coin has two faces, while MCP brings a lot of benefits in exposing tools (executable functions) and resources to foundation models, it also expand the attack surface and is subject to several attacks including LLM inherent vulnerabilities (Prompt injection, Hallucination, Backdoor... ). For example, Tool Poisoning Attacks, introduced by Invariant Labs, refers to the scenario where the agent initial goal is hijacked due to hidden malicious instructions in the tool description (or runtime responses) exposed via a malicious MCP server. The adversary instructions are not directly visible to users and have the whole attention of the foundation model as the agent uses these descriptions for planning (see LLM sycophancy).

The first problem MCP solves regarding tools calling is the lack of scalability when messy, unscalable integrations are tailored for each use case. Consider a scenario where N tools are used across M applications requiring N × M individual integrations.

Tools are often wrappers around APIs, and because applications are heterogeneous, you typically need an SDK for each programming language. Even if a REST API is available, language-specific web clients are still required. With MCP, however, you can rely on a universal MCP client, reducing the burden of language-specific integrations thereby reducing maintenance overhead.

Some frameworks or providers may enable language models call functions (by serving definitions, parsing arguments, results), but this doesn’t fully solve the integration bottleneck as there’s no standardized way for tools to declare their capabilities and for clients to consume it i.e each provider uses a different function definition. A standard is needed.

Finally, there are cases where you can’t modify the agent code but still want to serve your tool in a unified way (e.g when using ChatGPT or Claude). This is yet another area where MCP shines. With MCP, one needs to implement tools only once and serve it in consistent format, empowering AI agent to dynamically discover, select, and orchestrate tools and moving beyond the static hard-wired workflows.

As will be covered later, MCP is not only about tools and includes additional capabilities on client and server side by introducing several primitives to simplify how to build AI agents and workflows (you don’t even need an LLM to use MCP).

Simply stated, MCP is a specialized protocol for AI or LLM-based applications that standardizes how agents or context-aware AI systems interact with external systems including session management, resource abstraction and tools exposure.

In term of architecture, MCP follows a server–client architecture. First, you have an LLM service such as OpenAI; the agent is built on top of it. MCP clients maintain connections to MCP servers that run alongside the LLM (i.e the foundation model guides the MCP requests). MCP servers act as resource servers, exposing capabilities and data in a standardized way.

MCP supports several transport protocols including standard input/output (stdio) and streamable HTTP. In this post, we focus on streamable HTTP (with optional Server-Sent Events (SSE) responses).

There are several MCP client and server implementations available, we will focus on the Official Python SDK provided by Anthropic.

The MCP protocol uses JSON-RPC 2.0 for communication between clients and servers. MCP defines three primary message types: request, response and notification.

class JSONRPCMessage(RootModel[JSONRPCRequest | JSONRPCNotification | JSONRPCResponse | JSONRPCError]):

pass

Request:

class JSONRPCRequest(Request[dict[str, Any] | None, str]):

"""A request that expects a response."""

jsonrpc: Literal["2.0"]

id: RequestId

method: str

params: dict[str, Any] | None = None{

"jsonrpc": "2.0",

"id": 0,

"method": "string",

"params": {}

}Response:

class JSONRPCResponse(BaseModel):

"""A successful (non-error) response to a request."""

jsonrpc: Literal["2.0"]

id: RequestId

result: dict[str, Any]

model_config = ConfigDict(extra="allow"){

"jsonrpc": "2.0",

"id": 0,

"result": {}

}Error Response:

class JSONRPCError(BaseModel):

"""A response to a request that indicates an error occurred."""

jsonrpc: Literal["2.0"]

id: str | int

error: ErrorData

model_config = ConfigDict(extra="allow")

{

"jsonrpc": "2.0",

"id": 0,

"error": {

"code": ..,

"message": "",

"data": {}

}

}Notification ( one-way message, e.g task progress):

class JSONRPCNotification(Notification[dict[str, Any] | None, str]):

"""A notification which does not expect a response."""

jsonrpc: Literal["2.0"]

params: dict[str, Any] | None = None{

"jsonrpc": "2.0",

"method": string,

"params": {}

}Here is what the first request (initialization phase) issued by the MPC client looks like:

{

"jsonrpc": "2.0",

"id": 0,

"method": "initialize",

"params": {

"protocolVersion": "2025-06-18",

"capabilities": {},

"clientInfo": {

"name": "mcp",

"version": "0.1.0"

}

},

}Each request (and response) includes a unique id, which is used for message routing. Ids are unique per session meaning you can reuse the same id (e.g., 4) across different sessions without conflict.

In addition to requests, notifications travel from client to server or vice versa. In this case, the entity receiving and processing the message/notification (e.g task progress) is not expected to send a response. For example, when the session is initialized, the client sends a notification to complete the handshake, and the server responds with 202 accepted without a follow-up message.

You can review the full schema reference at https://modelcontextprotocol.io/specification/2025-06-18/schema.

To empower LLMs, we equip them with tools. Once the developer provides tool descriptions, the foundation model can be equipped to use the tools using in-context learning implementation; where specific instructions are encoded in the prompt guiding the model to use function calls based on the task the LLM needs to solve. The parameters of those functions are exposed as JSON fields (using JSON Schema). When the model decides a tool should be called, it emits a structured JSON payload matching the schema. The agent code processes the requests, executes the function call and inject the result of the invocation to the context served to the LLM to continue reasoning.

A tool is essentially a function with metadata:

class Tool(BaseModel):

"""Internal tool registration info."""

fn: Callable[..., Any] = Field(exclude=True)

name: str = Field(description="Name of the tool")

title: str | None = Field(None, description="Human-readable title of the tool")

description: str = Field(description="Description of what the tool does")

parameters: dict[str, Any] = Field(description="JSON schema for tool parameters")

fn_metadata: FuncMetadata = Field(

description="Metadata about the function including a pydantic model for tool arguments"

)

is_async: bool = Field(description="Whether the tool is async")

context_kwarg: str | None = Field(None, description="Name of the kwarg that should receive context")

annotations: ToolAnnotations | None = Field(None, description="Optional annotations for the tool")

Tools can be registered declaratively:

@mcp.tool()

def add(a: int, b: int) -> int:

"""Add two numbers"""

return a + bOr programmatically:

class ServerModule:

...

def add_tool(self, server: FastMCP, method: Callable, name: str) -> None:

server.add_tool(method, name=name)

...

class FastMCP(Generic[LifespanResultT]):

...

def add_tool(

self,

fn: AnyFunction,

name: str | None = None,

title: str | None = None,

description: str | None = None,

annotations: ToolAnnotations | None = None,

structured_output: bool | None = None,

) -> None:

self._tool_manager.add_tool(

fn,

name=name,

title=title,

description=description,

annotations=annotations,

structured_output=structured_output,

)When listing tools, the MCP client receives a response that looks as follows:

{

"name" : "tool_name",

"description" : "tool_description.",

"inputSchema" : {

"properties" : { },

"title" : "tool_arguments",

"type" : "object"

},

"outputSchema" : {

"properties" : {

"result" : {

"additionalProperties" : {

"type" : "boolean"

},

"title" : "Result",

"type" : "object"

}

},

"required" : [ "result" ],

"title" : "",

"type" : "object"

}

}

MCP provides both input and output schemas for validation. The Python SDK, for instance, supports validated function calls (as show below both synchronous and asynchronous tools function are supported, however in order to avoid blocking the event loop one should consider wrapping blocking calls as async functions e.g using anyio.to_thread.run_sync):

async def call_fn_with_arg_validation(

self,

fn: Callable[..., Any | Awaitable[Any]],

fn_is_async: bool,

arguments_to_validate: dict[str, Any],

arguments_to_pass_directly: dict[str, Any] | None,

) -> Any:

"""Call the given function with arguments validated and injected.

Arguments are first attempted to be parsed from JSON, then validated against

the argument model, before being passed to the function.

"""

arguments_pre_parsed = self.pre_parse_json(arguments_to_validate)

arguments_parsed_model = self.arg_model.model_validate(arguments_pre_parsed)

arguments_parsed_dict = arguments_parsed_model.model_dump_one_level()

arguments_parsed_dict |= arguments_to_pass_directly or {}

if fn_is_async:

return await fn(**arguments_parsed_dict)

else:

return fn(**arguments_parsed_dict)

Tools can also access the MCP’s request context as illustrated below:

@mcp.tool()

async def my_tool(x: int, ctx: Context) -> str:

"""Tool that uses context capabilities."""

# The context parameter can have any name as long as it's type-annotated

return await process_with_context(x, ctx)Another interface implemented by MCP servers is Resources. It refers to any type of data the server wants to make available to clients for context enrichment, such as database entities or documentation. Each resource is identified by a unique URI that houses either textual or binary data.

class Resource(BaseModel, abc.ABC):

"""Base class for all resources."""

model_config = ConfigDict(validate_default=True)

uri: Annotated[AnyUrl, UrlConstraints(host_required=False)] = Field(default=..., description="URI of the resource")

name: str | None = Field(description="Name of the resource", default=None)

title: str | None = Field(description="Human-readable title of the resource", default=None)

description: str | None = Field(description="Description of the resource", default=None)

mime_type: str = Field(

default="text/plain",

description="MIME type of the resource content",

pattern=r"^[a-zA-Z0-9]+/[a-zA-Z0-9\-+.]+$",

)Resources registration can be achieved using declarative API:

@mcp.resource("greeting://{name}")

def get_greeting(name: str) -> str:

"""Get a personalized greeting"""

return f"Hello, {name}!"or programatic API:

def add_resource(self, resource: Resource) -> None:

"""Add a resource to the server.

Args:

resource: A Resource instance to add

"""

self._resource_manager.add_resource(resource)Resources can be listed at runtime by submitting ListResourcesRequest:

class ListResourcesRequest(PaginatedRequest[Literal["resources/list"]]):

"""Sent from the client to request a list of resources the server has."""

method: Literal["resources/list"]Next, a specific resource can be read by issuing a ReadResourceRequest.

async def read_resource(self, uri: AnyUrl) -> types.ReadResourceResult:

"""Send a resources/read request."""

return await self.send_request(

types.ClientRequest(

types.ReadResourceRequest(

method="resources/read",

params=types.ReadResourceRequestParams(uri=uri),

)

),

types.ReadResourceResult,

)A MCP client can also send a SubscribeRequest to request resources/updated notifications from the server:

class SubscribeRequest(Request[SubscribeRequestParams, Literal["resources/subscribe"]]):

"""

Sent from the client to request resources/updated notifications from the server

whenever a particular resource changes.

"""

method: Literal["resources/subscribe"]

params: SubscribeRequestParamsPrompts are another interface served by the MCP server, standardizing the provisioning of reusable templates presented to users or LLMs designed for common interactions. Each prompt is represented using a name, description and optional arguments.

class Prompt(BaseModel):

"""A prompt template that can be rendered with parameters."""

name: str = Field(description="Name of the prompt")

title: str | None = Field(None, description="Human-readable title of the prompt")

description: str | None = Field(None, description="Description of what the prompt does")

arguments: list[PromptArgument] | None = Field(None, description="Arguments that can be passed to the prompt")

fn: Callable[..., PromptResult | Awaitable[PromptResult]] = Field(exclude=True)

Prompts can be registered using decorators or programmatically.

def add_prompt(self, prompt: Prompt) -> None:

"""Add a prompt to the server.

Args:

prompt: A Prompt instance to add

"""

self._prompt_manager.add_prompt(prompt)@mcp.prompt()

def greet_user(name: str, style: str = "friendly") -> str:

"""Generate a greeting prompt"""

styles = {

"friendly": "Please write a warm, friendly greeting",

"formal": "Please write a formal, professional greeting",

"casual": "Please write a casual, relaxed greeting",

}

return f"{styles.get(style, styles['friendly'])} for someone named {name}."Similar to resources, the MCP can list and fetch prompts by submitting ListPromptsRequest and GetPromptRequest respectively:

async def list_prompts(self, cursor: str | None = None) -> types.ListPromptsResult:

"""Send a prompts/list request."""

return await self.send_request(

types.ClientRequest(

types.ListPromptsRequest(

method="prompts/list",

params=types.PaginatedRequestParams(cursor=cursor) if cursor is not None else None,

)

),

types.ListPromptsResult,

)

async def get_prompt(self, name: str, arguments: dict[str, str] | None = None) -> types.GetPromptResult:

"""Send a prompts/get request."""

return await self.send_request(

types.ClientRequest(

types.GetPromptRequest(

method="prompts/get",

params=types.GetPromptRequestParams(name=name, arguments=arguments),

)

),

types.GetPromptResult,

)Roots provide the operational scope or context for MCP servers. They redirect servers to specific resources, represented with URIs.

class Root(BaseModel):

"""Represents a root directory or file that the server can operate on."""

uri: FileUrl

"""

The URI identifying the root. This *must* start with file:// for now.

This restriction may be relaxed in future versions of the protocol to allow

other URI schemes.

"""

name: str | None = None

"""

An optional name for the root. This can be used to provide a human-readable

identifier for the root, which may be useful for display purposes or for

referencing the root in other parts of the application.

"""

meta: dict[str, Any] | None = Field(alias="_meta", default=None)

"""

See [MCP specification](https://github.com/modelcontextprotocol/modelcontextprotocol/blob/47339c03c143bb4ec01a26e721a1b8fe66634ebe/docs/specification/draft/basic/index.mdx#general-fields)

for notes on _meta usage.

"""

model_config = ConfigDict(extra="allow")Sampling enables MCP servers to request LLM completions by initiating structured inference requests to the MCP client (subject to approval by the user).

class SamplingMessage(BaseModel):

"""Describes a message issued to or received from an LLM API."""

role: Role

content: TextContent | ImageContent | AudioContent

model_config = ConfigDict(extra="allow")Elicitation was added recently. It enables MCP servers to request and gather additional information at runtime like tool parameters.

class ElicitRequest(Request[ElicitRequestParams, Literal["elicitation/create"]]):

"""A request from the server to elicit information from the client."""

method: Literal["elicitation/create"]

params: ElicitRequestParamsclass ElicitRequestParams(RequestParams):

"""Parameters for elicitation requests."""

message: str

requestedSchema: ElicitRequestedSchema

model_config = ConfigDict(extra="allow")During the initialization phase, the client first issues a POST request containing an InitializeRequest (with its own capabilities and information). This is what the request looks like:

{

"method": "initialize",

"params": {

"protocolVersion": "2025-06-18",

"capabilities": {},

"clientInfo": {

"name": "mcp",

"version": "0.1.0"

}

},

"jsonrpc": "2.0",

"id": 0

}The server responds with an InitializeResult (with its capabilities and information):

{

"jsonrpc" : "2.0",

"id" : 0,

"result" : {

"protocolVersion" : "2025-06-18",

"capabilities" : {

"experimental" : { },

"prompts" : {

"listChanged" : false

},

"resources" : {

"subscribe" : false,

"listChanged" : false

},

"tools" : {

"listChanged" : false

}

},

"serverInfo" : {

"name" : "Falcon MCP Server",

"version" : "1.12.4"

},

"instructions" : "This server provides access to CrowdStrike Falcon capabilities."

}

}Then the client sends an InitializedNotification to complete the session handshake:

{"method":"notifications/initialized","jsonrpc":"2.0"}

The server immendiatly responds with 202 Accepted. After the handshake, the session is ready, and interaction can begin e.g the client can issue requests like listing tools:

{"method":"tools/list","jsonrpc":"2.0","id":1}The code performing the above logic is shown below:

async def initialize(self) -> types.InitializeResult:

sampling = types.SamplingCapability() if self._sampling_callback is not _default_sampling_callback else None

elicitation = (

types.ElicitationCapability() if self._elicitation_callback is not _default_elicitation_callback else None

)

roots = (

# TODO: Should this be based on whether we

# _will_ send notifications, or only whether

# they're supported?

types.RootsCapability(listChanged=True)

if self._list_roots_callback is not _default_list_roots_callback

else None

)

result = await self.send_request(

types.ClientRequest(

types.InitializeRequest(

method="initialize",

params=types.InitializeRequestParams(

protocolVersion=types.LATEST_PROTOCOL_VERSION,

capabilities=types.ClientCapabilities(

sampling=sampling,

elicitation=elicitation,

experimental=None,

roots=roots,

),

clientInfo=self._client_info,

),

)

),

types.InitializeResult,

)

if result.protocolVersion not in SUPPORTED_PROTOCOL_VERSIONS:

raise RuntimeError(f"Unsupported protocol version from the server: {result.protocolVersion}")

await self.send_notification(

types.ClientNotification(types.InitializedNotification(method="notifications/initialized"))

)

return resultClientSession

There are different types of notifications, including InitializedNotification, sent from the client after initialization has finished and ResourceUpdatedNotification sent from the server to the client, informing it that a resource has changed and may need to be read again.

The complete list for both client and server is shown below:

class ClientNotification(

RootModel[CancelledNotification | ProgressNotification | InitializedNotification | RootsListChangedNotification]

):class ServerNotification(

RootModel[

CancelledNotification

| ProgressNotification

| LoggingMessageNotification

| ResourceUpdatedNotification

| ResourceListChangedNotification

| ToolListChangedNotification

| PromptListChangedNotification

]

):

passMC Servers offer different capabilities including tools (e.g., calling the CrowdStrike API to contain a host or obtain detection information), resources (e.g., file or documentation), resource subscriptions (e.g., receiving a notification when a database entry changes) and prompt templates (used to guide language model interactions). All tools are registered during server initialization and can be listed by the client (to serve the foundation model).

class ServerCapabilities(BaseModel):

"""Capabilities that a server may support."""

experimental: dict[str, dict[str, Any]] | None = None

"""Experimental, non-standard capabilities that the server supports."""

logging: LoggingCapability | None = None

"""Present if the server supports sending log messages to the client."""

prompts: PromptsCapability | None = None

"""Present if the server offers any prompt templates."""

resources: ResourcesCapability | None = None

"""Present if the server offers any resources to read."""

tools: ToolsCapability | None = None

"""Present if the server offers any tools to call."""

completions: CompletionsCapability | None = None

"""Present if the server offers autocompletion suggestions for prompts and resources."""

model_config = ConfigDict(extra="allow")MCP Clients also provide capabilities for servers. A server can initiate requests such as elicitation (to request more information from the user), roots (indicating operational context/scope and resource boundaries) and sampling (to leverage LLM/AI capabilities i.e servers request completions). The capability negotiation happens during session initialization.

class ClientCapabilities(BaseModel):

"""Capabilities a client may support."""

experimental: dict[str, dict[str, Any]] | None = None

"""Experimental, non-standard capabilities that the client supports."""

sampling: SamplingCapability | None = None

"""Present if the client supports sampling from an LLM."""

elicitation: ElicitationCapability | None = None

"""Present if the client supports elicitation from the user."""

roots: RootsCapability | None = None

"""Present if the client supports listing roots."""

model_config = ConfigDict(extra="allow")

The client issues requests via HTTP POST, while the server uses an open stream after the client issues an HTTP GET to the MCP endpoint.

async def _handle_get_request(self, request: Request, send: Send) -> None:

"""

Handle GET request to establish SSE.

This allows the server to communicate to the client without the client

first sending data via HTTP POST. The server can send JSON-RPC requests

and notifications on this stream.

"""StreamableHTTPServerTransport

MCP clients can remain connected to multiple SSE streams simultaneously, with each stream belonging to a different request in the same session. MCP also supports message replay (resumability).

# Handle resumability: check for Last-Event-ID header

if last_event_id := request.headers.get(LAST_EVENT_ID_HEADER):

await self._replay_events(last_event_id, request, send)

return

Note that MCP servers do not use session IDs for authentication; only for message routing.

Building a simple MCP workflow consists of connecting an MCP client to an MCP server, discovering and calling tools (guided by the foundation model), and feeding the results back into the injected context.

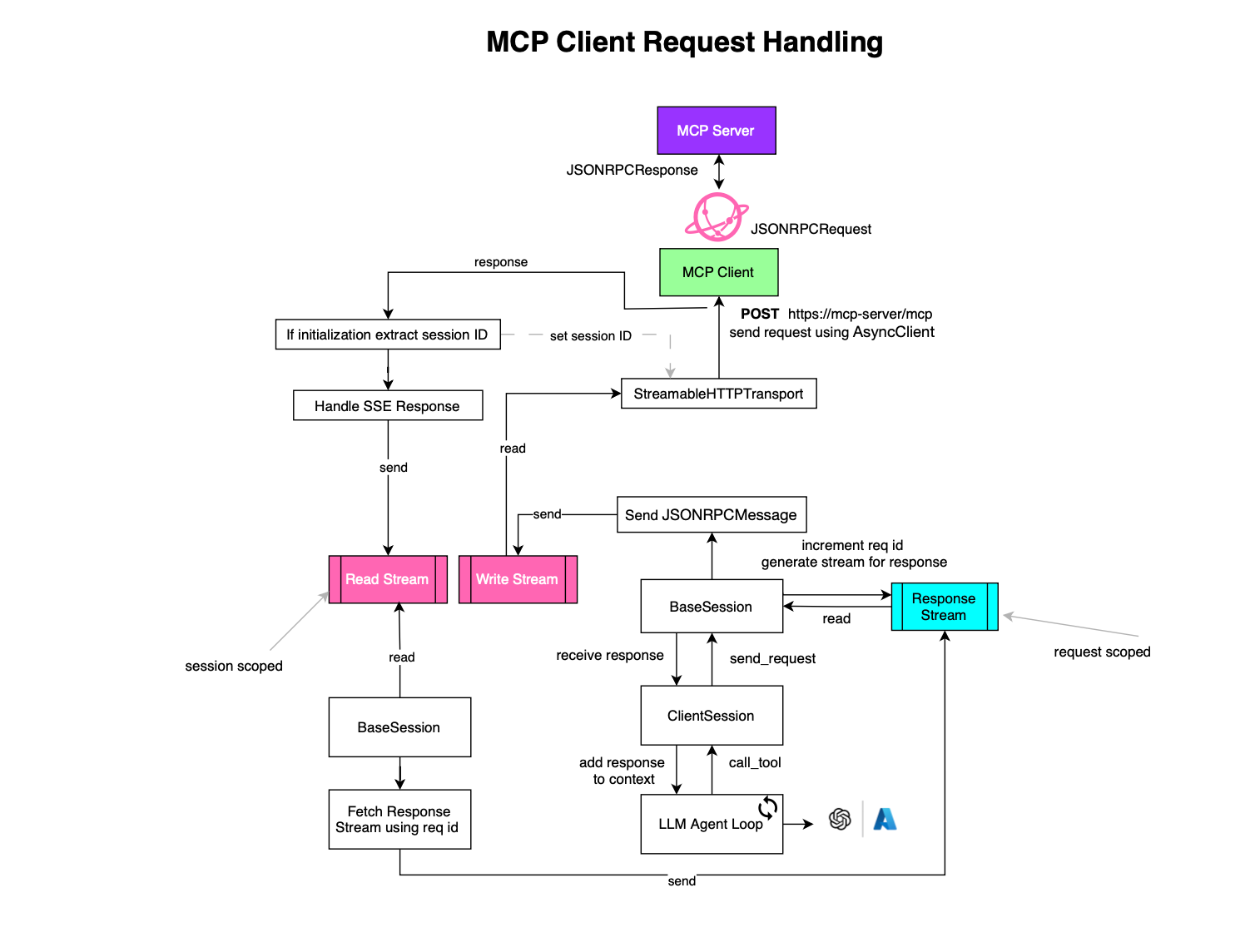

The process of handling an MCP request works as follows: the MCP client prepares a request (e.g., InitializeRequest or CallToolRequest) via POST /mcp:

result = await self.send_request(

types.ClientRequest(

types.CallToolRequest(

method="tools/call",

params=types.CallToolRequestParams(

name=name,

arguments=arguments,

),

)

),

types.CallToolResult,

request_read_timeout_seconds=read_timeout_seconds,

progress_callback=progress_callback,

)A request id is generated by incrementing the current one (there is no race condition here, as the event loop consists of a single thread handling asynchronous jobs). An in-memory response stream is then created and indexed:

async def send_request(

self,

request: SendRequestT,

result_type: type[ReceiveResultT],

request_read_timeout_seconds: timedelta | None = None,

metadata: MessageMetadata = None,

progress_callback: ProgressFnT | None = None,

) -> ReceiveResultT:

request_id = self._request_id

self._request_id = request_id + 1

response_stream, response_stream_reader = anyio.create_memory_object_stream[JSONRPCResponse | JSONRPCError](1)

self._response_streams[request_id] = response_streamAnd the caller coroutine waits for the stream to be filled before returning:

try:

with anyio.fail_after(timeout):

response_or_error = await response_stream_reader.receive()A JSON-RPC request is created and placed in the session write stream:

try:

jsonrpc_request = JSONRPCRequest(

jsonrpc="2.0",

id=request_id,

**request_data,

)

await self._write_stream.send(SessionMessage(message=JSONRPCMessage(jsonrpc_request), metadata=metadata))

Each connection or session is represented by a StreamableHTTPTransport object. Read and write streams, used for reading/sending messages from/to the MCP server, are created during initialization.

async def streamablehttp_client(

url: str,

headers: dict[str, str] | None = None,

timeout: float | timedelta = 30,

sse_read_timeout: float | timedelta = 60 * 5,

terminate_on_close: bool = True,

httpx_client_factory: McpHttpClientFactory = create_mcp_http_client,

auth: httpx.Auth | None = None,

) -> AsyncGenerator[

tuple[

MemoryObjectReceiveStream[SessionMessage | Exception],

MemoryObjectSendStream[SessionMessage],

GetSessionIdCallback,

],

None,

]:

transport = StreamableHTTPTransport(url, headers, timeout, sse_read_timeout, auth)

read_stream_writer, read_stream = anyio.create_memory_object_stream[SessionMessage | Exception](0)

write_stream, write_stream_reader = anyio.create_memory_object_stream[SessionMessage](0)

When a message is placed in the write stream, a subscriber on the stream consumes it:

async def post_writer(

self,

client: httpx.AsyncClient,

write_stream_reader: StreamReader,

read_stream_writer: StreamWriter,

write_stream: MemoryObjectSendStream[SessionMessage],

start_get_stream: Callable[[], None],

tg: TaskGroup,

) -> None:

"""Handle writing requests to the server."""

try:

async with write_stream_reader:

async for session_message in write_stream_reader:

message = session_message.message

metadata = (

session_message.metadata

if isinstance(session_message.metadata, ClientMessageMetadata)

else None

)StreamableHTTPTransport

and then sends it via POST request:

async def _handle_post_request(self, ctx: RequestContext) -> None:

"""Handle a POST request with response processing."""

headers = self._prepare_request_headers(ctx.headers)

message = ctx.session_message.message

is_initialization = self._is_initialization_request(message)

async with ctx.client.stream(

"POST",

self.url,

json=message.model_dump(by_alias=True, mode="json", exclude_none=True),

headers=headers,

) as response:Both SSE or plain JSON responses are supported.

if content_type.startswith(JSON):

await self._handle_json_response(response, ctx.read_stream_writer, is_initialization)

elif content_type.startswith(SSE):

await self._handle_sse_response(response, ctx, is_initialization)StreamableHTTPTransport

When JSON responses are enabled, the SSE stream is not created and events or messages can be consumed directly:

async def _handle_json_response(

self,

response: httpx.Response,

read_stream_writer: StreamWriter,

is_initialization: bool = False,

) -> None:

"""Handle JSON response from the server."""

try:

content = await response.aread()

message = JSONRPCMessage.model_validate_json(content)

# Extract protocol version from initialization response

if is_initialization:

self._maybe_extract_protocol_version_from_message(message)

session_message = SessionMessage(message)

await read_stream_writer.send(session_message)

except Exception as exc:

logger.exception("Error parsing JSON response")

await read_stream_writer.send(exc)In case of SSE response from the server, an EventSource object is used to subscribe to the SSE stream:

event_source = EventSource(response)

async for sse in event_source.aiter_sse():

is_complete = await self._handle_sse_event(

sse,

ctx.read_stream_writer,

resumption_callback=(ctx.metadata.on_resumption_token_update if ctx.metadata else None),

is_initialization=is_initialization,

)

# If the SSE event indicates completion, like returning respose/error

# break the loop

if is_complete:

breakOnce the server responds, the result is placed in the read stream:

"""Handle an SSE event, returning True if the response is complete."""

if sse.event == "message":

try:

message = JSONRPCMessage.model_validate_json(sse.data)

logger.debug(f"SSE message: {message}")

# Extract protocol version from initialization response

if is_initialization:

self._maybe_extract_protocol_version_from_message(message)

# If this is a response and we have original_request_id, replace it

if original_request_id is not None and isinstance(message.root, JSONRPCResponse | JSONRPCError):

message.root.id = original_request_id

session_message = SessionMessage(message)

await read_stream_writer.send(session_message)The ClientSession object defines an event loop that consumes incoming messages, reads the request id, search the indexed response stream and places the response there:

async def _receive_loop(self) -> None:

async with (

self._read_stream,

self._write_stream,

):

...

stream = self._response_streams.pop(message.message.root.id, None)

if stream:

await stream.send(message.message.root)

else:

await self._handle_incoming(

RuntimeError(f"Received response with an unknown request ID: {message}")

)

Now the coroutine will resume and the caller can use the tool call result as additional context for the foundation model.

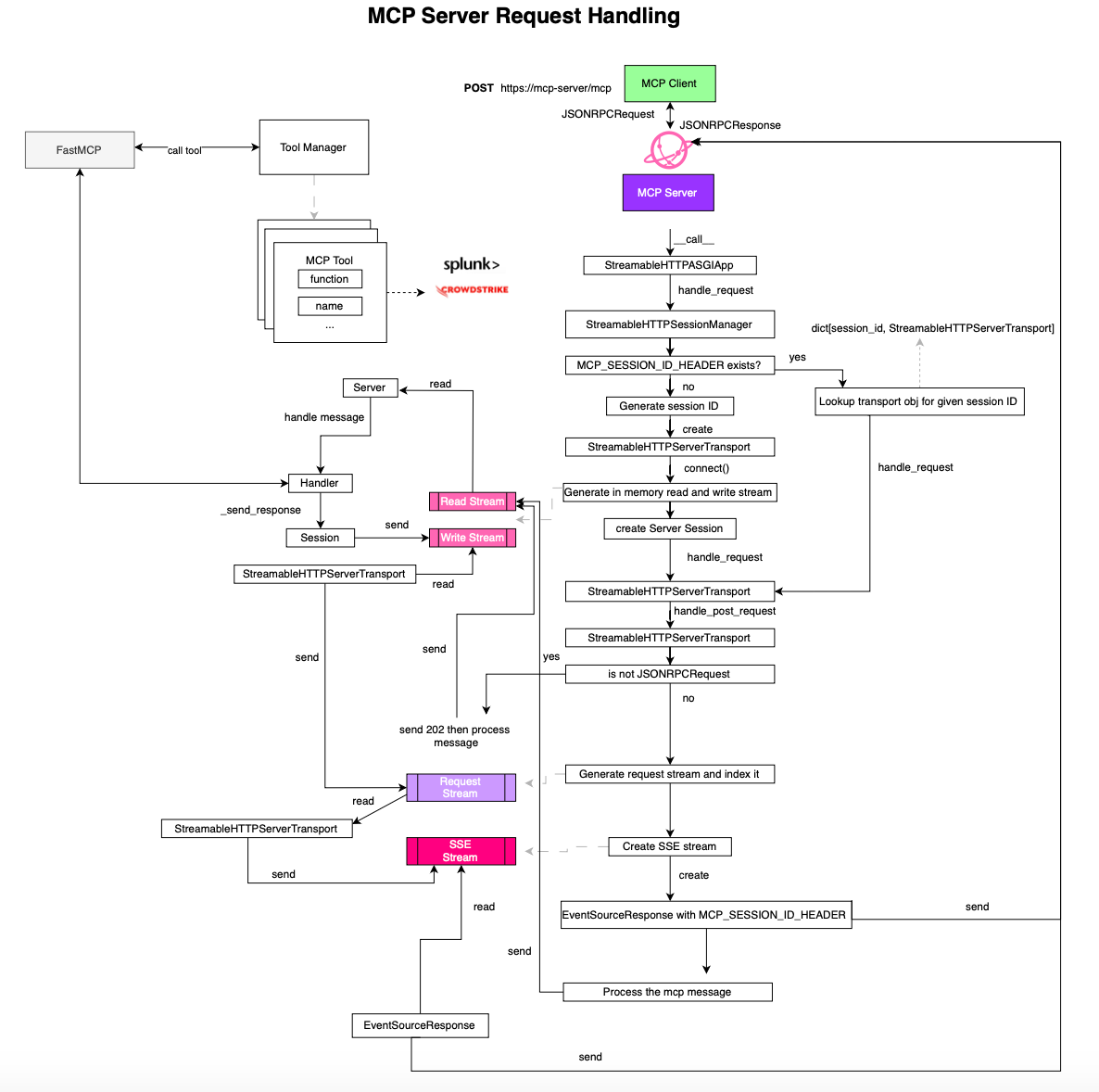

On the server side, when the main server ( specifically the ASGI handler) receives the request, it passes it to the session manager (StreamableHTTPSessionManager) for routing:

class StreamableHTTPASGIApp:

"""

ASGI application for Streamable HTTP server transport.

"""

def __init__(self, session_manager: StreamableHTTPSessionManager):

self.session_manager = session_manager

async def __call__(self, scope: Scope, receive: Receive, send: Send) -> None:

await self.session_manager.handle_request(scope, receive, send)

If a session id is present in the headers, the request is delegated to the existing transport instance (StreamableHTTPServerTransport). Otherwise, a new session ID and transport object are created:

async def _handle_stateful_request(

self,

scope: Scope,

receive: Receive,

send: Send,

) -> None:

"""

Process request in stateful mode - maintaining session state between requests.

Args:

scope: ASGI scope

receive: ASGI receive function

send: ASGI send function

"""

request = Request(scope, receive)

request_mcp_session_id = request.headers.get(MCP_SESSION_ID_HEADER)

# Existing session case

if request_mcp_session_id is not None and request_mcp_session_id in self._server_instances:

transport = self._server_instances[request_mcp_session_id]

logger.debug("Session already exists, handling request directly")

await transport.handle_request(scope, receive, send)

return

if request_mcp_session_id is None:

# New session case

logger.debug("Creating new transport")

async with self._session_creation_lock:

new_session_id = uuid4().hex

http_transport = StreamableHTTPServerTransport(

mcp_session_id=new_session_id,

is_json_response_enabled=self.json_response,

event_store=self.event_store, # May be None (no resumability)

security_settings=self.security_settings,

)

assert http_transport.mcp_session_id is not None

self._server_instances[http_transport.mcp_session_id] = http_transport

logger.info(f"Created new transport with session ID: {new_session_id}")

Each connection gets a session id, which the client uses for subsequent requests (by including it in headers).

StreamableHTTPServerTransport supports JSON responses (instead of SSE streams) when enabled, but the default isFalse.

Similar to client side, each connection provides read and write streams for inbound and outbound messages.

async def run_server(*, task_status: TaskStatus[None] = anyio.TASK_STATUS_IGNORED) -> None:

async with http_transport.connect() as streams:

read_stream, write_stream = streams

task_status.started()POST requests are processed by _handle_post_request, which validates headers, content types, and JSONRPCMessage. If the message is not a JSONRPCRequest (e.g., an InitializedNotification), it returns 202 Acceptedimmediately and places the message in the read stream for processing.

async def _handle_post_request(self, scope: Scope, request: Request, receive: Receive, send: Send) -> None:

"""Handle POST requests containing JSON-RPC messages."""

writer = self._read_stream_writer

if writer is None:

raise ValueError("No read stream writer available. Ensure connect() is called first.")

try:

# Check Accept headers

has_json, has_sse = self._check_accept_headers(request)

if not (has_json and has_sse):

response = self._create_error_response(

("Not Acceptable: Client must accept both application/json and text/event-stream"),

HTTPStatus.NOT_ACCEPTABLE,

)

await response(scope, receive, send)

return

# Validate Content-Type

if not self._check_content_type(request):

response = self._create_error_response(

"Unsupported Media Type: Content-Type must be application/json",

HTTPStatus.UNSUPPORTED_MEDIA_TYPE,

)

await response(scope, receive, send)

return

# Parse the body - only read it once

body = await request.body()

try:

raw_message = json.loads(body)

except json.JSONDecodeError as e:

response = self._create_error_response(f"Parse error: {str(e)}", HTTPStatus.BAD_REQUEST, PARSE_ERROR)

await response(scope, receive, send)

return

try:

message = JSONRPCMessage.model_validate(raw_message)

except ValidationError as e:

response = self._create_error_response(

f"Validation error: {str(e)}",

HTTPStatus.BAD_REQUEST,

INVALID_PARAMS,

)

await response(scope, receive, send)

return

# Check if this is an initialization request

is_initialization_request = isinstance(message.root, JSONRPCRequest) and message.root.method == "initialize"

if is_initialization_request:

# Check if the server already has an established session

if self.mcp_session_id:

# Check if request has a session ID

request_session_id = self._get_session_id(request)

# If request has a session ID but doesn't match, return 404

if request_session_id and request_session_id != self.mcp_session_id:

response = self._create_error_response(

"Not Found: Invalid or expired session ID",

HTTPStatus.NOT_FOUND,

)

await response(scope, receive, send)

return

elif not await self._validate_request_headers(request, send):

returnOtherwise, it creates a stream specific to the request, indexed by the request id:

# Extract the request ID outside the try block for proper scope

request_id = str(message.root.id)

# Register this stream for the request ID

self._request_streams[request_id] = anyio.create_memory_object_stream[EventMessage](0)

request_stream_reader = self._request_streams[request_id][1]If JSON response is enabled, process the message immediately by placing it in the read stream of the session:

if self.is_json_response_enabled:

# Process the message

metadata = ServerMessageMetadata(request_context=request)

session_message = SessionMessage(message, metadata=metadata)

await writer.send(session_message)

try:

# Process messages from the request-specific stream

# We need to collect all messages until we get a response

response_message = None

# Use similar approach to SSE writer for consistency

async for event_message in request_stream_reader:

# If it's a response, this is what we're waiting for

if isinstance(event_message.message.root, JSONRPCResponse | JSONRPCError):

response_message = event_message.message

break

# For notifications and request, keep waiting

else:

logger.debug(f"received: {event_message.message.root.method}")

# At this point we should have a response

if response_message:

# Create JSON response

response = self._create_json_response(response_message)

await response(scope, receive, send)

else:

# This shouldn't happen in normal operation

logger.error("No response message received before stream closed")

response = self._create_error_response(

"Error processing request: No response received",

HTTPStatus.INTERNAL_SERVER_ERROR,

)

await response(scope, receive, send)

except Exception:

logger.exception("Error processing JSON response")

response = self._create_error_response(

"Error processing request",

HTTPStatus.INTERNAL_SERVER_ERROR,

INTERNAL_ERROR,

)

await response(scope, receive, send)

finally:

await self._clean_up_memory_streams(request_id)Otherwise, it creates an SSE stream and use the EventSourceResponse object to handle SSE responses:

# Create SSE stream

sse_stream_writer, sse_stream_reader = anyio.create_memory_object_stream[dict[str, str]](0)

async def sse_writer():

# Get the request ID from the incoming request message

try:

async with sse_stream_writer, request_stream_reader:

# Process messages from the request-specific stream

async for event_message in request_stream_reader:

# Build the event data

event_data = self._create_event_data(event_message)

await sse_stream_writer.send(event_data)

# If response, remove from pending streams and close

if isinstance(

event_message.message.root,

JSONRPCResponse | JSONRPCError,

):

break

except Exception:

logger.exception("Error in SSE writer")

finally:

logger.debug("Closing SSE writer")

await self._clean_up_memory_streams(request_id)

# Create and start EventSourceResponse

# SSE stream mode (original behavior)

# Set up headers

headers = {

"Cache-Control": "no-cache, no-transform",

"Connection": "keep-alive",

"Content-Type": CONTENT_TYPE_SSE,

**({MCP_SESSION_ID_HEADER: self.mcp_session_id} if self.mcp_session_id else {}),

}

response = EventSourceResponse(

content=sse_stream_reader,

data_sender_callable=sse_writer,

headers=headers,

)And once connection is upgraded to be streamable, place the request in the read stream:

# Start the SSE response (this will send headers immediately)

try:

# First send the response to establish the SSE connection

async with anyio.create_task_group() as tg:

tg.start_soon(response, scope, receive, send)

# Then send the message to be processed by the server

metadata = ServerMessageMetadata(request_context=request)

session_message = SessionMessage(message, metadata=metadata)

await writer.send(session_message)Similar to the client side, there is an event loop _receive_loop in BaseSession consuming incoming messages. They are processed using _received_request using _handle_incoming.

async with anyio.create_task_group() as tg:

async for message in session.incoming_messages:

logger.debug("Received message: %s", message)

tg.start_soon(

self._handle_message,

message,

session,

lifespan_context,

raise_exceptions,

)

As said, when the server starts, an event loop task continuously drains incoming messages and delegates them to the appropriate request or notification handler (_handle_message).

async def _handle_message(

self,

message: RequestResponder[types.ClientRequest, types.ServerResult] | types.ClientNotification | Exception,

session: ServerSession,

lifespan_context: LifespanResultT,

raise_exceptions: bool = False,

):

with warnings.catch_warnings(record=True) as w:

# TODO(Marcelo): We should be checking if message is Exception here.

match message: # type: ignore[reportMatchNotExhaustive]

case RequestResponder(request=types.ClientRequest(root=req)) as responder:

with responder:

await self._handle_request(message, req, session, lifespan_context, raise_exceptions)

case types.ClientNotification(root=notify):

await self._handle_notification(notify)

for warning in w:

logger.info("Warning: %s: %s", warning.category.__name__, warning.message)The handling of the request is shown below. It delegates to the request handler for processing.

async def _handle_request(

self,

message: RequestResponder[types.ClientRequest, types.ServerResult],

req: Any,

session: ServerSession,

lifespan_context: LifespanResultT,

raise_exceptions: bool,

):

logger.info("Processing request of type %s", type(req).__name__)

if handler := self.request_handlers.get(type(req)): # type: ignore

logger.debug("Dispatching request of type %s", type(req).__name__)

token = None

try:

# Extract request context from message metadata

request_data = None

if message.message_metadata is not None and isinstance(message.message_metadata, ServerMessageMetadata):

request_data = message.message_metadata.request_context

# Set our global state that can be retrieved via

# app.get_request_context()

token = request_ctx.set(

RequestContext(

message.request_id,

message.request_meta,

session,

lifespan_context,

request=request_data,

)

)

response = await handler(req)

except McpError as err:

response = err.error

except anyio.get_cancelled_exc_class():

logger.info(

"Request %s cancelled - duplicate response suppressed",

message.request_id,

)

return

except Exception as err:

if raise_exceptions:

raise err

response = types.ErrorData(code=0, message=str(err), data=None)

finally:

# Reset the global state after we are done

if token is not None:

request_ctx.reset(token)

await message.respond(response)

else:

await message.respond(

types.ErrorData(

code=types.METHOD_NOT_FOUND,

message="Method not found",

)

)

logger.debug("Response sent")In case of a tool call request it would delegate to TooManager via FastMCP (self._tool_manager.call_tool()

async def call_tool(self, name: str, arguments: dict[str, Any]) -> Sequence[ContentBlock] | dict[str, Any]:

"""Call a tool by name with arguments."""

context = self.get_context()

return await self._tool_manager.call_tool(name, arguments, context=context, convert_result=True)

Once a response is ready, it is placed in the _write_stream.

async def _send_response(self, request_id: RequestId, response: SendResultT | ErrorData) -> None:

if isinstance(response, ErrorData):

jsonrpc_error = JSONRPCError(jsonrpc="2.0", id=request_id, error=response)

session_message = SessionMessage(message=JSONRPCMessage(jsonrpc_error))

await self._write_stream.send(session_message)

else:

jsonrpc_response = JSONRPCResponse(

jsonrpc="2.0",

id=request_id,

result=response.model_dump(by_alias=True, mode="json", exclude_none=True),

)

session_message = SessionMessage(message=JSONRPCMessage(jsonrpc_response))

await self._write_stream.send(session_message)

Another task (connect() in StreamableHTTPServerTransport) drains this write stream, matches the response to the correct request stream via the request id:

async def connect(

self,

) -> AsyncGenerator[

tuple[

MemoryObjectReceiveStream[SessionMessage | Exception],

MemoryObjectSendStream[SessionMessage],

],

None,

]:

"""Context manager that provides read and write streams for a connection.

Yields:

Tuple of (read_stream, write_stream) for bidirectional communication

"""

# Create the memory streams for this connection

read_stream_writer, read_stream = anyio.create_memory_object_stream[SessionMessage | Exception](0)

write_stream, write_stream_reader = anyio.create_memory_object_stream[SessionMessage](0)

# Store the streams

self._read_stream_writer = read_stream_writer

self._read_stream = read_stream

self._write_stream_reader = write_stream_reader

self._write_stream = write_stream

# Start a task group for message routing

async with anyio.create_task_group() as tg:

# Create a message router that distributes messages to request streams

async def message_router():

try:

async for session_message in write_stream_reader:

# Determine which request stream(s) should receive this message

message = session_message.message

target_request_id = None

# Check if this is a response

if isinstance(message.root, JSONRPCResponse | JSONRPCError):

response_id = str(message.root.id)

# If this response is for an existing request stream,

# send it there

target_request_id = response_id

else:

# Extract related_request_id from meta if it exists

if (

session_message.metadata is not None

and isinstance(

session_message.metadata,

ServerMessageMetadata,

)

and session_message.metadata.related_request_id is not None

):

target_request_id = str(session_message.metadata.related_request_id)

request_stream_id = target_request_id if target_request_id is not None else GET_STREAM_KEY

# Store the event if we have an event store,

# regardless of whether a client is connected

# messages will be replayed on the re-connect

event_id = None

if self._event_store:

event_id = await self._event_store.store_event(request_stream_id, message)

logger.debug(f"Stored {event_id} from {request_stream_id}")

if request_stream_id in self._request_streams:

try:

# Send both the message and the event ID

await self._request_streams[request_stream_id][0].send(EventMessage(message, event_id))

except (

anyio.BrokenResourceError,

anyio.ClosedResourceError,

):

# Stream might be closed, remove from registry

self._request_streams.pop(request_stream_id, None)

else:

logging.debug(

f"""Request stream {request_stream_id} not found

for message. Still processing message as the client

might reconnect and replay."""

)

except Exception:

logger.exception("Error in message router")

# Start the message router

tg.start_soon(message_router)And finally delivers the response back to the client over SSE or JSON only.

# Create SSE stream

sse_stream_writer, sse_stream_reader = anyio.create_memory_object_stream[dict[str, str]](0)

async def sse_writer():

# Get the request ID from the incoming request message

try:

async with sse_stream_writer, request_stream_reader:

# Process messages from the request-specific stream

async for event_message in request_stream_reader:

# Build the event data

event_data = self._create_event_data(event_message)

await sse_stream_writer.send(event_data)

# If response, remove from pending streams and close

if isinstance(

event_message.message.root,

JSONRPCResponse | JSONRPCError,

):

break

except Exception:

logger.exception("Error in SSE writer")

finally:

logger.debug("Closing SSE writer")

await self._clean_up_memory_streams(request_id)Empowering applications with LLMs, AI and MCP naturally comes with challenges. Applications become more and more complex as they implement intelligent workflows, and deploying guards against attacks becomes critical. Since tools are essentially executable functions, they can represent arbitrary code execution if not secured properly. This section discusses how to implement consent and authorization flows using MCP facilities.

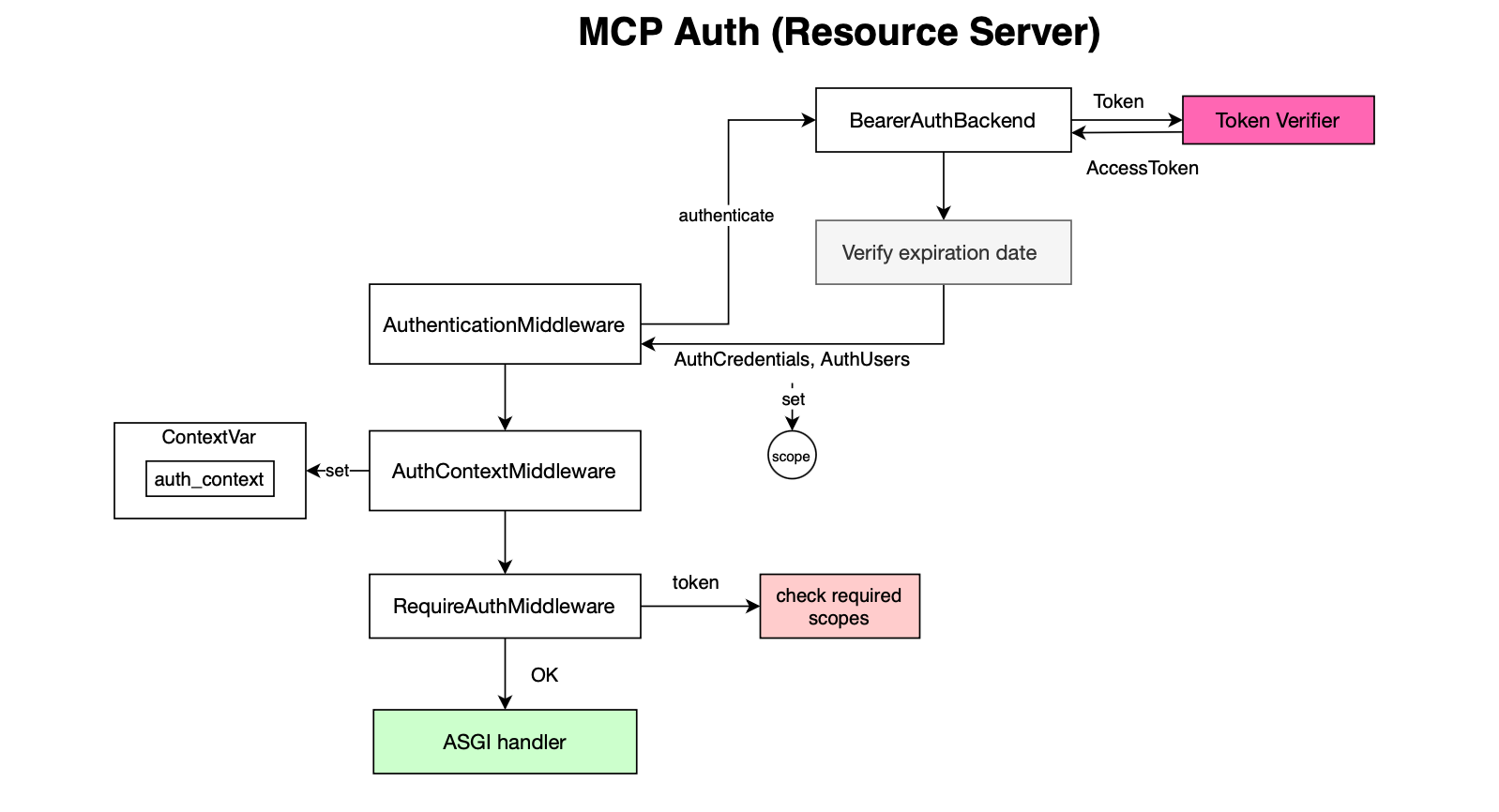

Whether publicly exposed or not, one may want to secure the MCP server API. MCP supports bearer token verification on each request before handling the request.

# Set up auth if configured

if self.settings.auth:

required_scopes = self.settings.auth.required_scopes or []

# Add auth middleware if token verifier is available

if self._token_verifier:

middleware = [

Middleware(

AuthenticationMiddleware,

backend=BearerAuthBackend(self._token_verifier),

),

Middleware(AuthContextMiddleware),

]

The flow works as follows: the request passes through the AuthenticationMiddleware

class AuthenticationMiddleware:

def __init__(

self,

app: ASGIApp,

backend: AuthenticationBackend,

on_error: Callable[[HTTPConnection, AuthenticationError], Response] | None = None,

) -> None:

self.app = app

self.backend = backend

self.on_error: Callable[[HTTPConnection, AuthenticationError], Response] = (

on_error if on_error is not None else self.default_on_error

)

async def __call__(self, scope: Scope, receive: Receive, send: Send) -> None:

if scope["type"] not in ["http", "websocket"]:

await self.app(scope, receive, send)

return

conn = HTTPConnection(scope)

try:

auth_result = await self.backend.authenticate(conn)

except AuthenticationError as exc:

response = self.on_error(conn, exc)

if scope["type"] == "websocket":

await send({"type": "websocket.close", "code": 1000})

else:

await response(scope, receive, send)

return

if auth_result is None:

auth_result = AuthCredentials(), UnauthenticatedUser()

scope["auth"], scope["user"] = auth_result

await self.app(scope, receive, send)which delegates to the token verifier provided by the developer:

class BearerAuthBackend(AuthenticationBackend):

"""

Authentication backend that validates Bearer tokens using a TokenVerifier.

"""

def __init__(self, token_verifier: TokenVerifier):

self.token_verifier = token_verifier

async def authenticate(self, conn: HTTPConnection):

auth_header = next(

(conn.headers.get(key) for key in conn.headers if key.lower() == "authorization"),

None,

)

if not auth_header or not auth_header.lower().startswith("bearer "):

return None

token = auth_header[7:] # Remove "Bearer " prefixOnce the token is verified the context is set using AuthContextMiddleware:

class AuthContextMiddleware:

"""

Middleware that extracts the authenticated user from the request

and sets it in a contextvar for easy access throughout the request lifecycle.

This middleware should be added after the AuthenticationMiddleware in the

middleware stack to ensure that the user is properly authenticated before

being stored in the context.

"""

def __init__(self, app: ASGIApp):

self.app = app

async def __call__(self, scope: Scope, receive: Receive, send: Send):

user = scope.get("user")

if isinstance(user, AuthenticatedUser):

# Set the authenticated user in the contextvar

token = auth_context_var.set(user)

try:

await self.app(scope, receive, send)

finally:

auth_context_var.reset(token)

else:

# No authenticated user, just process the request

await self.app(scope, receive, send)Finally, the configured scopes are then checked:

routes.append(

Route(

self.settings.streamable_http_path,

endpoint=RequireAuthMiddleware(streamable_http_app, required_scopes, resource_metadata_url),

)

)class RequireAuthMiddleware:

...

async def __call__(self, scope: Scope, receive: Receive, send: Send) -> None:

auth_user = scope.get("user")

if not isinstance(auth_user, AuthenticatedUser):

await self._send_auth_error(

send, status_code=401, error="invalid_token", description="Authentication required"

)

return

auth_credentials = scope.get("auth")

for required_scope in self.required_scopes:

# auth_credentials should always be provided; this is just paranoia

if auth_credentials is None or required_scope not in auth_credentials.scopes:

await self._send_auth_error(

send, status_code=403, error="insufficient_scope", description=f"Required scope: {required_scope}"

)

return

await self.app(scope, receive, send)One may want to apply permissions at the tool level. For example, security analysts may require different privileges than other users. Since the context is populated, you can implement tool-level security checks in addition to endpoint-level scope enforcement using for instance using FastMCP middleware.

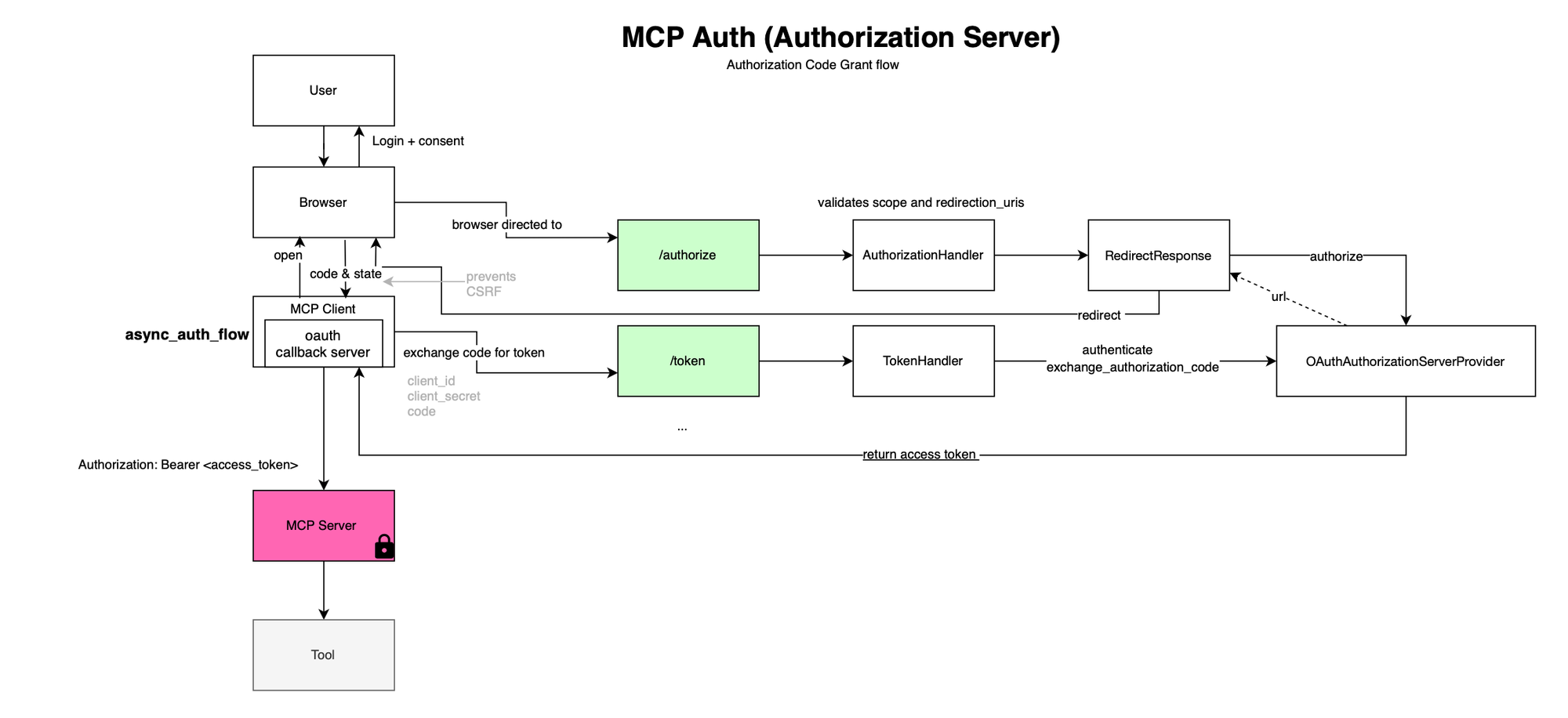

MCP also implements the Authorization Code Grant flow. In this case, the MCP server acts as the authorization server.

# Add auth endpoints if auth server provider is configured

if self._auth_server_provider:

from mcp.server.auth.routes import create_auth_routes

routes.extend(

create_auth_routes(

provider=self._auth_server_provider,

issuer_url=self.settings.auth.issuer_url,

service_documentation_url=self.settings.auth.service_documentation_url,

client_registration_options=self.settings.auth.client_registration_options,

revocation_options=self.settings.auth.revocation_options,

)

)The /authorize and /token endpoints are deployed in the app.

def create_auth_routes(

provider: OAuthAuthorizationServerProvider[Any, Any, Any],

issuer_url: AnyHttpUrl,

service_documentation_url: AnyHttpUrl | None = None,

client_registration_options: ClientRegistrationOptions | None = None,

revocation_options: RevocationOptions | None = None,

) -> list[Route]:

validate_issuer_url(issuer_url)

client_registration_options = client_registration_options or ClientRegistrationOptions()

revocation_options = revocation_options or RevocationOptions()

metadata = build_metadata(

issuer_url,

service_documentation_url,

client_registration_options,

revocation_options,

)

client_authenticator = ClientAuthenticator(provider)

# Create routes

# Allow CORS requests for endpoints meant to be hit by the OAuth client

# (with the client secret). This is intended to support things like MCP Inspector,

# where the client runs in a web browser.

routes = [

Route(

"/.well-known/oauth-authorization-server",

endpoint=cors_middleware(

MetadataHandler(metadata).handle,

["GET", "OPTIONS"],

),

methods=["GET", "OPTIONS"],

),

Route(

AUTHORIZATION_PATH,

# do not allow CORS for authorization endpoint;

# clients should just redirect to this

endpoint=AuthorizationHandler(provider).handle,

methods=["GET", "POST"],

),

Route(

TOKEN_PATH,

endpoint=cors_middleware(

TokenHandler(provider, client_authenticator).handle,

["POST", "OPTIONS"],

),

methods=["POST", "OPTIONS"],

),

]The MCP client redirects the user to the /authorize endpoint, retrieves an authorization code, and exchanges it for an access token via /token .

async def _send_handling_auth(

self,

request: Request,

auth: Auth,

follow_redirects: bool,

history: list[Response],

) -> Response:

auth_flow = auth.async_auth_flow(request)

....

async def async_auth_flow(self, request: httpx.Request) -> AsyncGenerator[httpx.Request, httpx.Response]:

"""HTTPX auth flow integration."""

async with self.context.lock:

if not self._initialized:

await self._initialize()

# Capture protocol version from request headers

self.context.protocol_version = request.headers.get(MCP_PROTOCOL_VERSION)

if not self.context.is_token_valid() and self.context.can_refresh_token():

# Try to refresh token

refresh_request = await self._refresh_token()

refresh_response = yield refresh_request

if not await self._handle_refresh_response(refresh_response):

# Refresh failed, need full re-authentication

self._initialized = False

if self.context.is_token_valid():

self._add_auth_header(request)

response = yield request

if response.status_code == 401:

# Perform full OAuth flow

try:

# OAuth flow must be inline due to generator constraints

# Step 1: Discover protected resource metadata (RFC9728 with WWW-Authenticate support)

discovery_request = await self._discover_protected_resource(response)

discovery_response = yield discovery_request

await self._handle_protected_resource_response(discovery_response)

# Step 2: Discover OAuth metadata (with fallback for legacy servers)

discovery_urls = self._get_discovery_urls()

for url in discovery_urls:

oauth_metadata_request = self._create_oauth_metadata_request(url)

oauth_metadata_response = yield oauth_metadata_request

if oauth_metadata_response.status_code == 200:

try:

await self._handle_oauth_metadata_response(oauth_metadata_response)

break

except ValidationError:

continue

elif oauth_metadata_response.status_code < 400 or oauth_metadata_response.status_code >= 500:

break # Non-4XX error, stop trying

# Step 3: Register client if needed

registration_request = await self._register_client()

if registration_request:

registration_response = yield registration_request

await self._handle_registration_response(registration_response)

# Step 4: Perform authorization

auth_code, code_verifier = await self._perform_authorization()

# Step 5: Exchange authorization code for tokens

token_request = await self._exchange_token(auth_code, code_verifier)

token_response = yield token_request

await self._handle_token_response(token_response)

except Exception:

logger.exception("OAuth flow error")

raise

# Retry with new tokens

self._add_auth_header(request)

yield request

class OAuthClientProvider(httpx.Auth):

"""

OAuth2 authentication for httpx.

Handles OAuth flow with automatic client registration and token storage.

"""

requires_response_body = True

def __init__(

self,

server_url: str,

client_metadata: OAuthClientMetadata,

storage: TokenStorage,

redirect_handler: Callable[[str], Awaitable[None]],

callback_handler: Callable[[], Awaitable[tuple[str, str | None]]],

timeout: float = 300.0,

):

...

async def _perform_authorization(self) -> tuple[str, str]:

"""Perform the authorization redirect and get auth code."""

if self.context.oauth_metadata and self.context.oauth_metadata.authorization_endpoint:

auth_endpoint = str(self.context.oauth_metadata.authorization_endpoint)

else:

auth_base_url = self.context.get_authorization_base_url(self.context.server_url)

auth_endpoint = urljoin(auth_base_url, "/authorize")

if not self.context.client_info:

raise OAuthFlowError("No client info available for authorization")

# Generate PKCE parameters

pkce_params = PKCEParameters.generate()

state = secrets.token_urlsafe(32)

auth_params = {

"response_type": "code",

"client_id": self.context.client_info.client_id,

"redirect_uri": str(self.context.client_metadata.redirect_uris[0]),

"state": state,

"code_challenge": pkce_params.code_challenge,

"code_challenge_method": "S256",

}

# Only include resource param if conditions are met

if self.context.should_include_resource_param(self.context.protocol_version):

auth_params["resource"] = self.context.get_resource_url() # RFC 8707

if self.context.client_metadata.scope:

auth_params["scope"] = self.context.client_metadata.scope

authorization_url = f"{auth_endpoint}?{urlencode(auth_params)}"

await self.context.redirect_handler(authorization_url)

# Wait for callback

auth_code, returned_state = await self.context.callback_handler()

if returned_state is None or not secrets.compare_digest(returned_state, state):

raise OAuthFlowError(f"State parameter mismatch: {returned_state} != {state}")

if not auth_code:

raise OAuthFlowError("No authorization code received")

# Return auth code and code verifier for token exchange

return auth_code, pkce_params.code_verifier

....

class OAuth(OAuthClientProvider):

"""

OAuth client provider for MCP servers with browser-based authentication.

This class provides OAuth authentication for FastMCP clients by opening

a browser for user authorization and running a local callback server.

"""

def __init__(

self,

mcp_url: str,

scopes: str | list[str] | None = None,

client_name: str = "FastMCP Client",

token_storage_cache_dir: Path | None = None,

additional_client_metadata: dict[str, Any] | None = None,

callback_port: int | None = None,

):

"""

Initialize OAuth client provider for an MCP server.

Args:

mcp_url: Full URL to the MCP endpoint (e.g. "http://host/mcp/sse/")

scopes: OAuth scopes to request. Can be a

space-separated string or a list of strings.

client_name: Name for this client during registration

token_storage_cache_dir: Directory for FileTokenStorage

additional_client_metadata: Extra fields for OAuthClientMetadata

callback_port: Fixed port for OAuth callback (default: random available port)

"""

parsed_url = urlparse(mcp_url)

server_base_url = f"{parsed_url.scheme}://{parsed_url.netloc}"

# Setup OAuth client

self.redirect_port = callback_port or find_available_port()

redirect_uri = f"http://localhost:{self.redirect_port}/callback"

if isinstance(scopes, list):

scopes = " ".join(scopes)

client_metadata = OAuthClientMetadata(

client_name=client_name,

redirect_uris=[AnyHttpUrl(redirect_uri)],

grant_types=["authorization_code", "refresh_token"],

response_types=["code"],

# token_endpoint_auth_method="client_secret_post",

scope=scopes,

**(additional_client_metadata or {}),

)

# Create server-specific token storage

storage = FileTokenStorage(

server_url=server_base_url, cache_dir=token_storage_cache_dir

)

# Store server_base_url for use in callback_handler

self.server_base_url = server_base_url

# Initialize parent class

super().__init__(

server_url=server_base_url,

client_metadata=client_metadata,

storage=storage,

redirect_handler=self.redirect_handler,

callback_handler=self.callback_handler,

)

async def redirect_handler(self, authorization_url: str) -> None:

"""Open browser for authorization."""

logger.info(f"OAuth authorization URL: {authorization_url}")

webbrowser.open(authorization_url)

async def callback_handler(self) -> tuple[str, str | None]:

"""Handle OAuth callback and return (auth_code, state)."""

# Create a future to capture the OAuth response

response_future = asyncio.get_running_loop().create_future()

# Create server with the future

server = create_oauth_callback_server(

port=self.redirect_port,

server_url=self.server_base_url,

response_future=response_future,

)

# Run server until response is received with timeout logic

async with anyio.create_task_group() as tg:

tg.start_soon(server.serve)

logger.info(

f"🎧 OAuth callback server started on http://localhost:{self.redirect_port}"

)

TIMEOUT = 300.0 # 5 minute timeout

try:

with anyio.fail_after(TIMEOUT):

auth_code, state = await response_future

return auth_code, state

except TimeoutError:

raise TimeoutError(f"OAuth callback timed out after {TIMEOUT} seconds")

finally:

server.should_exit = True

await asyncio.sleep(0.1) # Allow server to shutdown gracefully

tg.cancel_scope.cancel()

This token is then used to securely access MCP resources on behalf of the user.

LLM-driven agents's abilities are significantly improved once endowed with tools or function calls capabilities; injecting external data sources and API responses into the context window.

Over the past few months/years, the industry has explored different approaches to enable LLMs to generate accurate function calls. Many SOTA LLMs [https://arxiv.org/html/2412.01130v2, https://arxiv.org/pdf/2508.07575 ] are specially pre-trained/finetuned on function-calling capabilities (e.g Gorilla) i.e to generate function calls through specialized fine-tuning/ retraining of the model. The model then learns when to respond directly or invoke functions. This approach has been shown to surpass SOTA models on emitting API calls and demonstrated good adaptation to changes in API documentation.

Another approach is few-shot/in-context or zero shot learning implementations (could be combined with reasoning like using the popular ReACT framework) which uses demonstrations without requiring model parameter updates (less expensive).

Additionally, to reduce lately and accelerates the execution process when orchestrating function calls, Parallel Function Calling has been proposed recently for empowering LLMs to issue multiple function or tool calls in one LLM response.

A tool-based AI workflow typically follows this sequence: the foundation model is provided with a list of function definitions alongside the task goal. The model then responds with a structured tool call in JSON format, specifying the tool name and its parameters. The application code parses this output and invokes the corresponding function. The tool’s response is then injected back into the context, and the LLM, now working with the extended context, can either request another function call in the next iteration or signal task completion, often with additional output (e.g., an investigation result). Additionally, when using tools, LLMs will often comment on what they are doing before or while invoking tools. Finally other sophisticated prompting techniques can be used, for instance by adding complex agentic framework like ReAct.

While one could integrate tools by injecting tool descriptions into the prompt, it is advised to use tools parameter of the API request served by different LLM providers (e.g either because the underlying foundation model was fine tuned or simply to ensure one consistent way of serving function calls i.e the provider automatically include a special system prompt for you). Let's see what Function Calling looks like for different providers.

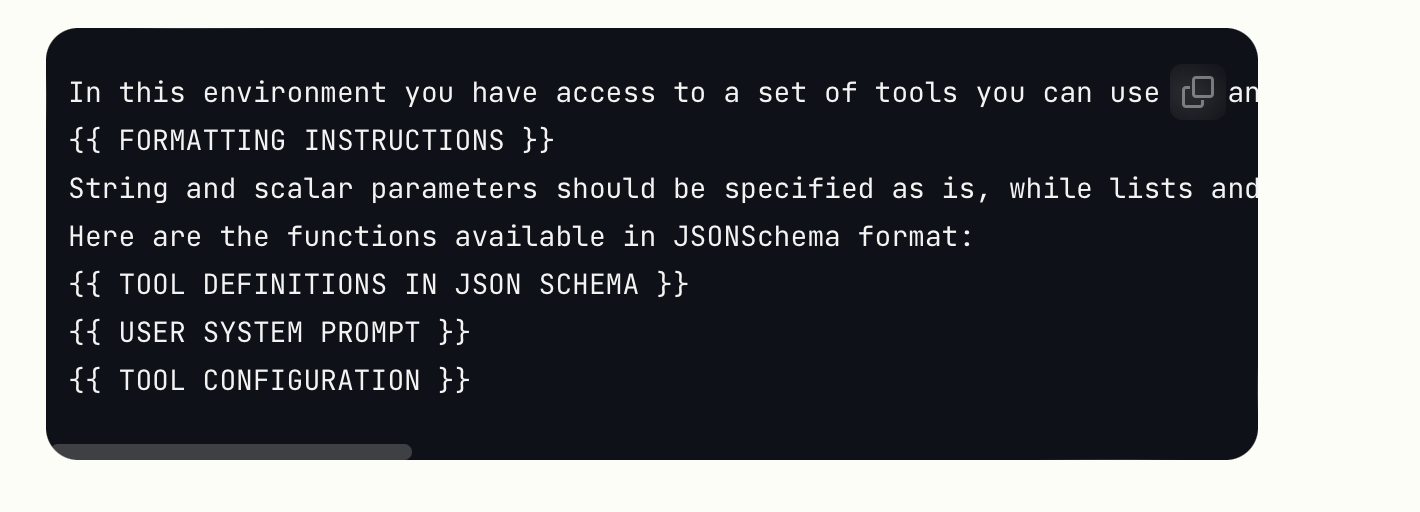

When using Claude, tools are provided in the tools parameter of the API request. Behind the scene, Anthropic utilizes a special system prompt from the tool definitions:

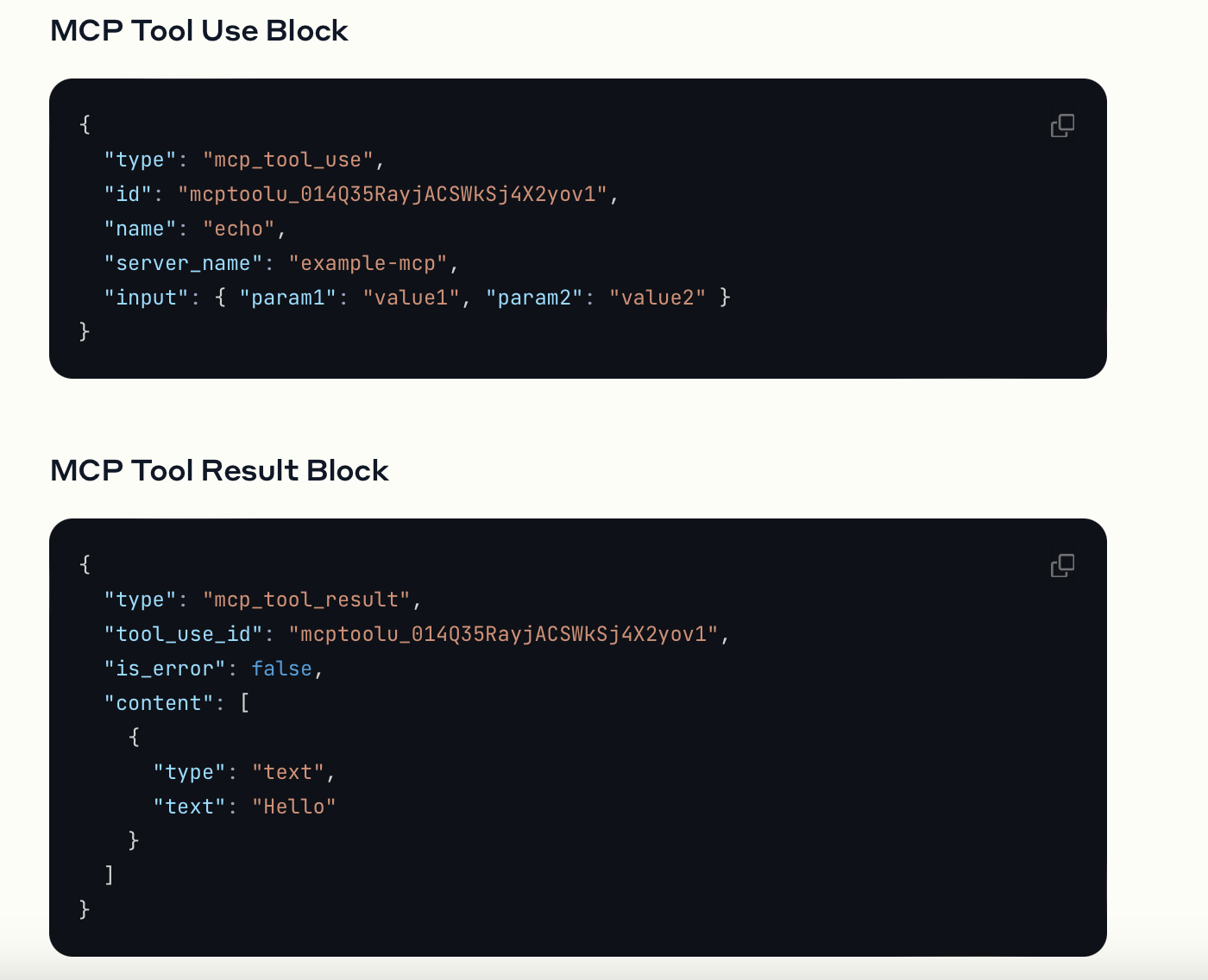

The response will have a stop_reason of tool_use and one or more tool_use content blocks:

{

"id": "msg_01Aq9w938a90dw8q",

"model": "claude-opus-4-1-20250805",

"stop_reason": "tool_use",

"role": "assistant",

"content": [

{

"type": "text",

"text": "I'll check the current weather in San Francisco for you."

},

{

"type": "tool_use",

"id": "toolu_01A09q90qw90lq917835lq9",

"name": "get_weather",

"input": {"location": "San Francisco, CA", "unit": "celsius"}

}

]

}Fore more details please refer to the documentation: https://docs.anthropic.com/en/docs/agents-and-tools/tool-use/overview

GPT-4.1 has undergone more training on effectively utilizing tools passed as arguments in an OpenAI API request, and also recommends using the tools field rather than manually injecting tool descriptions into the prompt. Here is what the output of the model looks like (call id or tool_call_id is used later to submit the function result similar to tool_use id used by Anthropic):

{

"id": "chatcmpl-",

"choices": [

{

"index": 0,

"message": {

"role": "assistant",

"content": "",

"tool_calls": [

{

"id": "call_",

"type": "function",

"function": {

"name": "...",

"arguments": "..."

}

}

]

},

"finish_reason": "tool_calls"

}

]

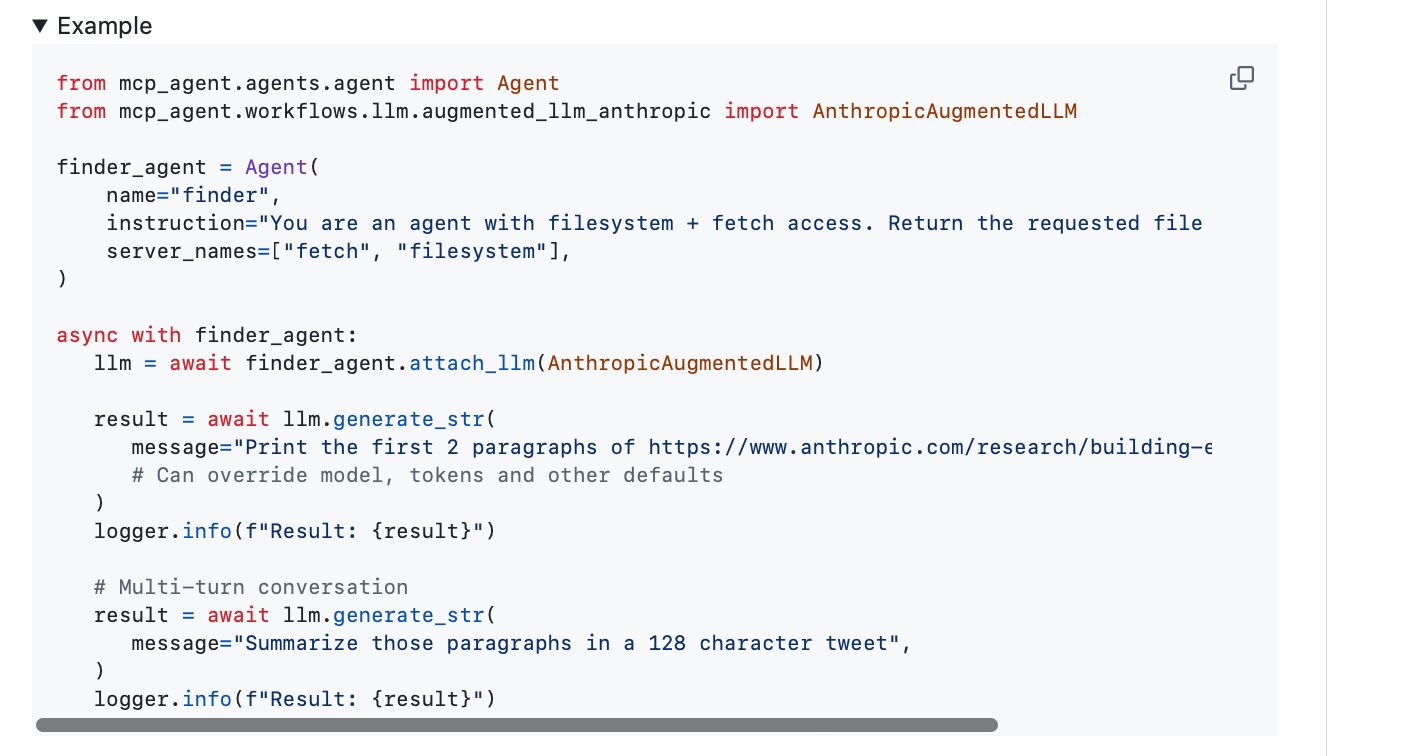

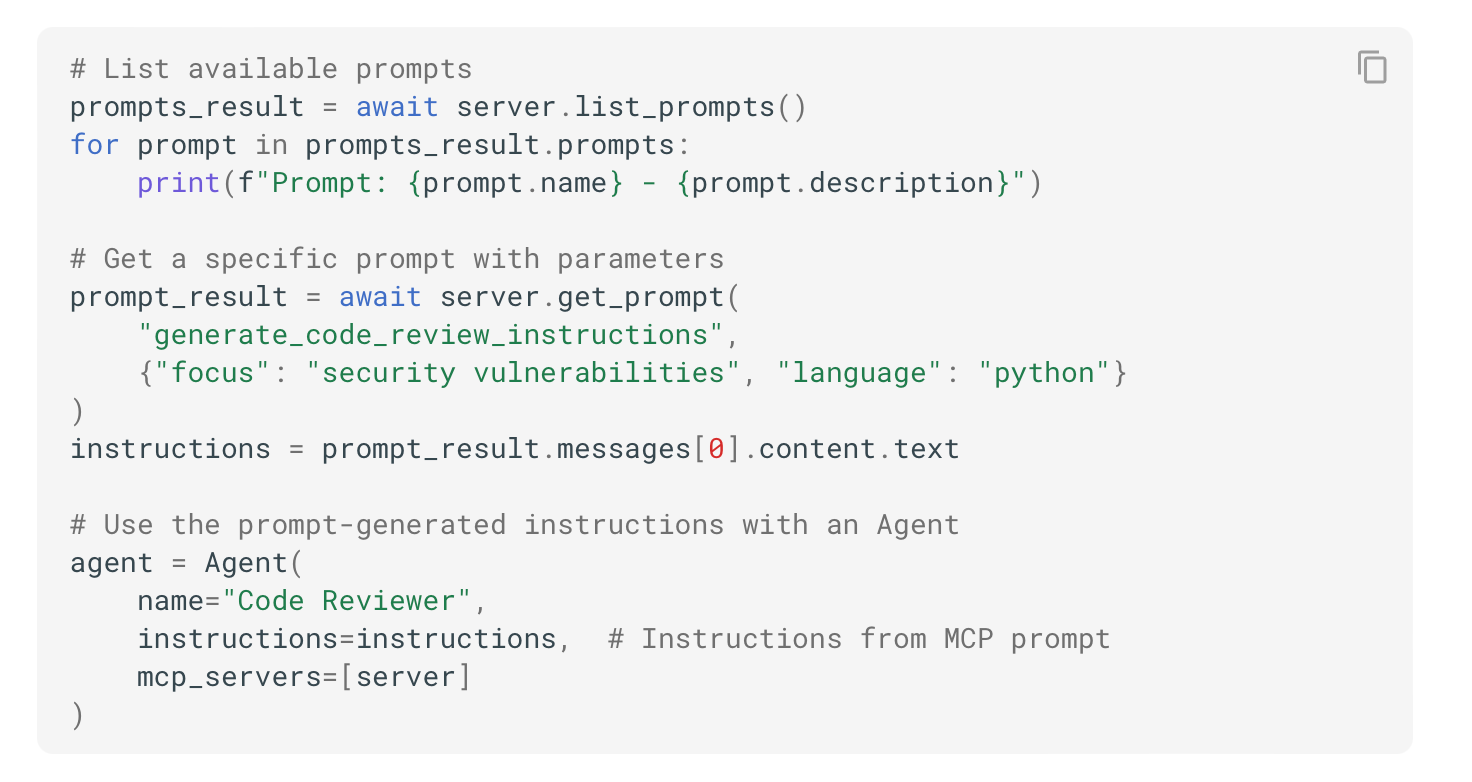

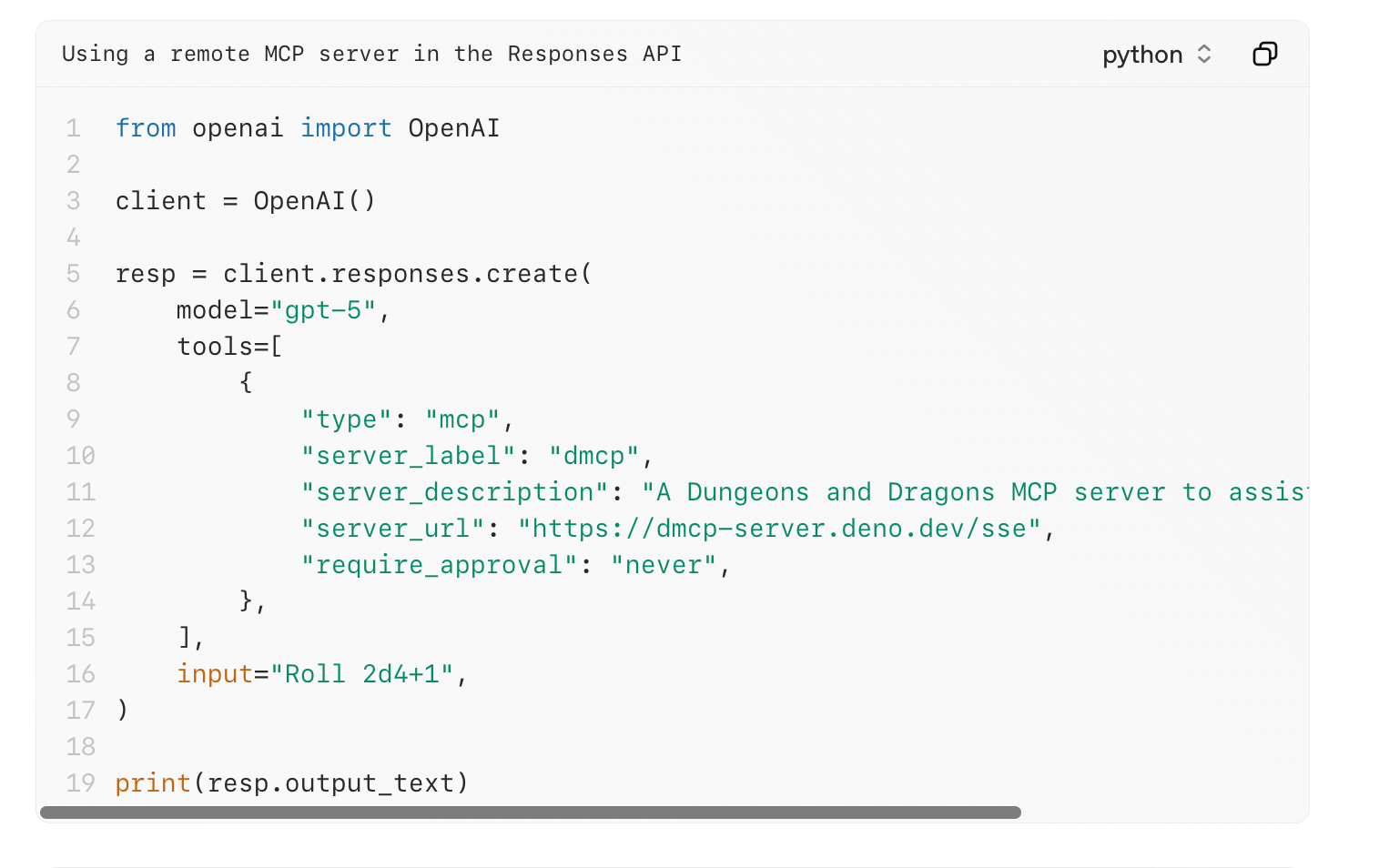

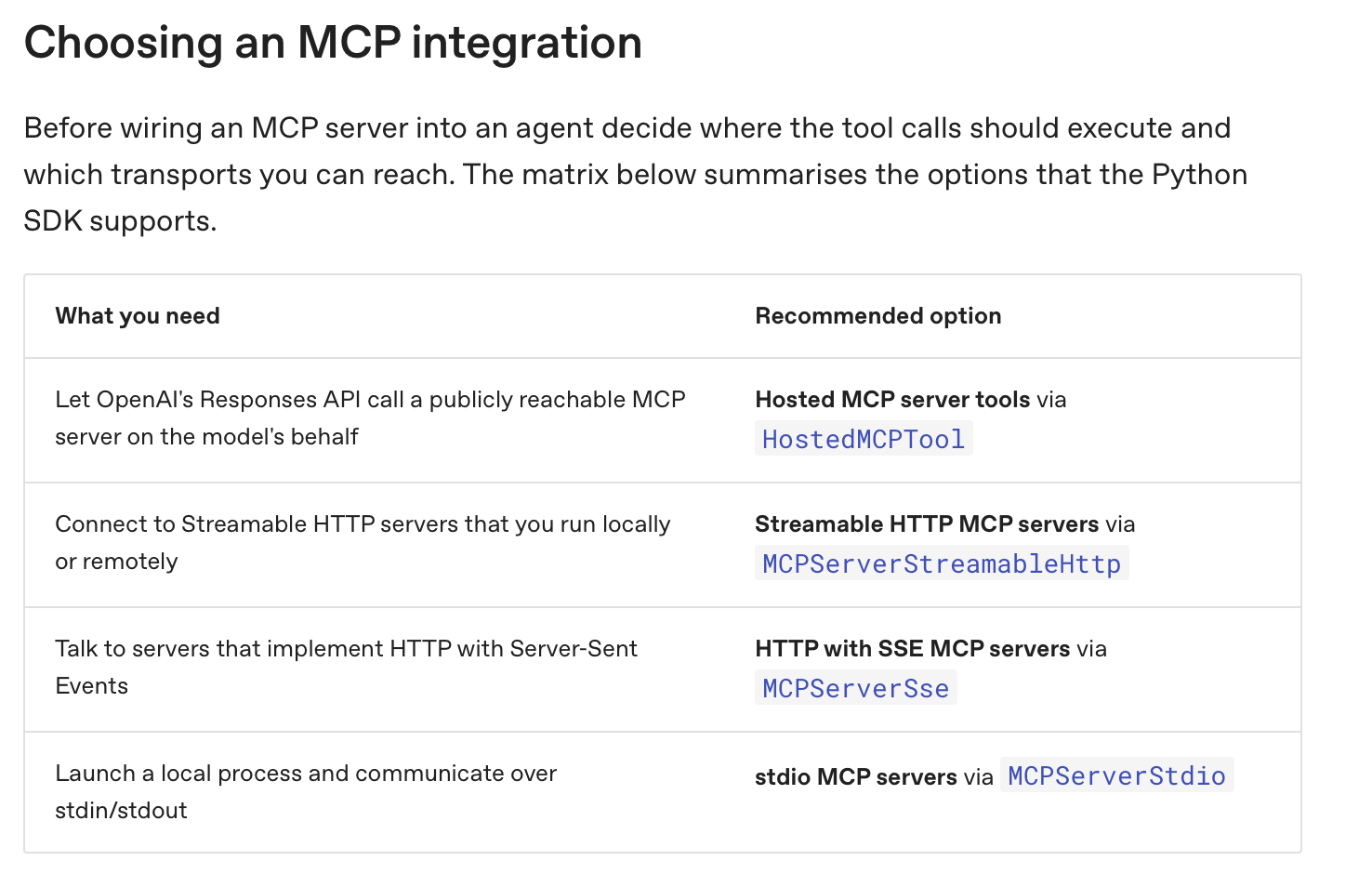

}Also for more details please refer to the documentation: https://platform.openai.com/docs/guides/function-calling.